Tens of millions of birds make migratory flights for the winter each year, often flying at night. They’re frequently picked up by the National Weather Service’s network of 159 ground-based radars, which scan the skies every 4 to 10 minutes by emitting pulses of microwaves and measuring their reflections. However, ecologists have historically struggled to make use of the resulting data sets because of their sheer size: Collected over decades, they can run to hundreds of millions of images and hundreds of terabytes.

In an effort to lighten the workload, scientists at Cornell’s Lab of Ornithology and the University of Massachusetts’ College of Information and Computer Sciences recently investigated an AI system capable of distinguishing birds from precipitation in radar images. They say that their tool, dubbed MistNet after the fine nets ornithologists use to capture migratory songbirds, not only aids with classification tasks, but can also be used to estimate birds’ flying velocity and traffic rates.

“This is a really important advance. Our results are excellent compared with humans working by hand,” said senior author Daniel Sheldon in a statement. “It allows us to go from limited 20th-century insights to 21st-century knowledge and conservation action. [AI] has revolutionized the ability of computers to mimic humans in solving similar recognition tasks for images, video, and audio.”

Sheldon and colleagues detail their work in a paper (“Measuring historical bird migration in the US using archived weather radar data and convolutional neural networks”) published in the journal Methods in Ecology and Evolution. They say they first developed an “adapter” architecture to handle the radar data’s input channels and to account for variations in elevation. (Unlike RGB images, radar data is collected on a three‐dimensional polar grid.) In compiling a training corpus, they avoided a massive human annotation effort by using noisy labels obtained from dual-polarization (i.e., horizontal and vertical polarization) radar data.
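To make the noisy-label idea concrete, here is a minimal sketch in Python. It is not the researchers’ code. It assumes the weak labels come from thresholding the dual-polarization correlation coefficient, a quantity that tends to sit close to 1 for rain and lower for birds and insects. The 0.95 cutoff, the function names, and the nested-list layout of a radar sweep are all hypothetical choices made for illustration.

```python
# Illustrative sketch only: the threshold and all names below are assumptions,
# not details confirmed by the article.
RHO_HV_RAIN_THRESHOLD = 0.95  # hypothetical cutoff on the correlation coefficient


def noisy_label(rho_hv):
    """Return a weak label for one radar pixel from its correlation coefficient."""
    if rho_hv is None:  # no echo recorded for this pixel
        return "no_data"
    if rho_hv > RHO_HV_RAIN_THRESHOLD:
        return "precipitation"
    return "biology"


def label_sweep(sweep):
    """Label every pixel in a sweep given as rows of correlation values."""
    return [[noisy_label(value) for value in row] for row in sweep]


def biology_fraction(labels):
    """Share of pixels with an echo that were labeled as biology."""
    valid = [label for row in labels for label in row if label != "no_data"]
    if not valid:
        return 0.0
    return sum(label == "biology" for label in valid) / len(valid)


if __name__ == "__main__":
    toy_sweep = [[0.99, 0.50], [None, 0.80]]
    labels = label_sweep(toy_sweep)
    print(labels)
    print(biology_fraction(labels))
```

Labels produced this way are cheap but imperfect, which is why they are called noisy. The appeal is that they can be generated automatically for recent dual-polarization scans and then used to train a model that also works on older scans lacking those channels.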

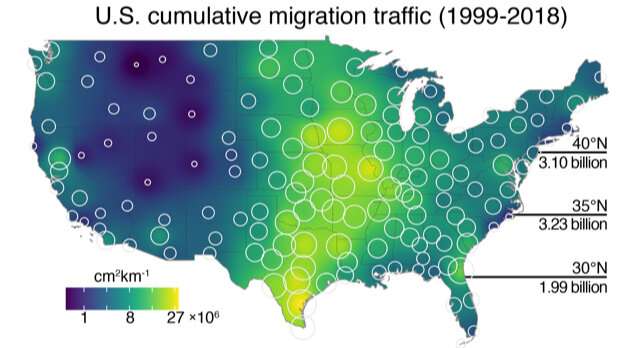

The fully trained MistNet, which the researchers say is completely autonomous, can produce fine‐grained predictions for applications from “continent‐scale” mapping to airspace usage analysis. When tested against historical and recent data from the U.S. WSR‐88D weather radar network, it correctly identified at least 95.9% of all biomass, and the team used it to make maps of where and when avian migration occurred over the past 24 years.

“We hope MistNet will enable a range of science and conservation applications. For example, we see in many places that a large amount of migration is concentrated on a few nights of the season,” said Sheldon. “Knowing this, maybe we could aid birds by turning off skyscraper lights on those nights.”

It’s not the first time AI has been used to track wildlife. DeepMind, the U.K.-based AI research lab that Google acquired in 2014 for a reported $500 million, in August detailed ecological research its science team is conducting to develop AI systems that will help study the behavior of animal species in Tanzania’s Serengeti National Park. Separately, Santa Cruz-based startup Conservation Metrics is leveraging machine learning to track African savanna elephants.