After a summer-long saga of accusations, denials, and blockbuster reporting by the American Civil Liberties Union, the dust appeared to have settled on Amazon’s Rekognition scandal. But a letter from shareholders this week rekindled the flames, urging the company, which was worth an estimated $1 trillion in September 2018, to prohibit sales of facial recognition technology like Rekognition to governments unless its board independently concludes there is no risk of civil and human rights violations.

The shareholders further claim that Rekognition, which has been piloted by police in Florida and Oregon, threatens to negatively impact Amazon’s stock price. More than 450 employees have demanded that the Seattle company halt sales of Rekognition to law enforcement agencies, presenting the policy as a talent and retention risk. And the service’s unfettered deployment puts Amazon under increased scrutiny from the U.S. Government Accountability Office, which lawmakers tasked in June with studying whether “commercial entities selling facial recognition adequately audit use of their technology.”

When reached for comment, an Amazon spokesperson pointed to a pair of blog posts penned by Matt Wood, general manager of deep learning and AI at Amazon Web Services (AWS), this past summer. In them, Wood pointed out that there has been “no reported law enforcement abuse of Amazon Rekognition” and argued that Rekognition has “materially benefit[ed]” society by “inhibiting child exploitation … and building educational apps for children” and by “enhancing security through multi-factor authentication, finding images more easily, or preventing package theft.”

But any restraint current AWS customers have chosen to exercise is by no means a guarantee against future — or present — abuses.

Case in point: In September, a report in The Intercept revealed that IBM worked with the New York City Police Department to develop a product that allowed officials to search for people by skin color, hair color, gender, age, and various facial features. Using “thousands” of photographs from roughly 50 cameras provided by the NYPD, its AI learned to identify clothing color and other bodily characteristics.

Some foreign governments have gone further.

According to a report by Gizmodo, the European Union plans to trial an AI system — dubbed iBorderCtrl — that will vet “suspicious” travelers in Hungary, Latvia, and Greece, in part by analyzing 38 facial micro-gestures. The system can reportedly be customized according to gender, ethnicity, and language.

Later this year, Singapore agency GovTech plans to deploy surveillance cameras linked to facial recognition software on over 100,000 lamp posts. Yitu Technology — a Chinese company weighing a bid to supply the software — says its solution can identify over 1.8 billion faces.

China’s facial recognition plans are perhaps the most ambitious to date. Efforts have long been underway in the country of 1.3 billion — which has an estimated 200 million surveillance cameras — to build a nationwide infrastructure capable of identifying people within three seconds with 90 percent accuracy.

Singapore, the EU, and others claim that facial recognition technology has the potential to deter crime, perform crowd analytics, and aid in antiterrorism operations. But numerous research efforts, including a 2012 study showing that facial algorithms from vendor Cognitec performed 5 to 10 percent worse on African Americans than on Caucasians, have demonstrated current systems’ imprecision and susceptibility to bias. And, as Microsoft president Brad Smith noted in a blog post late last year, the normalization of facial recognition is a slippery slope toward a totalitarian dystopia.

“Imagine a government tracking everywhere you walked over the past month without your permission or knowledge,” he wrote. “Imagine a database of everyone who attended a political rally that constitutes the very essence of free speech. Imagine the stores of a shopping mall using facial recognition to share information with each other about each shelf that you browse and product you buy, without asking you first. This has long been the stuff of science fiction and popular movies — like Minority Report, Enemy of the State, and even 1984 — but now it’s on the verge of becoming possible,” Smith said.

So what can be done about it?

At a December event in Washington, D.C., hosted by the Brookings Institution, Smith proposed that companies review the results of facial recognition in “high-stakes scenarios,” such as when it might restrict a person’s movements, and called on legislators to investigate facial recognition technologies and craft policies guiding their usage.

“In a democratic republic, there is no substitute for decision-making by our elected representatives regarding the issues that require the balancing of public safety with the essence of our democratic freedoms,” he said. “We live in a nation of laws, and the government needs to play an important role in regulating facial recognition technology.”

Brian Brackeen, CEO of facial recognition software company Kairos, said in a hearing with the Congressional Black Caucus last year that standards should be put in place to ensure baseline accuracy, and to avoid misuse by foreign adversaries.

“We have to have AI tools that are not going to false-positive on different genders or races more than others, so let’s create some kind of margin of error and binding standards for the government,” he told VentureBeat in an interview.

There’s evidence the discourse has had a persuasive effect in at least a few cases. In July 2018, working with experts in artificial intelligence (AI) fairness, Microsoft revised and expanded the datasets it uses to train Face API, a Microsoft Azure API that provides algorithms for detecting, recognizing, and analyzing human faces in images. And Google recently said it would avoid offering a general-purpose facial recognition service until the “challenges” had been “identif[ied] and address[ed].”

“Like many technologies with multiple uses, [it] … merits careful consideration to ensure its use is aligned with our principles and values, and avoids abuse and harmful outcomes,” Kent Walker, senior vice president of global affairs, wrote in a blog post.

But there’s more work to be done. In the midst of government dysfunction in the U.S. and abroad and ethics-skirting advances in AI, it’s critical that regulators — and organizations — pursue mediating laws and policies before it’s too late.

For AI coverage, send news tips to Kyle Wiggers and Khari Johnson — and be sure to bookmark our AI Channel.

Thanks for reading,

Kyle Wiggers

AI Staff Writer

P.S. Please enjoy this video of Ubtech’s Walker robot from the 2019 Consumer Electronics Show.

From VB

Facebook and Stanford researchers design a chatbot that learns from its mistakes

In a new paper, scientists at Facebook AI Research and Stanford describe a chatbot that learns from its mistakes over time.

Robomart to roll out driverless grocery store vehicles in Boston area this spring

Robomart is partnering with the Stop & Shop grocery store chain to make deliveries with its driverless grocery store vehicles this spring.

Alexa can now read your news like a newscaster

Amazon’s Alexa assistant can now read the news in the style of a newscaster, thanks to a novel machine learning training technique.

Project Alias feeds smart speakers white noise to preserve privacy

Project Alias is a crowdsourced privacy shield for smart speakers that prevents intelligent assistants from listening in on conversations inadvertently.

Clusterone raises $2 million for its DevOps for AI platform

Clusterone raised $2 million to take care of DevOps for developers and data scientists more interested in AI than infrastructure management.

Badger will deploy robots to nearly 500 Giant, Martin’s, and Stop & Shop stores in the U.S.

Badger Technologies is teaming up with Retail Business Services to supply more than 500 Giant, Martin’s, and Stop & Shop stores with robot employees.

Beyond VB

A New Human Ancestor Has Been Discovered Thanks To Artificial Intelligence

An international team of researchers has examined human DNA using deep learning algorithms to analyze genetic clues to human evolution for the very first time. (via IFL Science)

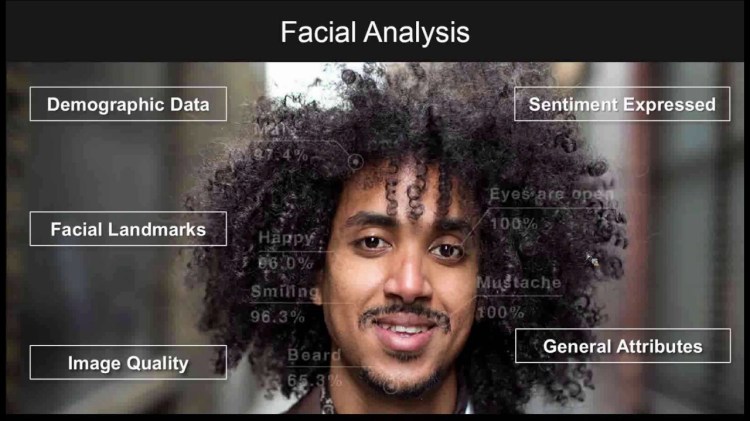

Facial and emotional recognition; how one man is advancing artificial intelligence

Scott Pelley reports on the developments in artificial intelligence brought about by venture capitalist Kai-Fu Lee’s investments and China’s effort to dominate the AI field. (via CBS News)

A country’s ambitious plan to teach anyone the basics of AI

In the era of AI superpowers, Finland is no match for the US and China. So the Scandinavian country is taking a different tack. (via MIT Tech Review)

The Weaponization Of Artificial Intelligence

Technological development has become a rat race. In the competition to lead the emerging technology race and the futuristic warfare battleground, artificial intelligence (AI) is rapidly becoming the center of global power play. (via Forbes)