Recent revelations about Cambridge Analytica’s use of Facebook data have a lot of people rightfully concerned about how their personal information is collected and used online. But while Facebook is trying to position the Cambridge Analytica breach (and the collection of user data by other third-party apps) as a function of bad actors on its platform, those actors wouldn’t be attracted to Facebook data in the first place if it weren’t for its power.

In other words, the cardinal sin behind the Cambridge Analytica breach isn’t unethical developer behavior. The cardinal sin is how we as a society have allowed the tech industry to collect and handle user data. Facebook controls a significant component of its users’ social lives, both online and off. Google controls what we know and how we get work done.

This control extends not only to the data we see, like photos, videos, articles, and screeds from conspiracy theorist relatives, but also to the data we don’t see. These companies can view how we engage with data: what we find worthwhile, who we find interesting, and so on.

To get a touch dramatic, this is incredibly concerning from a philosophical standpoint, since we don’t have control over information about who we are. Our interactions online are as significant and real as those we have in meatspace, but we only genuinely control information about the latter. It’s something that makes me sincerely worried about the future.

There’s another reason to be worried from a far less intellectual standpoint, however: Our lack of control over this data makes it far harder for us to benefit from it. For example, Siri may never be able to make a decision based on information stored in my Google and Facebook accounts, and there’s nothing I can do about it.

I’d happily give Siri all the data I could about my dining preferences if Apple assured me it would be used only for booking me reservations through my Apple Watch. As it stands, I can’t do that, because that information is trapped inside Google, Foursquare, Yelp, OpenTable, Resy, and yes, Facebook.

Sure, Apple’s assistant integrates with two of the companies on that list to help arrange dining for its users. But that doesn’t provide the sort of deep, personal understanding necessary to turn “Hey Siri, book me dinner for two tonight” into a reservation that perfectly fits my schedule and preferences without further intervention.

Part of this has to do with tech companies wanting to cement their power using the network effects of their data. If I can’t get the information and functionality I want through Siri, I might be willing to switch to the Google Assistant or Alexa. Data control leads to revenue; just look at the quarterly financials of tech companies like Google, Facebook, and Microsoft. But the Cambridge Analytica fiasco also shows how bad actors can abuse data portability under false pretenses.

So what do we do? Data isolation harms the creation of intelligent experiences and increases the power of gigantic companies. Opening up data access provides the potential for abuse. Both are problems worth tackling, in equal measure.

In an ideal world, I’d like to see us shift to a centralized platform of user data that companies build applications upon. Users would grant apps the ability to read and write information to that platform but retain complete ownership of all the information therein. Such a platform would allow for granular and specific access to data, and make it possible for users to migrate from one application to another.

If companies want to make money using data to sell advertisements, they would have to be up-front with people about how they plan to use it. That would codify the value exchange that now happens implicitly with so many companies, which collect more data than many people realize in order to improve ad targeting.

I’ll be the first one to acknowledge that an independent data platform is a pipe dream with far too many hurdles to creation and adoption, riddled with user experience challenges. But I think there’s a middle path to get some of the benefits of such a system in our current tech ecosystem.

First, companies should be more transparent about the data they collect, and programmatically restrict it to only the purposes for which they acquired it. If they want to do something new with that data, they should ask users for permission at that point.

That would have helped with the Cambridge Analytica case, in which data collected for a survey application was subsequently used for targeting political advertisements. I’m sure some people would be perfectly happy providing their personal information for political advertising, but that is a choice they should be able to make openly and honestly.

Second, companies that collect massive amounts of user data should be required to make a copy of all that data available to users upon request, not just the subset of information they deem appropriate. That way, individuals can audit what they’re giving away and take their data with them for use with another provider’s machine learning systems, should they so choose.

I’ll still be surprised if something like that comes to pass. But I know one thing for sure: Unless we change the way tech companies handle user data, we’ll see more problems like the ones that came with Cambridge Analytica.

For AI coverage, send news tips to Blair Hanley Frank and Khari Johnson, and guest post submissions to Cosette Jarrett — and be sure to bookmark our AI Channel.

Thanks for reading,

Blair Hanley Frank

AI Staff Writer

P.S. Please enjoy this video of Google AI chief Jeff Dean discussing the applications of machine learning at the company’s TensorFlow Dev Summit:

FROM THE AI CHANNEL

HomePod sales reportedly slow as Apple works on fixing Siri and wood rings

Initial sales of Apple’s HomePod have underperformed — even measured against the company’s conservative forecasts — leading Apple to cut manufacturing orders and lower forecasts, Bloomberg reports. After debuting to mediocre reviews, the smart speaker was found to damage wood surfaces and stumble over Siri requests, and Apple is still working on fixes. The report cites both […]

Overblown expectations for autonomous cars could force the next AI winter

GUEST: Artificial intelligence has long had to manage expectations of its potential benefits versus what it is capable of providing at any point in time. This tension slowed down the overall progress and benefits of AI by introducing skepticism, reducing funding, and limiting adoption of the technology. Ideas around AI date back to even before the 1950s, when […]

Mark Zuckerberg is betting AI will address Facebook’s biggest problems

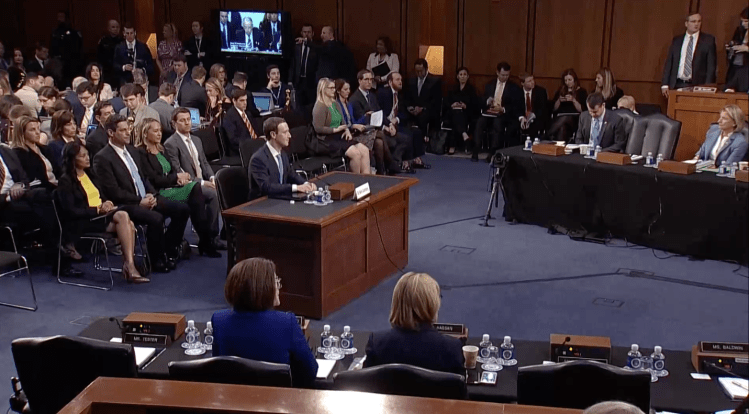

During Facebook CEO Mark Zuckerberg’s testimony to members of the U.S. Senate on Tuesday, the main topic of discussion was regulation. Zuckerberg was asked why Facebook should be trusted to self-regulate, what kind of regulation he would like to see, whether he would be willing to endorse the CONSENT Act (which would allow the FTC to […]

How a strong board of directors keeps AI companies on an ethical path

GUEST: Following the corporate corruption scandals of the early 2000s, then-Securities and Exchange Commission chairman William Donaldson said determining the company’s moral DNA “should be the foundation on which the Board builds a corporate culture based on a philosophy of high ethical standards and accountability.” Today’s crisis of confidence in technology companies, especially those controlling deep pools […]

Mozilla Foundation: Tech giants like Amazon and Facebook should be regulated, disrupted, or broken up

The Mozilla Foundation today released its inaugural Internet Health Report, which calls for the regulation of tech giants like Google, Amazon, and Facebook. The report debuts the same day as Facebook CEO Mark Zuckerberg is scheduled to testify before a gathering of two U.S. Senate committees, his first appearance before Congress. Though tech giants in the […]

BEYOND VB

China now has the most valuable AI startup in the world

SenseTime Group Ltd. has raised $600 million from Alibaba Group Holding Ltd. and other investors at a valuation of more than $3 billion, becoming the world’s most valuable artificial intelligence startup. (via Bloomberg)

FDA approves AI-powered diagnostic that doesn’t need a doctor’s help

Marking a new era of “diagnosis by software,” the US Food and Drug Administration on Wednesday gave permission to a company called IDx to market an AI-powered diagnostic device for ophthalmology. (via MIT Tech Review)

Researchers develop device that can ‘hear’ your internal voice

Researchers have created a wearable device that can read people’s minds when they use an internal voice, allowing them to control devices and ask queries without speaking. (via The Guardian)

Killer robots: Pressure builds for ban as governments meet

They will be “weapons of terror, used by terrorists and rogue states against civilian populations. Unlike human soldiers, they will follow any orders however evil,” says Toby Walsh, professor of artificial intelligence at the University of New South Wales, Australia. (via The Guardian)