
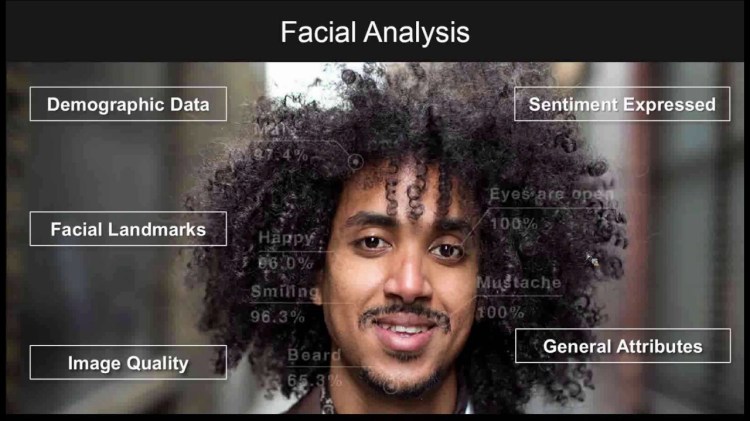

A little over a week after the furor surrounding Google's involvement in Project Maven, a Department of Defense program that uses machine learning to analyze drone footage, showed signs of abating, another machine learning controversy returned to the headlines: local law enforcement agencies' use of Amazon's Rekognition, a computer vision service with facial recognition capabilities.

In a letter addressed to Amazon CEO Jeff Bezos, 19 groups of shareholders expressed concern that Rekognition's facial recognition capabilities will be misused in ways that "violate [the] civil and human rights" of "people of color, immigrants, and civil society organizations." They also argued that it sets the stage for sales of the software to foreign governments and authoritarian regimes.

Amazon, for its part, said in a statement that it will “suspend … customer’s right to use … services [like Rekognition]” if it determines those services are being “abused.” It has so far declined, however, to define the bright-line rules that would trigger a suspension.

AI ethics is a nascent field. Consortia and think tanks like the Partnership on AI, Oxford University’s AI Code of Ethics project, Harvard University’s AI Initiative, and AI4All have worked to establish preliminary best practices and guidelines. But Francesca Rossi, IBM’s global leader for AI ethics, believes there’s more to be done.


“Each company should come up with its own principles,” she told VentureBeat in a phone interview. “They should spell out their principles according to the space that they’re in.”

There's more at stake than government contracts. As AI researchers at tech giants like Google, Microsoft, and IBM turn their attention to health care, the opaque nature of machine learning algorithms risks alienating the very people who stand to benefit: patients.

People might have misgivings, for example, about systems that forecast a patient’s odds of survival if the systems don’t make clear how they’re drawing their conclusions. (One such AI from the Google Brain team takes a transparent approach, showing which PDF documents, handwritten charts, and other data informed its results.)

“There’s a difference between a doctor designing therapy for a patient [with the help of AI] and algorithms that can recognize books,” Rossi explained. “We often don’t even recognize our biases when we’re making decisions, [and] these biases can be injected into the training data sets or into the model.”

Already, opaque policies around data collection have landed some AI researchers in hot water. Last year, the Information Commissioner’s Office, the U.K.’s top privacy watchdog, ruled that the country’s National Health Service improperly shared the records of 1.6 million patients in an AI field trial with Alphabet subsidiary DeepMind.

“Users want to know that … AI has been vetted,” Rossi said. “My vision for AI is an audit process — a very scrupulous process through which somebody credible has looked at the system and analyzed the model and training data.”

Equally important, according to Rossi, is ensuring that legislators, legal experts, and policy groups have a firm understanding of both AI's potential rewards and its potential risks.

“Policymakers have to be educated about what AI is, the issues that might come up, and possible concerns,” she said. “And for the people who build AI, it’s important to be transparent and clear about what they do.”

For AI coverage, send news tips to Khari Johnson and Kyle Wiggers, and guest post submissions to Cosette Jarrett.

Thanks for reading,

Kyle Wiggers

AI Staff Writer

P.S. Please enjoy this video of a neural network from Nvidia Research that can apply a slow-motion effect to any video:

From VB

Salesforce develops natural language processing model that performs 10 tasks at once

Researchers at Salesforce’s Einstein lab have developed a natural language processing technique that can perform ten different kinds of tasks without compromising performance or accuracy.

Yelp’s Popular Dishes AI highlights the food everyone’s talking about

Yelp’s new Popular Dishes feature uses artificial intelligence to highlight the most sought-after menu items in restaurants around the world.

IBM debuts Project Debater, experimental AI that argues with humans

In what may be the biggest rollout of conversational AI from IBM since Watson, IBM Research today debuted Project Debater, an experimental conversational AI with a sense of humor, little tact, and occasionally powerful arguments. Training for Project Debater began six years ago, but the AI system only gained the ability to participate in debates […]

Baidu Research’s breast cancer detection algorithm outperforms human pathologists

Baidu Research today announced it has developed a deep learning algorithm that in initial tests outperforms human pathologists in its ability to identify breast cancer metastasis. The convolutional neural net was trained by splitting 400 large images into grids of tens of thousands of smaller images, then randomly selecting 200,000 of those smaller images. The […]

This Nvidia neural network can apply slow motion to any video

Researchers at Nvidia have developed a machine learning algorithm that can apply slow motion to any video.

AI Weekly: Google’s research center in Ghana won’t be the last AI lab in Africa

This year, we have seen an acceleration of Silicon Valley tech giants opening AI research labs around the world as they seek to gain traction among researchers and fulfill their global ambitions. In the past six months or so, Google brought labs to China and France, Facebook opened labs in Pittsburgh and Seattle, and Microsoft announced plans to open labs near universities in Berkeley, California, and Melbourne, Australia.

Beyond VB

Is There a Smarter Path to Artificial Intelligence? Some Experts Hope So

For the past five years, the hottest thing in artificial intelligence has been a branch known as deep learning. The grandly named statistical technique, put simply, gives computers a way to learn by processing vast amounts of data. Thanks to deep learning, computers can easily identify faces and recognize spoken words, making other forms of humanlike intelligence suddenly seem within reach. (via The New York Times)

A machine has figured out Rubik’s Cube all by itself

Yet another bastion of human skill and intelligence has fallen to the onslaught of the machines. A new kind of deep-learning machine has taught itself to solve a Rubik’s Cube without any human assistance. (via MIT Technology Review)

Rise of the machines: has technology evolved beyond our control?

Technology is starting to behave in intelligent and unpredictable ways that even its creators don’t understand. As machines increasingly shape global events, how can we regain control? (via The Guardian)

The Brilliant Ways UPS Uses Artificial Intelligence, Machine Learning And Big Data

In a business where shaving off a mile per day per driver can result in savings of up to $50 million per year, UPS has plenty of incentive to incorporate technology to drive efficiencies in every area of its operations. According to UPS’ Chief Technology Officer Juan Perez, “Our business drives technology at UPS.” Here are just a few of the ways UPS uses big data and artificial intelligence (AI) to prepare for the 4th Industrial Revolution. (via Forbes)