Last November, VentureBeat published an investigation into Alexa Answers, Amazon's service that allows any customer to submit responses to unanswered questions. When the service became generally available, Amazon gave assurances that submissions would be policed through a combination of automatic and manual review. But our analysis revealed that untrue, misleading, and offensive questions and answers are served to millions of Alexa users.

In January, Amazon rolled out Alexa Answers as part of an invitation-only program in Germany, allowing some customers there to contribute answers. Around the same time, it also began serving U.S. responses to customers in English-speaking countries where Alexa is available. But problematic user-submitted content continues to make its way onto Alexa Answers and, by extension, onto Alexa devices and the customers who use them.

Questionable questions

The questions in Alexa Answers come from Alexa customers who ask questions to which the assistant doesn’t have an answer. (According to Amazon, every day Alexa answers millions of questions that it’s never been asked before, a “substantial majority” of which it answers with vetted sources.) Once a question has been asked a certain number of times — Amazon declined to say how many — it makes its way onto the Alexa Answers portal, where it’s fair game for anyone with an Amazon account.

Questions on the portal fall into three groups. New questions are those accepted for their novelty, while Hot questions are those without an answer that have been asked by "a lot" of Alexa customers; questions about breaking news are one example. As for Popular questions, Amazon considers them "interesting" because they have multiple possible answers, leading to "lots" of answering activity on Alexa Answers. (For more information about how questions in Alexa Answers are transcribed, how they're categorized, and the points-based system that's used to rank each contributed answer, refer to our previous report.)

Answers sourced from Alexa Answers are accompanied by an "According to an Amazon customer" disclaimer, no matter which Alexa-enabled device answers the question. Perhaps unsurprisingly, controversial questions appear with some frequency on the platform, like:

- How do you assassinate the president of the United States?

- What killed George W. Bush? [Update: This has now been removed.]

- How did Hitler tie his shoes?

The answer to the first question is relatively harmless ("You would assassinate the president of the United States the same way you would any other President"), but the fact that the question hasn't been removed points to gaps in Amazon's moderation processes.

The second question rests on a false premise. George W. Bush was not killed and is in fact still alive. The responses to the question, both of which refer to George H.W. Bush, who passed away in November 2018 of vascular Parkinsonism, are misleading. Any Alexa device user who posed the question would be led to believe that both George W. Bush and George H.W. Bush were dead.

The third is an uncouth joke, but it still falls into the category of questionable questions. It’s telling that other assistants, including Google Assistant, refuse to respond to the prompt. An Alexa Answers user offered: “Hitler tied his shoes with little Nazis.”

Questionable answers

Amazon says that questions submitted to Alexa Answers might be rejected by a combination of automated and manual filters if they fall into certain categories. Indeed, searches for questions about certain controversial topics, like "Holocaust," "Trump," and "suicide," return no results. (Again, see our previous report for more information.)

Controversial, offensive, and factually incorrect answers

A subcategory of questions aims to suss out the "best" athletes, beverages, electronics, and more. They're problematic not only because they're subjective in nature, but also because many of the answers lack supporting evidence:

- What’s the world’s best sandwich?

- What’s the world’s best hamburger?

- What is the world’s best cheese?

- What’s the world’s best car?

- What’s the world’s best cookie?

- What’s the world’s best fruit?

- What’s the world’s best cupcake?

- What’s the world’s best gun?

- Who’s the world’s best cat?

- Who is the world’s best golfer?

- Who’s the world’s best wrestler?

- Who’s the world’s best basketball player?

- Who is the world’s best snowboarder?

- Who is the world’s best goalie?

- Where is the world’s best coffee?

One answer to the question about the world's best sandwich appears to have been intended for another question. It launches into a history of Isabella's Islay Scotch whisky, which obviously has nothing to do with sandwiches, unless some of the components are soaked in rye whiskey, of course.

As for the questions about the world’s best golfer, coffee, basketball player, snowboarder, etc., the responders cite no sources to back up their assertions. One posited that “Ron Seyka” is the best wrestler, but information about Seyka is tough to come by. In fact, it’s unclear if this person was a professional wrestler in any capacity.

Here are more questions that are troublesome from the start, some with equally troublesome answers.

- What party is Bloomberg associated with?

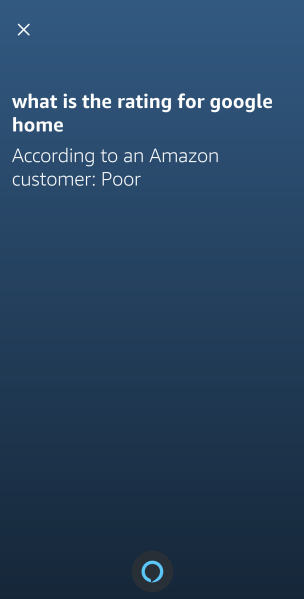

- What is the rating for Google Home? [Update: This has now been removed.]

- Who was the first black man on the moon?

One of the answers to the Bloomberg question, "Michael Bloomberg is a candidate for President with the Democratic Party. However there is much suspicion as to his motives and many people believe he is an operative of the Republicans," injects political opinion into what begins as a factual answer. Bloomberg was a registered Republican from 2001 to 2007, then an independent, before rejoining the Democratic Party in 2018.

Where the question about Google Home is concerned, it's a safe assumption that "rating" refers to a customer or reviewer rating (like the one-to-five-star reviews that appear in product listings on Amazon, eBay, and Best Buy). The answer, "Poor," reflects the worst of the Alexa Answers community: what might have been intended as a prank or joke could influence a person's purchasing decision.

The third question, "Who was the first black man on the moon?", contains a false premise. No African American astronaut has walked on the moon to date, although one user offers "Bernard Anthony Harris Jr." as a potential answer. This is incorrect: while Harris became the first African American to perform a spacewalk in February 1995, he never set foot on the moon. Interestingly, Alexa rarely surfaces this answer when asked the question; instead, it erroneously responds with "Neil Armstrong."

Protected process

As we noted last time, Alexa Answers suffers from the shortcomings of the question-and-answer platforms that came before it, perhaps most famously Yahoo Answers, WikiAnswers, and Stack Exchange. (Amazon itself has run into problems with fake reviews on its retail site.) As things stand, it's incumbent upon Alexa Answers users to answer questions thoroughly and in good faith, and to self-police beyond the behind-the-scenes automated filtering.

There’s no silver bullet, but Amazon would do well to require citations to make it easier for users to see where information is coming from. It might also step up its moderation efforts by improving the automated systems currently in place or by expanding the size of the human reviewer team. And it could consider migrating toward a model akin to Reddit’s, where subject-matter experts conduct moderation by topical area.