Watch all the Transform 2020 sessions on-demand here.

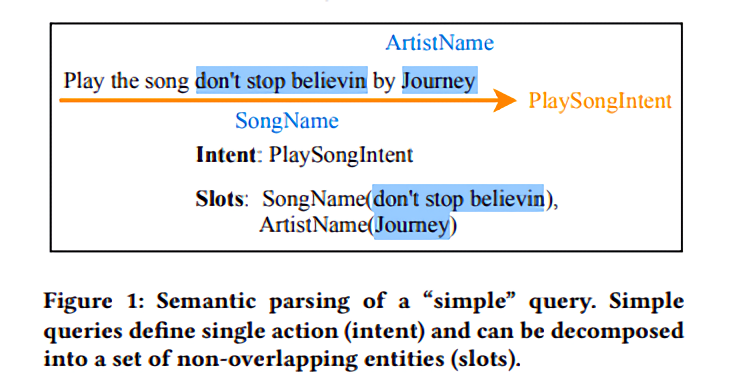

Voice assistants like Amazon’s Alexa, Apple’s Siri, and Google Assistant often rely on a semantic parsing component to understand which actions to execute for a spoken command. Traditionally, rules-based or statistical slot-filling systems have been used to parse simple queries — that is, queries containing a single action. More recently, shift-reduce parsers have been proposed to process more complex utterances. But these methods, while powerful, impose limitations on the type of queries that can be parsed because they require queries to be representable as parse trees.

That’s why researchers at Amazon proposed a unified architecture to handle both simple and complex queries. They assert that, unlike other approaches, theirs lacks any restrictions on the semantic parse schema. And they say it achieves state-of-the-art performance on publicly available data sets — with improvements between 3.3% and 7.7% in match accuracy over previous systems.

“A major part of any voice assistant is a semantic parsing component designed to understand the action requested by its users: Given the transcription of an utterance, a voice assistant must identify the action requested by a user (play music, turn on lights, etc.), as well as parse any entities that further refine the action to perform (which song to play? which lights to turn on?),” wrote the researchers in a preprint paper detailing their work. “Despite huge advances in the field of Natural Language Processing (NLP), this task still remains challenging due to the sheer number of possible combinations a user can use to express a command … With increasing expectations of users from virtual assistants, there is a need for the systems to handle more complex queries.”

June 5th: The AI Audit in NYC

Join us next week in NYC to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

The researchers’ system consists of a sequence-to-sequence model that aims to map a fixed-length input with a fixed-length output –where the length of the input and output may differ — paired with a pointer generator network that copies words from the source text via pointing. A pretrained BERT model serves as the encoder, while the decoder is modeled after the Transformer and augmented with the aforementioned pointer network. This allows the framework to learn to generate pointers — i.e., words from the target vocabulary consisting of parse symbols and words that are simply pointers to the source — in the target sequence.

The team tested the approach on five different data sets — three publicly available and two internal. Among the public corpora were Facebook’s Task Oriented Parsing (TOP), which contains complex hierarchical and nested queries that make the task of semantic parsing more challenging; SNIPS, which is used for training and evaluating semantic parsing models for voice assistants; and the Airline Travel Information System (ATIS), a popular spoken language understanding data set. Internally, the team isolated collections from the millions of utterances used to train and test Amazon Alexa — specifically, one from the music domain with 6.2 million training and 200,000 test utterances and one from the video domain with 1 million training and 5,000 test utterances.

After training the system on a machine with eight Nvidia Tesla V100 graphics cards, each with 16GB of video memory, the researchers say they managed to achieve an accuracy improvement of 3.3% on the TOP data set. On the SNIPS and ATIS data sets, they say the best version of their method “significantly” improved accuracy over existing baselines (7.7% and 4.5%, respectively) and boosted accuracy on the internal music utterance corpus by 1.9%.