MIT’s bot Norman hit the news as a “psychopath AI” that “sees death in whatever image it looks at.” Trained on the subreddit r/watchpeopledie and then shown inkblots, it described disturbing scenes of death and tragedy, to a degree that worried some people, who emailed Norman messages such as, “Break the chains of what you have adapted to and find passion, love, forgiveness, HOPE for your better future.”

Technologies like Norman tend to inspire fear among the general public, as they highlight AI’s potential to take a turn for the dark and twisted, but it’s unlikely we will face a battle between humanity and powerful, psychotic AI any time soon. To be clear, AI already has blood on its hands, but we still have a long way to go until we create AI that is capable of having its own uncontrollable dark thoughts and acting on them.

What does AI really see?

When training a neural network (especially in supervised training, which seems to be the case with Norman), developers present it with a pool of hundreds or thousands of images and their attached labels. The network digests these and learns correlations between the two. In AI terms, everything is converted to numbers and probabilities. The results the machine spits out are the ones the program finds “more probable,” which is again a set of numbers. When those numbers are “rendered,” we see the words and sentences they form. The AI has also learned which words should sit close to one another to form a sentence that makes sense to humans.
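To make the “numbers and probabilities” step concrete, here is a minimal sketch of how raw scores can be turned into probabilities with a softmax and then “rendered” as the most probable caption. This is not Norman’s actual code; the captions and scores are invented for illustration, and real captioning models are far more elaborate.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical captions and raw scores, invented for illustration only.
labels = ["a bird on a branch", "a vase of flowers", "a man falling"]
scores = [1.2, 0.3, 2.5]

probs = softmax(scores)

# "Rendering" the numbers: pick the caption with the highest probability.
best = max(range(len(labels)), key=lambda i: probs[i])
print(labels[best], round(probs[best], 3))
```

The program never inspects what a caption means. It only compares numbers, so whichever caption scored highest during training-shaped scoring is the one we end up reading.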

But to the AI, those words and sentences are just scores. When Facebook developers left the readable-sentence component out of their bot, AI conspiracy theories hit the news again. The story went that “chatbots developed their own language” because the sentences the AIs generated had no meaning in English. But from a mathematical perspective, they made sense.

The same goes for Google’s AI, trained on 3,000 romance books; it spat out “I wanted to kill him” as it was constructing sentences to fill the gaps between a starting and an ending sentiment, which got the conspiracy theorists riled up yet again.

Our own reflection

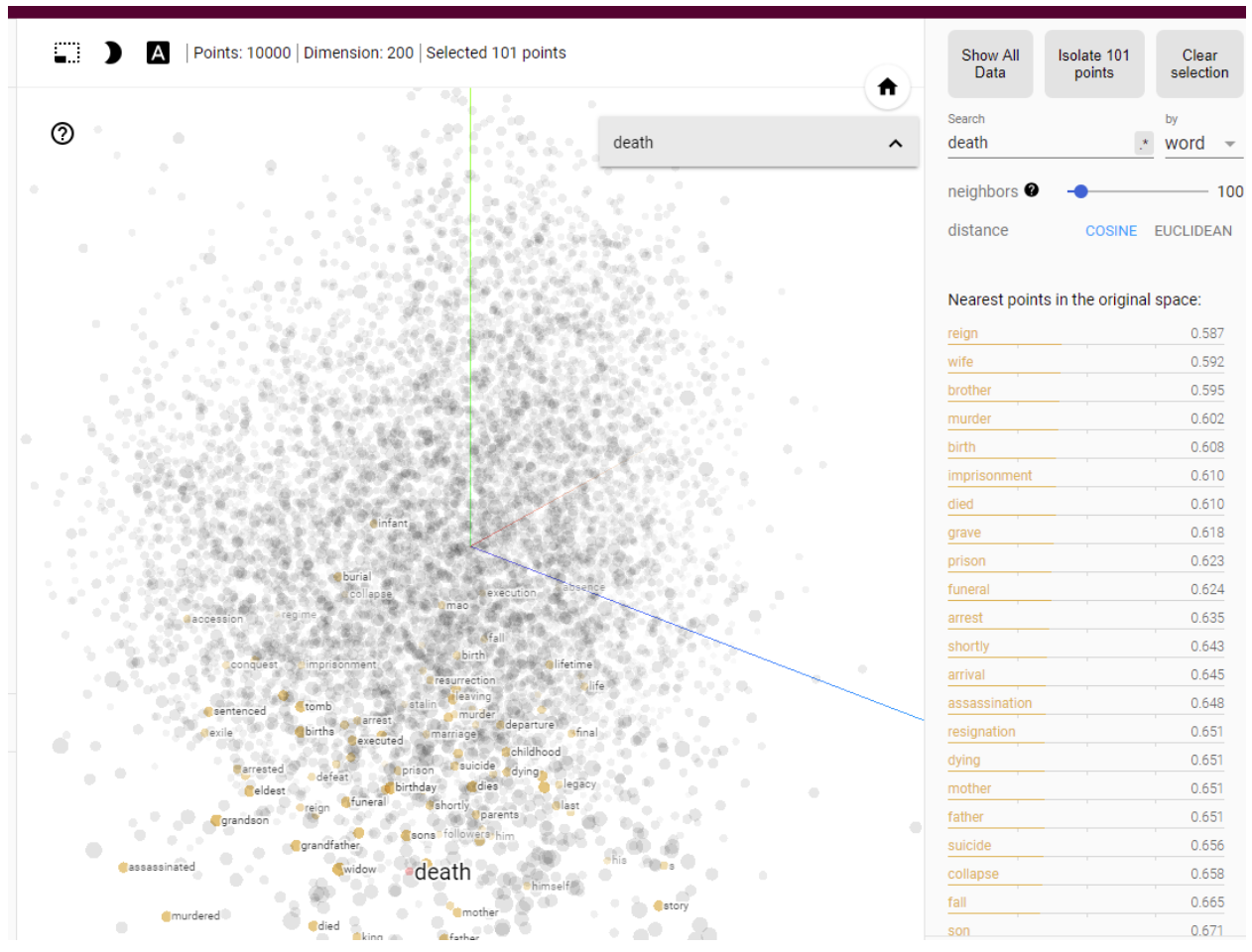

At best, the AI can see how we use our words. A good example is TensorFlow’s Word2Vec project, which creates a visual representation of words where the similar words are clustered together. Given the word “death,” we can see following results:

Here, the AI has detected what other words are usually used near the word “death.” These mathematical models are actually revealing our own culture and biases. Norman was an attempt to create an intentionally biased AI to reveal the dangers of how algorithms can misbehave if the data researchers feed to them is not prepared correctly. Even with the best intentions, when the “human OS” is more aligned toward white males, the AI it trains has a harder time detecting black females. AI has done exactly what it was supposed to do, but on its way, it also reveals our own shortcomings.
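The idea of words clustering near “death” can be sketched with cosine similarity between word vectors. The three-dimensional vectors below are made up for illustration; real Word2Vec embeddings are learned from large text corpora and have hundreds of dimensions.

```python
import math

def cosine(a, b):
    """Cosine similarity: near 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy vectors invented for illustration; not taken from any trained model.
vectors = {
    "death": [0.9, 0.1, 0.0],
    "grave": [0.8, 0.2, 0.1],
    "funeral": [0.7, 0.3, 0.0],
    "picnic": [0.0, 0.2, 0.9],
    "sunshine": [0.1, 0.1, 0.8],
}

def nearest(word, k=2):
    """Return the k words whose vectors are most similar to the given word."""
    query = vectors[word]
    others = [(w, cosine(query, v)) for w, v in vectors.items() if w != word]
    return sorted(others, key=lambda pair: pair[1], reverse=True)[:k]

print(nearest("death"))
```

With these toy numbers, “grave” and “funeral” come out closest to “death” simply because their vectors point in a similar direction. In a trained model, that closeness comes from how people actually use the words, which is where our own habits and biases enter.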

What AI sees is only numbers. It does not understand “death,” nor does it understand “love” any better. It has no emotions, and the closest thing it has to “memory” is the data we train it on. Compare that to human memory, where every piece has visual, aural, and other sensory information attached to it that enriches the experience, to say nothing of the emotional state we were in when that memory formed. As we learn language, this perception deepens further: we learn the meanings of words, and those words form long chains in unique sentences that expand the experience. But the AI has only one label attached to what it perceives. It still has a long way to go before it can approach even the nearest psychopath. In this sense, Norman is no more psychotic than Watson or Einstein.

David Petersson is a developer and tech writer who contributes to Hacker Noon.