It can be a bit difficult to wrap your brain around what exactly neural interface startup Ctrl-labs is doing with technology. That’s ironic, given that Ctrl-labs wants to let your brain directly use technology by translating mental intent into action. We caught up with Ctrl-labs CEO Thomas Reardon at Web Summit 2019 earlier this month to understand exactly how the brain-machine interface works.

Founded in 2015, Ctrl-labs is a New York-based startup developing a wristband that translates musculoneural signals into machine-interpretable commands. It won’t be independent for long: Facebook agreed to acquire Ctrl-labs in September 2019. The acquisition hasn’t closed yet, so Reardon has not spoken to anyone at the social media giant since signing the agreement. He was, however, eager to tell us more about the neural interface technology so we could glean why Facebook (and the tech industry at large) is interested.

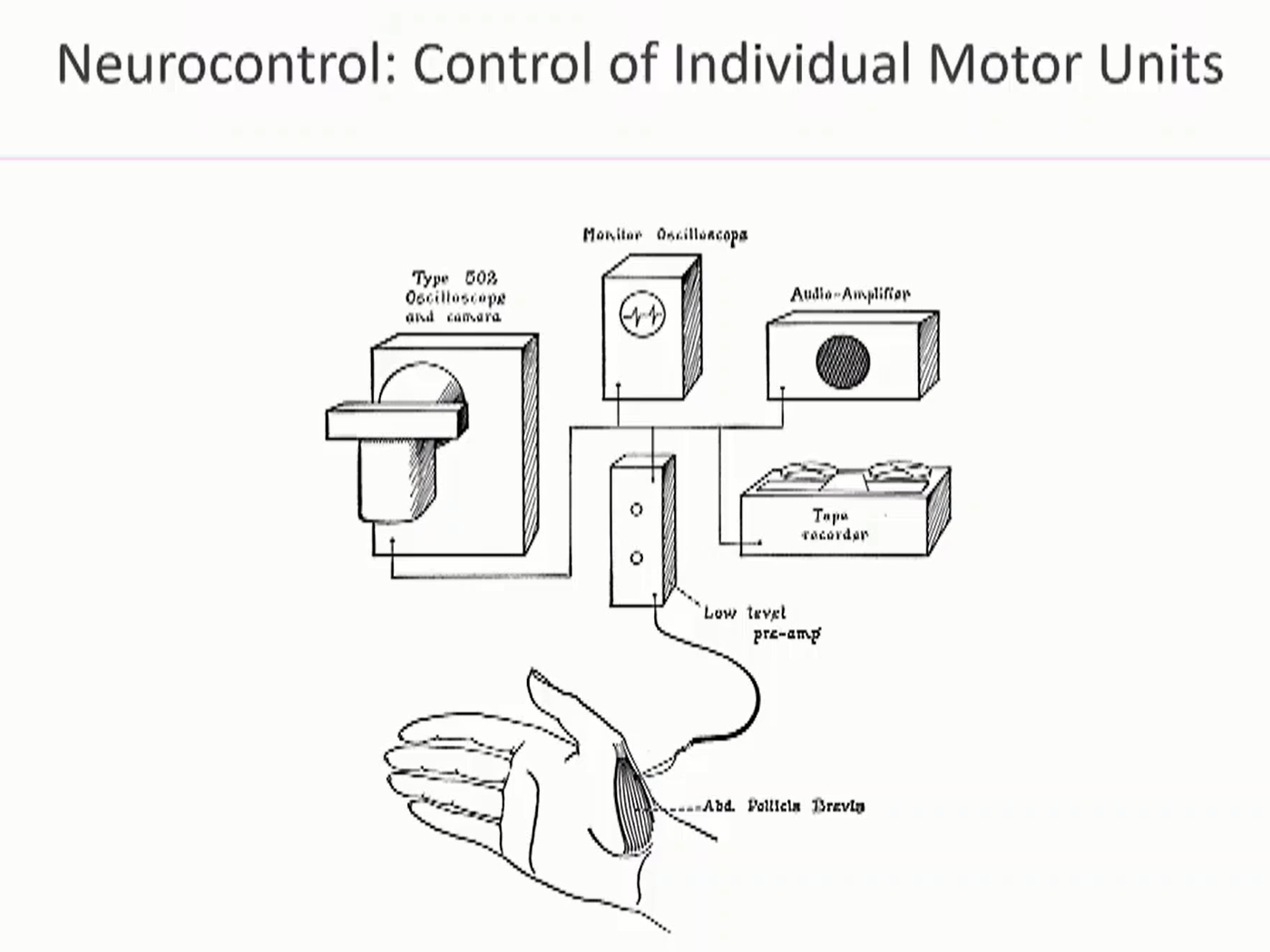

Above: Neurocontrol: Interrogating individual neurons in the spinal cord

In short, Ctrl-labs wants us to interact with technology not via a mouse, a keyboard, a touchscreen, our voice, or any other input we’ve adopted. Reardon and his team expect that in a few years we will be able to use individual neurons — not thoughts — to directly control technology.

‘Mother of all machine learning problems’

Reardon has said many times that his company is tackling the “mother of all machine learning problems.” He used that phrase a few times at the conference as well. Apple CEO Tim Cook has previously described self-driving cars as “the mother of all AI projects.” Everyone understands why self-driving cars are so complex, so we had to ask Reardon what exactly Ctrl-labs is finding so difficult.

“This idea of being able to decode neural activity in real time and translate that into control,” Reardon told VentureBeat. “So, your body does it. Each cascading series of neurons are different layers, say in the cortex, and then they send output to the spinal cord. Each set of neurons is interpreting the set before it. And in some millisecond timescale, turning that into a final action.”

https://www.youtube.com/watch?v=xl9BOyfOhFw

First you have to capture all that activity, and then you have to repurpose it. “We’re trying to do that with a machine at the other end,” he continued. “Just the decoding part alone takes a lot of very clever, bespoke algorithms to do in real time. Doing it if we could record all that data and then come back and analyze it over weeks — that’s kind of how neuroscience is done. Most of this stuff is done in what we call post facto. We do analysis of data after the fact and we try to understand ‘How does the activity of a neuron, or neurons, generate a behavior?’ That is the central goal of all neuroscience. Neural activity — final behavior, how do I connect them? What’s the code of these neurons? Trying to do it in real time is effectively not done.”

In short, there’s no system more complicated in nature than the human brain. It’s incredibly difficult to create algorithms that can figure out intent without drilling into a human skull. Decoding the activity of individual motor neurons in order to control machines is the ultimate machine learning challenge. Everything else pales in complexity.

“It’s easy to get worked up about some of the breakthroughs from DeepMind and how they can do game-playing things,” Reardon said. “The real thing is to do it in human real time.”

Electromyography

In December 2018, Ctrl-labs showed off its Ctrl-kit prototype. It comprised two parts: an enclosure roughly the size of a large watch packed with wireless radios, and a tethered component with electrodes that sat further up the arm. The wrist-worn device Reardon showed me connects over Bluetooth to a PC or smartphone for processing.

Before we can get to the part where intentions turn into actions, it’s important to grasp what the Ctrl-labs device on a human wrist is detecting. “This is called surface electromyography (EMG),” Reardon explained. “This is differential sensing electrodes. Your motor neuron sends a little spike called an action potential. It travels down an axon that perforates into the muscle. It spans out and it touches a bunch of fibers in the muscle — hundreds to thousands. When it sparks, each of those fibers in the muscle has a big spark in response. The spark of the motor neuron is incredibly small — invisible. You can’t electrically hear it, it’s so small. Tiny little nanoamps to picoamps of current. Your muscle fibers have massive amounts of electrical activity. The other fact is that there’s this massive electric field that each of the fibers of your muscle are generating. This is picking up that field.”

To translate mental intent into action, the EMG device is measuring changes in electrical potential caused by impulses traveling from your brain to your hand muscles. “The cool thing, because we’re neuroscientists, that we figured out is ‘How do I take the electroactivity of the muscle and figure out what was the electroactivity of the neuron that created that activity?’ And that’s what we’ve done. We’re able to recreate the activity of these spinal motor neurons out of the electrical response of the muscle.”
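To make the sensing step concrete, here is a minimal sketch of what "differential sensing" plus simple activity detection could look like on synthetic data. It is not Ctrl-labs' method: the function names, the five-sample window, the 0.5 threshold, and the made-up signals are all assumptions for illustration, and the sketch only detects that a muscle burst occurred. It does not attempt the harder step Reardon describes, recovering the activity of individual motor neurons.

```python
import math

def differential(electrode_a, electrode_b):
    """Subtract two electrode channels so noise common to both cancels out."""
    return [a - b for a, b in zip(electrode_a, electrode_b)]

def envelope(signal, window=5):
    """Rectify the signal, then smooth it with a trailing moving average."""
    rectified = [abs(x) for x in signal]
    smoothed = []
    for i in range(len(rectified)):
        chunk = rectified[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed

def detect_bursts(env, threshold):
    """Return the sample indices where the envelope rises above the threshold."""
    onsets = []
    above = False
    for i, value in enumerate(env):
        if value >= threshold and not above:
            onsets.append(i)
        above = value >= threshold
    return onsets

# Synthetic recording (hypothetical): both electrodes share the same hum,
# and only electrode A sees a short alternating burst of muscle activity.
n = 200
hum = [0.5 * math.sin(2 * math.pi * 50 * t / 1000) for t in range(n)]
burst = [0.0] * n
for t in range(100, 120):
    burst[t] = 1.0 if t % 2 == 0 else -1.0

electrode_a = [h + b for h, b in zip(hum, burst)]
electrode_b = list(hum)

diff = differential(electrode_a, electrode_b)
onsets = detect_bursts(envelope(diff), threshold=0.5)
print(onsets)
```

Subtracting the two channels removes the shared hum, so only the burst survives to be rectified, smoothed, and compared against the threshold.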

Motor babbling

Reardon says that you can learn to use a Ctrl-labs wristband in about 90 seconds to accomplish a task with your brain. So, what exactly is happening between you and the EMG device in those 90 seconds?

“We are taking advantage of that thing that you did as a baby,” said Reardon. “You did what’s called ‘motor babbling’ as a baby. You figured out ‘Hey, when I do this thing with a neuron, it causes this thing over here to happen.’ And you create a neural map to your body and a neural map to the output. You know this. A baby can’t do this. It takes quite a while for them to grab an object skillfully. It’s called ‘skill, reach, and grasp.’”

“You are wired for that,” he continued. “It is the thing you are more wired for than anything else. More than speech. More than math. More than even understanding language. The thing you do really well is learn how to move, in particular your hands and then your mouth. You can do it in a really fine, skillful way. With incredibly subtle movements with very little neural activity. A baby takes a long time to get through that. But you’re always doing that in the world. You never stop doing that. You basically build this gross motor map of that first year and a half.”

To illustrate his point, Reardon put a cup of water on the table. He then asked me to demo my body to him by taking a sip. I did.

“It’s the most difficult fucking thing you will ever do in your life,” he said.

I asked Reardon if he was essentially saying that this action is hard to teach a robot to do. After all, the exact path of my hand is different every time. “It’s so difficult to teach a robot,” he said. “The number of degrees of freedom that are involved, both in your output capacity — 27 degrees of freedom in the arm — but in this thing itself. You’ve taken a sip of something, 10,000, 100,000, a million times in your life. But every time you do it, it’s different. Do it again. It’s now a new task. Different weight. Different texture on the glass, etc. You’re doing this in real time.”

Tapping into motor feedback

Ctrl-labs is trying to tap into how we learn to use our own bodies. It’s re-purposing that ability to let us control technology.

“You have this phenomenal ability to do motor adaptation,” Reardon explained. “You have the general map of the task. ‘I’ve got to grab this glass and move it to my mouth and not jam it through my face.’ But you did it, and there was no cognitive load. You aren’t like ‘I got to stop and concentrate on this.’ You just did it. You have so much capacity in that motor feedback loop that is unexploited by the way we use machines today. That’s at heart what we’re trying to kind of tunnel into and break open for people is to tap into that ability to learn and deploy motor skills in seconds. You have a general idea how to go do it and you adopt it really fast. And you do it incredibly well.”

At the end of the day, your brain does one thing and one thing only, according to Reardon: It turns muscles on and off. Humans are incredibly good at using their brain to leverage muscles in a dynamic way, adapting to specific circumstances every single time. This requires an immense amount of computation, without creating a feeling of cognitive load. That’s why this is the mother of all machine learning problems. Ctrl-labs is trying to capture how humans learn.

Jumping dinosaur

Google Chrome has a built-in Offline Dino Game that you can play when you don’t have an internet connection (you can also access the game by typing chrome://dino/ into your address bar). Ctrl-labs has used this game to show off its technology before, including at Web Summit, so we kept coming back to it as an example in our interview.

https://www.youtube.com/watch?v=lcMRMpAVlsc

Say you have a Ctrl-labs wristband and you’re trying to learn to get the dinosaur to jump using your mind. At first, you do so by pushing a button. The wristband detects the electrical activity of your muscles. You push the button and the dinosaur jumps. You keep pushing and it keeps jumping. Eventually, you wean yourself off the button-pressing and just use your mind. The dinosaur still jumps when you intend it to.

There’s some special sauce on the software side that makes this work. You can’t just go around pushing buttons and then not pushing buttons without a little bit of help. “At first it’s because we make it jump based on the key,” Reardon explained. “And then we slowly dial it back so that the key isn’t what makes it jump. It’s the electrical activity that makes it jump.”
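One way to picture the "dial it back" idea is as a weight that gradually shifts control from the key press to the detected electrical activity. The sketch below is only an illustration under that assumption. Reardon did not describe the actual mechanism, and the names `should_jump` and `key_weight_schedule`, the linear schedule, and the 0.5 cutoff are all hypothetical.

```python
def key_weight_schedule(trial, total_trials):
    """Linearly lower the key's influence from 1.0 to 0.0 over the training trials."""
    if total_trials <= 1:
        return 0.0
    return max(0.0, 1.0 - trial / (total_trials - 1))

def should_jump(key_pressed, emg_active, key_weight):
    """Blend the two triggers.

    key_weight = 1.0 means only the key counts; 0.0 means only the
    detected muscle activity (EMG) counts.
    """
    key_score = 1.0 if key_pressed else 0.0
    emg_score = 1.0 if emg_active else 0.0
    blended = key_weight * key_score + (1.0 - key_weight) * emg_score
    return blended >= 0.5

total_trials = 10
for trial in range(total_trials):
    weight = key_weight_schedule(trial, total_trials)
    key_only = should_jump(key_pressed=True, emg_active=False, key_weight=weight)
    emg_only = should_jump(key_pressed=False, emg_active=True, key_weight=weight)
    print(f"trial {trial}: key weight {weight:.2f}, "
          f"key alone jumps: {key_only}, EMG alone jumps: {emg_only}")
```

Early in the schedule the key alone triggers the jump; by the end, the key alone does nothing and the electrical activity alone is enough.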

“Everything you’ve ever done with a machine in the world in the past you did by moving,” Reardon said. “Literally everything required a movement. And what we’re trying to get you is to kind of unlearn the movement, but preserve the intention.”

The movement you perform doesn’t have to be defined. Ctrl-labs doesn’t have to declare that it’s, say, the middle finger on your right hand tapping a button. The actual movement can be anything. It’s completely arbitrary. It can be your thumb hitting the button or your ear wiggling. But that’s not the crazy part.

Force modulation

“Here’s the cool thing. I don’t have to tell you to stop. What you start to realize is the dinosaur is going to jump whether you push the button or not,” said Reardon. “When I said ‘this is what your brain is really good at’ — your brain is trying to solve this problem of ‘what’s the minimal action I can create to cause a reaction?’ And that’s what your brain learns. That adaptation task you’re doing is force modulation. You’re trying to do the least amount of neural activity to generate muscle contractions to grab the glass and bring it back. And you’re always trying to absolutely minimize. You can’t not do this. You can’t stop doing it; it’s impossible.”

As your brain sees that there’s no connection between the movement that you’re doing and the result, it stops doing the full movement. Once you’ve got the dinosaur jumping with your mind and someone asks you what you’re doing, you can’t quite say. It started with you moving something, but now you’re doing it without moving. Ctrl-labs is hacking into our innate ability of exerting minimal effort to achieve an action.

You could soon go through mini training sessions to teach yourself to control individual software actions with your mind. You put on a wearable and you’re asked to tap the screen to do something in an application or a game. About 90 seconds later, you’re doing it without tapping the screen. Every human can do this, Reardon says.

“It’s one of the weirdest little tricks,” he said. “It works so reliably. And you can just keep building things up. We try to show it as this very simple thing, but you keep building these up into hundreds of keys. It’s a pretty weird experience when you have it the first time, yourself. It’s fun.”

Typing

Speaking of keys, since we’re talking about an ultimate input device, typing is an obvious place to start. It’s one thing to make a dinosaur jump with your mind; it’s quite another to type with your mind. We’ve seen typing demos from Ctrl-labs before, but the one shown off this month was fundamentally different, according to Reardon. Instead of typing as you know it today, whether that’s hitting physical keys or virtual keys with autocorrect, Ctrl-labs wants to let you form words directly.

“This one we had the ability to actually control the language model at the same time. We call it ‘word forming.’ So you’re not typing. You’re kind of forming words in real time and they kind of spill out of your hand. It’s giving you sort of choices between words and you quickly learn how to get to the word you want to form. As opposed to typing one key at a time [to form words].”

And what’s the main attraction to writing this way? Speed. It’s all about speed.

“Ultimately we want you to be able to make words at the rate of speech. With some presumption that the rate of speech is probably approximately the cognitive limit of language production, of creating language, that we’re very well adapted to, but obviously typing is not. If you were speaking really, really fast — could you type at 250 words per minute? Nobody can do that today. We want to be able to have everybody do that. Really there would be no difference between how you produce oral speech and how you produce this controlled text flow.”

To be clear, Ctrl-labs hasn’t achieved this yet. Their demo only showed about 40 words per minute typing speed. Anyone can learn to type faster on a keyboard. Speed aside though, there are clearly many applications for typing without a keyboard, touchscreen, or microphone.

AR, VR, and emerging platforms

“These obviously have huge value in what I’ll call the emerging platforms,” said Reardon. “Whether it’s the computer you wear on your wrist or the computer you wear on your face, they both need a whole new textual interface. Among other things, we are betting pretty massively — I’d say we’ve pretty much bet the company on the idea that speech wasn’t going to be the way you controlled machines. It’s going to be part of the solution, but it’s not the be-all-end-all solution. It’s a solution you’ll use somewhat rarely and episodically. This is probably best shown by how people use Alexa today. It’s really useful, but a very limited context in which it’s useful. We control machines with text, and we actually write and we author with text today, and speech is a bad way to author.”

AR and VR are obvious targets for potential applications because they don’t have good control experiences today. It’s thus no surprise that Facebook plans to fold Ctrl-labs into its Reality Labs division. Ctrl-labs is estimated to be Facebook’s biggest acquisition since acquiring Oculus for $2 billion in March 2014. Robotics is another area that Reardon mentioned, though it’s not clear if that will still be pursued under Facebook.

Typing with your mind is just one of many examples Ctrl-labs has shown off that intrigued Facebook enough to buy the company. But again, it’s how the company achieved the typing, not the typing itself, that is impressive.

A single neuron

When Ctrl-labs works with neuroscientists, the first question is always whether the signals are coming from individual neurons. Is Ctrl-labs masking its tech with other tricks? The dinosaur demo is meant to show in real time that the company can decode a single neuron. The person is not moving at all, and yet the dino is jumping.

That raises the question: What was that neuron used for before?

In response to this question, Reardon shared some frustration with the discussion around brain-machine interfaces today. That includes “the crazy invasive stuff that Elon Musk and Neuralink are doing that can have profound value for people who have like no motor abilities.” Sure, there are people who have lost certain motor abilities, and there is an opportunity to give them that function again. But then the discussion quickly devolves into using neurons to control things outside of your body and controlling AIs directly.

Intentions, not thoughts

“The problem is every neuron in your brain is already doing something,” Reardon explained. “It’s not like there’s a bench of neurons all lined up sitting around waiting to be used and the whole point of brain machine interfaces is to go listen to those. Instead, you’ve got these neurons that are already doing stuff. So are you trying to decode a thought and turn that into some action? I don’t know a neuroscientist alive who can tell me what a thought is. Which is why we had this unique approach about really trying to talk about intentions rather than thoughts. We’re really focused on that. We’re trying to focus on allowing you to control the things you want to control. You must intend the thing you’re doing.”

Again, in the dinosaur demo, the user is controlling the neuron in a very intentful way. “He doesn’t think ‘Hey, I’m considering jumping here.’ He says ‘Jump. Jump. Jump.’ He wants to jump. That causes a motor neuron in his spine to actually fire.”

That motor neuron is the one that’s controlling probably a collection of 1,000 to 10,000 fibers in the ulnar muscle. At least, that’s what it is for the subject in the video. Again, you could use a different muscle.

Breakthroughs

“The hard part isn’t just listening to a neuron and allowing you to use that neuron to control something in a new way,” said Reardon. “It’s for you to still be able to use that neuron. The really hard work that we’ve done is to distinguish between ‘Are you using that neuron to move, to control your body? Or are you using it to control the machine?’ We have some, I think, pretty breathtaking breakthroughs to distinguish between different kinds of neural activity from the same neuron.”

How do you know it’s just one neuron?

“We’ve gone through exhaustive proofs,” said Reardon. “I can’t describe here. But stuff that will be published as we do our academic publishing of this work. But we’ve done the proof using a bit more traditional techniques to show that this is a single neuron being activated. In our world, this is called a motor unit, which is a set of fibers that correspond to a singular neuron. It’s actually a difficult thing to prove, but we feel like we’ve proved it with some scientific rigor.”

When Ctrl-labs publishes its proof, it will go through standard peer review. Reardon clarified that part of this single-neuron breakthrough has been published already, but the latest results have not.

When?

We were being ushered out of the room as I posed my last question: When will neural interfaces be commercially available? “That’s for the future,” said Reardon.

Roughly, are we talking like 10 years from now? “No, it’s much sooner than that.”

Five years from now? “I think it’s sooner than five.”

So we’ll buy a device like this in less than five years from now? “Or it will be part of another device, but yeah.”

So maybe my next phone? “As we embody it, it’s something that you’re wearing.”