Watch all the Transform 2020 sessions on-demand here.

Fundamental problems in robotics involve both discrete variables, like the choice of control modes or gear switching, and continuous variables, like velocity setpoints and control gains. They’re often difficult to tackle, because it’s not always obvious which algorithms or control policies might best fit. That’s why researchers at Google parent company Alphabet’s DeepMind recently proposed a technique — continuous-discrete hybrid learning — that optimizes for discrete and continuous actions simultaneously, treating hybrid problems in their native form.

A paper published on the preprint server Arxiv.org details their work, which was accepted to the 3rd Conference on Robot Learning, held in Osaka, Japan in October 2019. “Many state-of-the-art … approaches have been optimized to work well with either discrete or continuous action spaces but can rarely handle both … or perform better in one parameterization than another,” the coauthors wrote. “Being able to handle both discrete and continuous actions robustly with the same algorithm allows us to choose the most natural solution strategy for any given problem rather than letting algorithmic convenience dictate this choice.”

The team’s model-free algorithm — which leverages reinforcement learning, or a training technique that rewards autonomous agents for accomplishing goals — solves control problems both with continuous and discrete action spaces and hybrid optimal control problems with controlled and autonomous switching. Furthermore, it allows for novel solutions to existing robotics problems by augmenting the action space (which defines the range of possible states and actions an agent might perceive and take, respectively) with “meta actions” or other such schemes, enabling strategies that can address challenges like mechanical wear and tear during AI training.

June 5th: The AI Audit in NYC

Join us next week in NYC to engage with top executive leaders, delving into strategies for auditing AI models to ensure fairness, optimal performance, and ethical compliance across diverse organizations. Secure your attendance for this exclusive invite-only event.

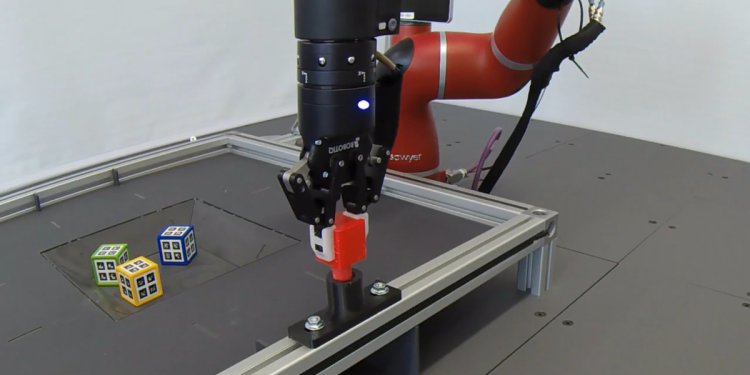

The researchers validated their approach on a range of simulated and real-world benchmarks, including a Rethink Robotics Sawyer robot arm. The say that, given the task of reaching, grasping, and lifting a cube where the reward was the sum of the three sub-tasks, their algorithm outperformed existing approaches, which were unable to solve the task.

That’s because reaching the cube required the agent to open the arm’s gripper, but grasping the block required closing the gripper. “Initially, the [baseline] policy will have most of its probability mass concentrated on small action values and will thus struggle to move the gripper’s fingers enough to see any grasp reward, explaining the plateau in the learning curve,” wrote the coauthors. “[Our method] on the other hand always operates the gripper at full velocity and hence exploration is improved, allowing the robot to solve the task completely.”

In a separate experiment, the team set their algorithm loose on a Parameterized Action Space Markov Decision Processes (PAMDP), or a hierarchical problem where agents first select a discrete action and subsequently a continuous set of parameters for that action. In this case, the agent was tasked with manipulating the robot arm such that it inserted a peg into a hole, where the reward was computed based on the hole position and kinematics.

They say that their approach achieved a larger reward than both fine and coarse approaches, and they assert it could serve as the foundation for “many more” applications of hybrid reinforcement learning in the future. “For an expert designer, selecting an appropriate mode beforehand can be difficult,” they wrote. “[Our] approach is beneficial since it only requires a single experiment, whereas [alternatives] … need to be verified by an ablation.”