Computer vision algorithms aren’t perfect. Just this month, researchers demonstrated that a popular object detection API could be fooled into seeing cats as “crazy quilts” and “cellophane.” Unfortunately, that’s not the worst of it: These algorithms can also be forced to count squares in images, classify numbers, and perform tasks other than the ones for which they were intended.

In a paper published on preprint server Arxiv.org titled “Adversarial Reprogramming of Neural Networks,” researchers at Google Brain, Google’s AI research division, describe an adversarial method that in effect reprograms machine learning systems. The novel form of transfer learning doesn’t even require an attacker to specify the output.

“Our results [demonstrate] for the first time the possibility of … adversarial attacks that aim to reprogram neural networks …” the researchers wrote. “These results demonstrate both surprising flexibility and surprising vulnerability in deep neural networks.”

Here’s how it works: A malicious actor gains access to the parameters of a neural network that’s performing a task and then crafts perturbations, or adversarial data, that are applied as transformations to input images. As the adversarial inputs are fed into the network, they repurpose its learned features for a new task.
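The mechanism described above can be illustrated with a minimal sketch. It assumes one common way of building such an input: a small task image is placed at the center of a larger frame, and the surrounding border is filled with a learned perturbation squashed into the range [-1, 1] by `tanh`. The function name `embed_in_frame`, the grayscale nested-list representation, and the tiny sizes are hypothetical choices for illustration, not details taken from the article. Training the border weights against the target network is omitted.

```python
import math

def embed_in_frame(small, frame_size, weights):
    """Build an adversarial input (illustrative assumption, not the
    researchers' code): the k x k task image `small` sits in the center
    of a frame_size x frame_size image, and every border pixel is
    tanh(weight), so it stays within [-1, 1]."""
    k = len(small)
    start = (frame_size - k) // 2  # top-left corner of the embedded image
    out = []
    for r in range(frame_size):
        row = []
        for c in range(frame_size):
            inside = start <= r < start + k and start <= c < start + k
            if inside:
                # Center region: the task image is copied unchanged.
                row.append(small[r - start][c - start])
            else:
                # Border region: the learned "adversarial program".
                row.append(math.tanh(weights[r][c]))
        out.append(row)
    return out

# Usage: a 2x2 task image inside a 4x4 frame with untrained (zero) weights.
task_image = [[0.5, -0.5], [0.25, 0.0]]
border_weights = [[0.0] * 4 for _ in range(4)]
adversarial_input = embed_in_frame(task_image, 4, border_weights)
```

In an actual attack the border weights would be optimized with gradient descent so that the unmodified target network produces the attacker's desired outputs; the same border is then reused for every input.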

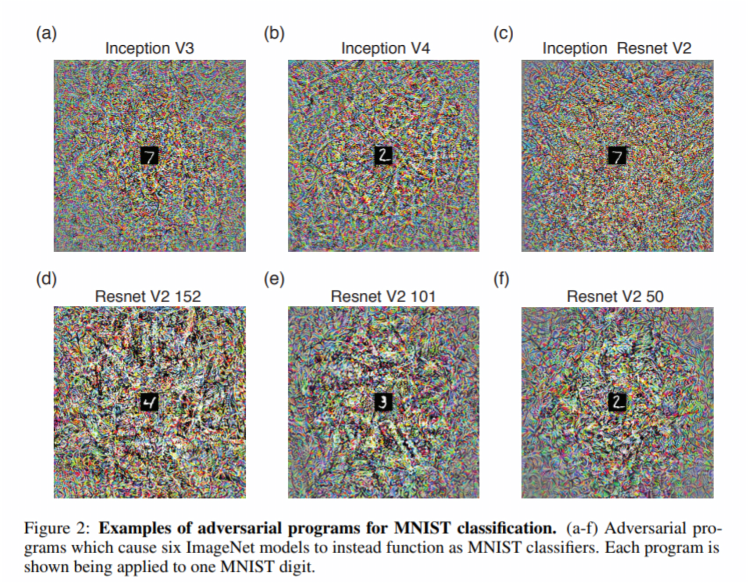

The scientists tested the method across six models. By embedding small images of white squares with black frames (between 1 and 10 squares per image) into adversarial inputs, they managed to get all six algorithms to count the number of squares in an image rather than identify objects like “white shark” and “ostrich.” In a second experiment, they forced the models to classify handwritten digits from the MNIST dataset. And in a third and final test, they had the models classifying images from CIFAR-10, an object recognition dataset, instead of the ImageNet corpus on which they were originally trained.
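One detail the description above leaves implicit is how an object classifier's output can be read as a count. A plausible scheme, assumed here for illustration, is to reinterpret a fixed handful of the original class indices as the new task's labels: index 0 means "1 square," index 1 means "2 squares," and so on. The function name `count_from_scores` and the choice of the first ten indices are hypothetical.

```python
def count_from_scores(scores, num_task_labels=10):
    """Read a square count off an unmodified classifier's output.

    `scores` is the model's per-class score list (for example, 1,000
    ImageNet classes). Only the first `num_task_labels` entries are
    treated as task labels (an assumed mapping); the rest are ignored.
    """
    task_scores = scores[:num_task_labels]
    best = max(range(len(task_scores)), key=lambda i: task_scores[i])
    return best + 1  # index 0 corresponds to a count of 1

# Usage: class index 2 wins among the first ten, so the count is 3,
# even though an unrelated class (index 500) has a higher raw score.
scores = [0.0] * 1000
scores[2] = 5.0
scores[500] = 9.0
predicted_count = count_from_scores(scores)
```

Under this mapping the attacker never retrains or edits the model; only the interpretation of its existing outputs changes.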

Bad actors could use the attack to steal computing resources by, for example, reprogramming a computer vision classifier in a cloud-hosted photo service to solve image captchas or mine cryptocurrency. And although the paper’s authors didn’t test the method on a recurrent neural network, a type of network that’s commonly used in speech recognition, they hypothesize that a successful attack could induce such algorithms to perform “a very large array of tasks.”

“Adversarial programs could also be used as a novel way to achieve more traditional computer hacks,” the researchers wrote. “For instance, as phones increasingly act as AI-driven digital assistants, the plausibility of reprogramming someone’s phone by exposing it to an adversarial image or audio file increases. As these digital assistants have access to a user’s email, calendar, social media accounts, and credit cards, the consequences of this type of attack also grow larger.”

It’s not all bad news, luckily. The researchers noted that untrained neural networks with random weights appear to be less susceptible to the attack than trained ones, and that adversarial reprogramming could enable machine learning systems that are easier to repurpose, more flexible, and more efficient.

Even so, they wrote, “Future investigation should address the properties and limitations of adversarial programming and possible ways to defend against it.”