Apple’s HomePod hasn’t won much praise for the capabilities of its integrated digital assistant Siri, but it does have one undeniably impressive feature: the ability to accurately hear a user’s commands from across the room, despite interference from loud music, conversations, or television audio. As the company’s Machine Learning Journal explains today, HomePod is leveraging AI to constantly monitor an array of six microphones, processing their differential inputs using knowledge gained from deep learning models.

One of the biggest challenges in discerning a user’s commands over ambient noise is overcoming the HomePod itself: Apple’s speaker can play at very high volumes, and its microphones sit immediately next to the noise sources. Consequently, the company explains, there’s no way to completely remove the HomePod’s own audio from the microphone signals; only part of it can be cancelled.
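To show why cancellation tends to be partial, here is a minimal sketch of a textbook linear echo canceller built on an NLMS adaptive filter. It is not Apple’s implementation. It assumes the device knows exactly what it is playing, and it models the speaker-to-microphone path as a short linear filter. The echo path values and the cubic “distortion” term are made-up stand-ins, and the names `nlms_echo_cancel` and `energy` are hypothetical. The filter removes a purely linear echo almost entirely but leaves a residue once the echo has a nonlinear component. That is one common reason a separate residual echo suppressor is needed.

```python
import random

def nlms_echo_cancel(reference, mic, taps=16, mu=0.5, eps=1e-6):
    """Linear acoustic echo canceller using the NLMS adaptive filter.

    reference: samples the device sent to its own loudspeaker
    mic: samples captured by the microphone (echo plus anything else)
    Returns the mic signal with the filter's estimate of the echo subtracted.
    """
    w = [0.0] * taps            # adaptive FIR model of the speaker-to-mic echo path
    history = [0.0] * taps      # most recent reference samples, newest first
    out = []
    for x, d in zip(reference, mic):
        history = [x] + history[:-1]
        y = sum(wi * hi for wi, hi in zip(w, history))   # predicted echo sample
        e = d - y                                         # what remains after cancelling
        norm = eps + sum(h * h for h in history)
        w = [wi + mu * e * hi / norm for wi, hi in zip(w, history)]
        out.append(e)
    return out

def energy(x):
    return sum(v * v for v in x)

# Toy scenario (made-up numbers): a short linear echo path, plus a cubic term
# standing in for loudspeaker distortion at high volume.
rng = random.Random(0)
ref = [rng.uniform(-1, 1) for _ in range(4000)]
echo_path = [0.6, 0.3, -0.1]
linear_echo = [sum(h * ref[n - k] for k, h in enumerate(echo_path) if n >= k)
               for n in range(len(ref))]
distorted_echo = [e + 0.1 * x ** 3 for e, x in zip(linear_echo, ref)]

# Fraction of echo energy left over once the filter has adapted (last 1000 samples).
lin_ratio = energy(nlms_echo_cancel(ref, linear_echo)[-1000:]) / energy(linear_echo[-1000:])
dist_ratio = energy(nlms_echo_cancel(ref, distorted_echo)[-1000:]) / energy(distorted_echo[-1000:])
print(f"linear echo left over:    {lin_ratio:.1e}")
print(f"distorted echo left over: {dist_ratio:.1e}")
```

The linear case drops to numerical noise, while the distorted case stays measurably above zero because no linear filter can reproduce the cubic term.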

To deal with the remainder, Apple used recordings of real echoes to train a deep neural network on HomePod-specific speaker and vibration echoes, creating a residual echo suppression system tailored to cancelling out HomePod’s own sounds. It also applies a reverberation-removal model matched to the room’s acoustic characteristics, which the speaker measures continuously.
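Residual echo suppressors commonly work per frequency bin, keeping the share of each bin attributed to the talker and attenuating the rest. The sketch below covers only that suppression step and swaps Apple’s trained network for a simple Wiener-style gain rule. It assumes an estimate of the residual echo power per bin is already available; in the system described above, a neural network would supply it. The function names, the example power values, and the `floor` parameter are illustrative assumptions, not Apple’s design.

```python
def residual_echo_gains(mic_power, echo_power_estimate, floor=0.1):
    """Per-frequency-bin Wiener-style gains that attenuate leftover echo.

    mic_power: power in each frequency bin after linear echo cancellation
    echo_power_estimate: estimated residual-echo power in each bin (assumed
        given here; a trained model would predict it in a real system)
    floor: minimum gain, which limits artifacts from over-suppression
    """
    gains = []
    for p, r in zip(mic_power, echo_power_estimate):
        wanted = max(p - r, 0.0)             # power attributed to the talker
        g = wanted / p if p > 0 else 0.0     # share of the bin to keep
        gains.append(max(g, floor))
    return gains

def apply_gains(spectrum, gains):
    """Scale each (possibly complex) frequency bin by its gain, i.e. apply a mask."""
    return [s * g for s, g in zip(spectrum, gains)]

# Four made-up bins: mostly echo, partly echo, echo-free, and all echo.
gains = residual_echo_gains([1.0, 4.0, 2.0, 0.5], [0.9, 1.0, 0.0, 0.5])
cleaned = apply_gains([1 + 0j, 2 + 0j, 1.4 + 0j, 0.7 + 0j], gains)
print(gains)  # → [0.1, 0.75, 1.0, 0.1]
```

The gain floor is a design choice: driving bins all the way to zero tends to produce audible artifacts, so suppressors usually cap how much they attenuate.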

Another interesting trick uses beamforming to determine where the speaking user is located, focus the microphones on that person, and apply sonic masking to filter out noise from other sources. Apple built a system that estimates local speech and noise statistics solely from the microphones’ current and past signals, emphasizing the speech while trying to cancel out interference. It then trained a neural network on a variety of common noises, ranging from diffuse to directional and from speech to non-speech noise, so that the filtering would work against a wide range of interference sources.
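The core idea of steering a microphone array toward a talker can be illustrated with a textbook delay-and-sum beamformer. This sketch is a stand-in and not Apple’s mask-based approach. It assumes a uniform linear array, whole-sample delays, and a toy propagation model. It also leaves out the masking and learned noise statistics described above. It scans candidate steering directions, picks the one whose beam output carries the most energy, and keeps that beam as the talker-focused signal. All function names and scene parameters are hypothetical.

```python
import random

def delay_and_sum(channels, step):
    """Steer a uniform linear array: advance mic m by m*step samples, then average.

    Sound from the steered direction adds up coherently; sound from other
    directions is misaligned and partly averages out.
    """
    n_mics, n = len(channels), len(channels[0])
    out = []
    for t in range(n):
        acc = 0.0
        for m, ch in enumerate(channels):
            i = t + m * step
            if 0 <= i < n:      # samples beyond the recording count as silence
                acc += ch[i]
        out.append(acc / n_mics)
    return out

def power(x):
    return sum(v * v for v in x) / len(x)

def locate_talker(channels, candidate_steps):
    """Pick the steering step whose beam output carries the most energy."""
    return max(candidate_steps, key=lambda k: power(delay_and_sum(channels, k)))

def arrive(signal, step, m):
    """Toy propagation: mic m hears the wave m*step samples after mic 0 (negative = before)."""
    n = len(signal)
    return [signal[t - m * step] if 0 <= t - m * step < n else 0.0 for t in range(n)]

# Made-up scene: a louder talker arriving with step 2 and a quieter
# interferer arriving with step -1, captured by four microphones.
rng = random.Random(1)
talker = [rng.uniform(-1, 1) for _ in range(2000)]
interferer = [rng.uniform(-0.5, 0.5) for _ in range(2000)]
channels = [[a + b for a, b in zip(arrive(talker, 2, m), arrive(interferer, -1, m))]
            for m in range(4)]

best = locate_talker(channels, range(-3, 4))
beam = delay_and_sum(channels, best)   # talker-focused signal
```

Picking the loudest beam only works here because the talker is louder than the interferer. That limitation is one reason real systems add cues such as the trigger phrase and learned speech statistics.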

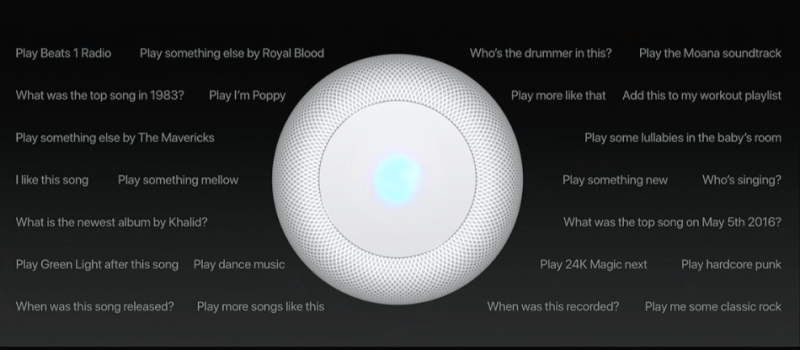

Above: Apple HomePod can answer questions about music, but it couldn’t answer or make phone calls until a post-release update.

HomePod’s other impressive capability is determining which of several people speaking is the correct source of commands, so it can steer the beamforming microphones toward that person and isolate noise. One trick is using the required “Hey Siri” trigger phrase to determine who is issuing commands and from where, but Apple also developed techniques to separate competing talkers into individual audio streams, then use deep learning to guess which talker is issuing commands and send only the stream focused on that talker for processing.
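The final selection step can be sketched as follows: score each separated stream, forward the best one, and forward nothing if no stream scores high enough. Apple uses deep learning for the scoring. This toy swaps in normalized cross-correlation against a known waveform, which is not how real trigger-phrase detectors work and only illustrates the selection logic. The function names, the sine “trigger” template, and the `threshold` value are all hypothetical.

```python
import math
import random

def xcorr_peak(stream, template):
    """Best normalized cross-correlation between the template and any window of the stream."""
    m = len(template)
    t_norm = math.sqrt(sum(v * v for v in template))
    best = 0.0
    for start in range(len(stream) - m + 1):
        window = stream[start:start + m]
        w_norm = math.sqrt(sum(v * v for v in window))
        if w_norm == 0.0 or t_norm == 0.0:
            continue
        score = sum(a * b for a, b in zip(window, template)) / (w_norm * t_norm)
        best = max(best, score)
    return best

def pick_command_stream(streams, template, threshold=0.8):
    """Return the index of the separated stream that best matches the trigger, or None.

    threshold is a hypothetical cut-off: if no stream scores at least this much,
    nothing is forwarded for processing.
    """
    scores = [xcorr_peak(s, template) for s in streams]
    idx = max(range(len(streams)), key=lambda i: scores[i])
    return idx if scores[idx] >= threshold else None

# Made-up separated streams: stream 0 is unrelated noise, stream 1 contains
# the "trigger" waveform starting at sample 100 under light noise.
rng = random.Random(2)
template = [math.sin(0.3 * i) for i in range(50)]
stream0 = [rng.uniform(-1, 1) for _ in range(300)]
stream1 = [rng.uniform(-0.1, 0.1) for _ in range(300)]
for i, v in enumerate(template):
    stream1[100 + i] += v

chosen = pick_command_stream([stream0, stream1], template)
```

Only the chosen stream would be sent on for speech recognition, which matches the article’s point that only the stream focused on the commanding talker is processed.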

The Machine Learning Journal’s entry does a great job of spotlighting how AI-assisted voice processing technologies are necessary but not sufficient to guarantee a great experience with far-field digital assistants. While all of the techniques above do indeed yield quick, reliable, and accurate Siri triggering, HomePod’s limited ability to actually respond fully to requests was a frequent target of complaints in reviews. If there’s any good news, it’s that the issues appear to be in Siri’s cloud-based brain rather than in the HomePod’s hardware or locally run services, so server-side patches could dramatically improve the unit’s functionality without requiring users to buy new hardware.