As messaging platforms continue to rack up massive numbers of monthly active users, conversational interfaces are in some cases becoming the primary interface between brands and consumers. The shift from graphics-led social networks to conversation-led interfaces introduces new interaction models that require brands to rethink how they connect with consumers.

There have been many best-practice guides on how to design the right conversational experiences, but most, if not all, have forgotten a key element: sound. Sound provides the experiential component that can add emotion to any experience without interfering with its functionality or utility. The key is to understand which sounds to use and where to use them.

The sound of a voice can only go so far to distinguish a specific product or service. Beyond voice, there is a broad universe of sounds, including music, environmental sounds, and short sound effects, that are capable of creating strong linkages between brand identity, emotion, and memory.

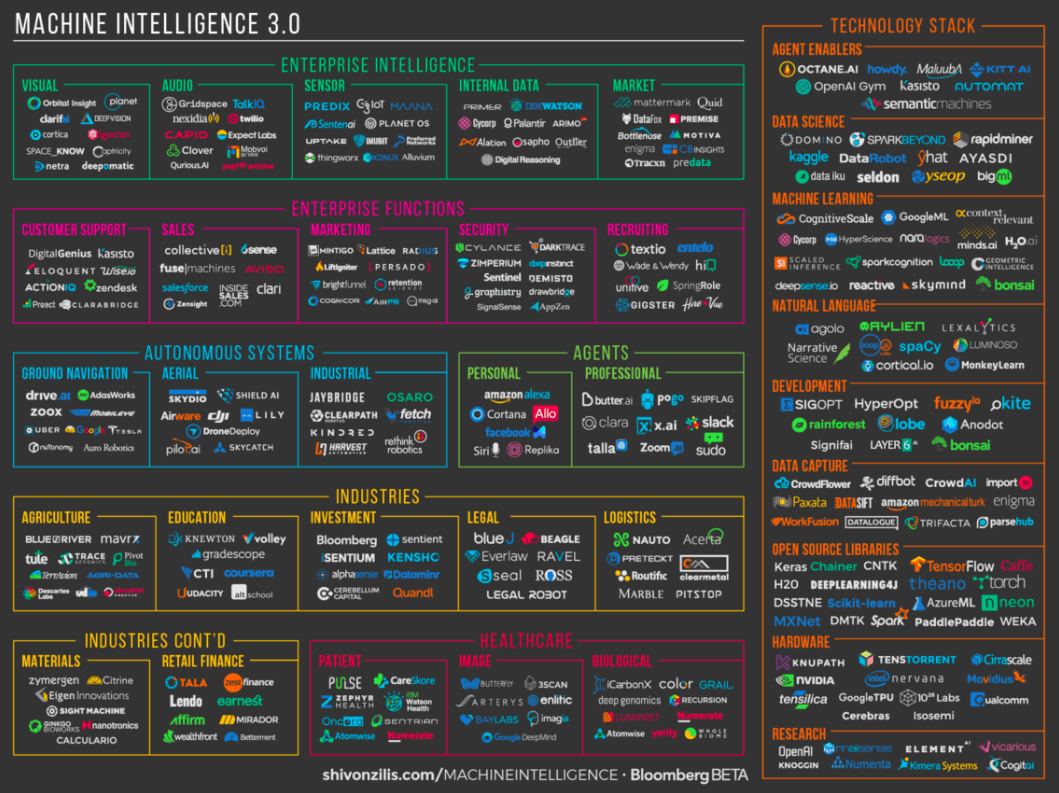

Users increasingly expect the function of an API to be reflected in the sounds they actually hear. There is a growing number of sound-effect skills available in Amazon’s Alexa ecosystem, including dog barking, air horns, and haunted house sounds. These skills engage Alexa as an advanced audio tool to enrich real-world play situations, providing a useful case study for brands on the kinds of auditory diversity that early adopters seek out and use.

Consider a smart-home API that uses weather sounds to signal a coming rainstorm, or a ride-hailing service that plays the characteristic tire whirr of a hybrid vehicle to signal that the driver is approaching. In both cases, sound forms a high-powered link that promotes user efficiency while delivering an invaluable emotional component to the AI user interface.
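As a rough illustration of the rainstorm example, a voice response can pair a short sound cue with the spoken message by using the SSML `<audio>` tag, which Alexa supports. The sketch below only builds the SSML string; the function name `build_rain_alert_ssml` and the audio URL are hypothetical, and hosting and file-format requirements for the clip are not covered here.

```python
from xml.sax.saxutils import escape, quoteattr


def build_rain_alert_ssml(message: str, audio_url: str) -> str:
    """Return SSML that plays a short sound cue, then speaks the message.

    Both arguments are illustrative assumptions: the URL would point to a
    brand-owned rain sound, and the message is the spoken alert.
    """
    # quoteattr() adds the surrounding quotes and escapes the attribute value;
    # escape() protects characters such as '&' and '<' in the spoken text.
    return f"<speak><audio src={quoteattr(audio_url)}/> {escape(message)}</speak>"


if __name__ == "__main__":
    print(build_rain_alert_ssml(
        "Rain is expected within the hour.",
        "https://example.com/sounds/rain.mp3",  # hypothetical URL
    ))
```

Placing the sound before the speech lets the cue set the context, so the spoken message can stay short.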

Human-level conversation is very difficult for an AI to achieve, though it is what users expect: AI systems are often anthropomorphized, with names like Siri and Alexa, and rely on voice-only forms of auditory communication. As interlocutors, we are very sensitive to slight misses, contextually inappropriate responses, awkward timing, and unusual phraseology. When users go off-script, these sources of friction quickly snowball, building up into negative associations that stick in the mind and are very difficult to counteract. An AI is judged by how it handles off-script interactions, and sound can greatly improve its success rate.

Yet sound is only one component of designing conversational voice experiences. As more brands leverage data, sound, and speech to build conversational experiences that accelerate business growth, it’s important to first consider the barriers to success: a poor data strategy and the lack of a speech usability framework.

Barrier #1: Data

Data is the fuel for scalable personalized customer experiences. With the right data strategy, brands can customize offers and content and acquire new high-value customers. Voice devices and experiences, however, introduce new barriers to capturing and leveraging data. Amazon, Apple, and Google won’t share user data from their personal assistants, which means brands need to think of alternative solutions to capture audience-level data. One solution is to extend the experience beyond the voice assistant to consumers’ smartphones via a link with a discount offer. Once you capture their phone numbers and match them against a network of unique identifiers, you can build audience-level profiles.

Barrier #2: Speech usability framework

The other barrier is that voice experiences lack the visual prompts and auto-fill authentication that websites and apps provide. This requires consumers to supply their information manually through voice prompts, which can quickly lead to fatigue. Until voice authentication becomes a feature, this barrier can also be solved with a promotional SMS link or by leveraging the companion app feature, where users can link their account.

Once brands have built a strong data capture engine, they need to identify the right engagement data to understand which entities and pathways perform best with specific user groups. For instance, with Alexa, brands can understand total sessions, voice events, pathways, and requests. With the right data capture and strategy, brands can begin to refine and optimize their voice experiences to drive results.
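A minimal sketch of that kind of pathway analysis follows, assuming engagement events have already been exported as simple records. The field names `session_id`, `user_group`, and `intent` are hypothetical and do not correspond to any particular platform's analytics schema.

```python
from collections import Counter, defaultdict


def summarize_pathways(events):
    """Count how often each conversation pathway occurs per user group.

    `events` is an iterable of dicts with the hypothetical keys
    'session_id', 'user_group', and 'intent', in the order they occurred.
    """
    paths = defaultdict(list)  # session_id -> ordered list of intents
    groups = {}                # session_id -> user group
    for event in events:
        paths[event["session_id"]].append(event["intent"])
        groups[event["session_id"]] = event["user_group"]

    counts = defaultdict(Counter)  # user group -> pathway -> session count
    for session_id, intents in paths.items():
        counts[groups[session_id]][" > ".join(intents)] += 1

    # Most common pathways first within each group.
    return {group: counter.most_common() for group, counter in counts.items()}
```

Ranking pathways within each group makes it easier to see which routes through the experience are worth refining first.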

What makes auditory AI different from a human personal assistant? By embracing the full capability of a speaker system connected to a powerful computation engine, developers can begin to distinguish their brands with rich media, inspire users to interact with their AI products in more meaningful ways, and counteract sources of friction.

Steve Harries is the VP of Innovation and User Experience at Epsilon, a data-driven marketing agency.

Jake Harper is the Founder and CEO of Ritmoji, which creates auditory emojis for bots like Alexa.