Instagram is launching a new feature designed to make users think twice before posting videos or photos with offensive captions.

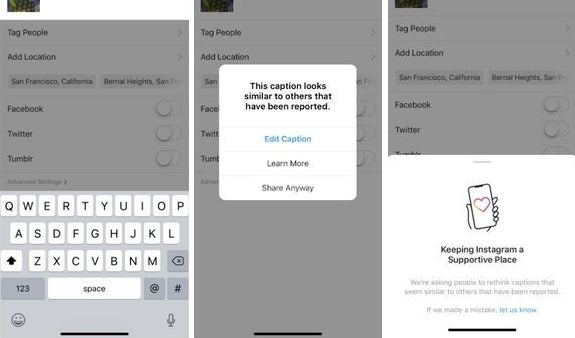

Moving forward, whenever someone tries to post a caption that Instagram detects as being potentially offensive, the user will see an alert asking them to reconsider their words. The alert system is entirely automated, with Instagram leaning on data garnered from previously bullying reports that contain similarly worded captions.

This is essentially an extension of a similar feature Instagram rolled out back in July that was aimed at the comment section — here again, Instagram uses AI to detect language, warn the user, and ultimately “encourage positive interactions.”

Above: Abuse alert for captions in Instagram

Of course, there is nothing stopping a user from going against the advice and posting an abusive comment or caption anyway, but the idea is that a gentle prompt could be all that’s needed to at least reduce abuse on Facebook’s mega-popular photo- and video-sharing platform.

“As part of our long-term commitment to lead the fight against online bullying, we’ve developed and tested AI that can recognize different forms of bullying on Instagram,” the company wrote in a blog post. “Earlier this year, we launched a feature that notifies people when their comments may be considered offensive before they’re posted. Results have been promising, and we’ve found that these types of nudges can encourage people to reconsider their words when given a chance.”

Online abuse and bullying are perennial problems for most social platforms, and studies have shown that people under the age of 25 who are subjected to cyberbullying are more than twice as likely to self-harm or attempt suicide. Monitoring the comments and captions of billions of users is a near-impossible challenge for humans alone, which is why all the major platforms have increasingly turned to automated tools. Twitter, for example, recently reported that it now proactively removes half of all abusive tweets without anyone reporting them first.

Instagram’s latest bullying alert will be landing first in “select countries” before arriving in global markets in the months that follow.