Artificial intelligence is sweeping through almost every industry, layering a new level of intelligence on the software used for things like delivering better cybersecurity. McAfee, one of the big players in the industry, is adding AI capabilities to its own suite of tools that protect users from increasingly automated attacks.

A whole wave of startups — like Israel’s Deep Instinct — have received funding in the past few years to incorporate the latest AI into security solutions for enterprises and consumers. But there isn’t yet a holy grail for protectors working to use AI to stop cyberattacks, according to McAfee chief technology officer Steve Grobman.

Grobman has spoken at length about the pros and cons of AI in cybersecurity, where a human element is still necessary to uncover the latest attacks.

One of the challenges of using AI to improve cybersecurity is that it’s a two-way street, a game of cat and mouse. If security researchers use AI to catch hackers or prevent cyberattacks, the attackers can also use AI to hide or come up with more effective automated attacks.

Grobman is particularly concerned about the ability to use improved computing power and AI to create better deepfakes, which make real people appear to say and do things they haven’t. I interviewed Grobman about his views for our AI in security special issue.

Here’s an edited transcript of our interview.

Above: Steve Grobman, CTO of McAfee, believes in cybersecurity based on human-AI teams.

VentureBeat: I did a call with Nvidia about their tracking of AI. They said they’re aware of between 12,000 and 15,000 AI startups right now. Unfortunately, they didn’t have a list of security-focused AI startups. But it seems like a crowded field. I wanted to probe a bit more into that from your point of view. What’s important, and how do we separate some of the reality from the hype that has created and funded so many AI security startups?

Steve Grobman: The barrier to entry for using sophisticated AI has come way down, meaning that almost every cybersecurity company working with data is going to consider and likely use AI in one form or another. With that said, I think that hype and buzz around AI makes it so that it’s one of the areas that companies will generally call out, especially if they’re a startup or new company, where they don’t have other elements to base their technology or reputation [on] yet. It’s a very easy thing to do in 2019 and 2020, to say, “We’re using sophisticated AI capabilities for cybersecurity defense.”

If you look at McAfee as an example, we’re using AI across our product line. We’re using it for classification on the back end. We’re using it for detection of unknown malicious activity and unknown malicious software on endpoints. We’re using a combination of what we call human-machine teaming, security operators working with AI to do investigations and understand threats. We have to be ready for AI to be used by everyone, including the adversaries.

VentureBeat: We’ve always talked about the cat-and-mouse game that happens when either side, the cyberattackers or the defenders, turns up the pressure. You have that technology race: If you use AI, they’ll use AI. As a reality check on that front, have you seen that happen, where attackers are using AI?

Grobman: We can speculate that they are. It’s a bit difficult to know definitively whether certain types of attacks have been guided with AI. We see the results of what comes out of an event, as opposed to seeing the way it was put together. For example, one of the ways an adversary can use AI is to optimize which victims they focus on. If you think about AI as being good for classification problems, having a bad actor identify the most vulnerable victims, or the victims that will yield the highest return on investment — that’s a problem that AI is well-suited for.

Part of the challenge is we don’t necessarily see how they select the victims. We just see those victims being targeted. We can surmise that because they chose wisely, they likely did some of that analysis with AI. But it’s difficult to assert that definitively.

The other area that AI is emerging [in] is … the creation of content. One thing we’ve worried about in security is AI being used to automate customized phishing emails, so you basically have spear phishing at scale. You have a customized note with a much higher probability that a victim will fall for it, and that’s crafted using AI. Again, it’s difficult to look at the phishing emails and know definitively whether they were generated by a human or with help from AI-based algorithms. We clearly see lots going on in the research space here. There’s lots of work going on in autogenerating text and audio. Clearly, deepfakes are something we see a lot of interest in from an information warfare perspective.

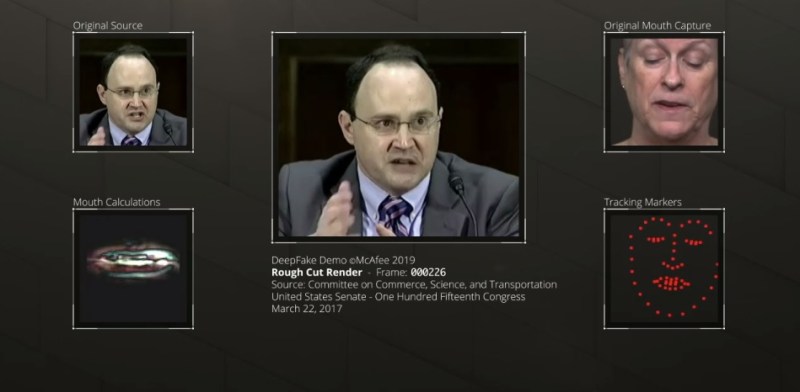

Above: Grobman did a demo of deepfakes at RSA in 2019.

VentureBeat: That’s related to things like facial recognition security, right?

Grobman: There are elements related to facial recognition. For example, we’ve done some research where we look at this question: Could you generate an image that looks like somebody that’s very different [from] what a facial recognition system was trained on, and so fool the system into thinking that it’s that actual person that the system is looking for? But I also think there’s the information warfare side of it, which is more about convincing people that something happened, that somebody said or did something that didn’t actually happen. Especially as we move closer to the 2020 election cycle, deepfakes used for information warfare are one of the things we need to be concerned about.

VentureBeat: Is that something for Facebook or Twitter to work on, or is there a reason for McAfee to pay attention to that kind of fraud?

Grobman: There are a few reasons McAfee is looking at it. Number one, we’re trying to understand the state of the art in detection technology, so that if a video does emerge, we have the ability to provide the best assessment for whether we believe it’s been tampered with, generated through a deepfake process, or has other issues. There’s potential for other types of organizations, beyond social media, to have forensic capability. For example, the news media. If someone gives you a video, you would want to be able to understand the likelihood of whether it’s authentic or manipulated or fake.

We see this all the time with fake accounts. Someone will create an account called “AP Newsx,” or they slightly modify a Twitter handle and steal images from the correct account. Most people, at a glance, think that’s the AP posting a video. The credibility of the organization is one thing that can lend credibility to a piece of content, and that’s why reputable organizations need tools and technology to help determine what they should believe as the ground truth, versus what they should be more suspicious of.

VentureBeat: It’s almost like you’re getting ready for a day when deepfakes are used in some kind of breach because we’re getting used to the idea of virtual people. I went to a Virtual Beings Summit earlier this year, and it was all about creating artificial people that seem like they’re real. That includes things like virtual influencers that put on concerts in Japan. But using these for deception purposes is where it comes back to you …

Grobman: That’s the interesting point. The same technology can be used for good and for evil objectives. If you can make a person look and sound authentic, you can think about good uses for that. Someone in late stages of Parkinson’s disease or another disorder that challenges their ability to speak — if you can provide them with technology that allows them to communicate with their loved ones, even in the late stages of a debilitating disease, that’s clearly a positive use of this technology.

The flip side is having a CEO [appear to] make statements that their product is being recalled, or that earnings are at one level when they’re actually at a very different level, and making stock prices move on that information. That opens the avenue for all kinds of financial crimes, where instead of having to steal data, criminals can manipulate markets through misinformation.

Above: The Virtual Beings Summit drew hundreds to Fort Mason in San Francisco.

VentureBeat: You’ve identified something called “model hacking,” attacks on machine learning systems themselves?

Grobman: We’re doing a lot of work on adversarial AI techniques and defenses. We’re getting ready for criminals to be using techniques that make AI models less effective. Some of the research we’re doing is to best understand how those adversarial techniques work, but then we’re also working on mitigations to make our models more robust and less susceptible to some of those capabilities. That’s a very active area of focus.

VentureBeat: What are you finding there as far as how much activity is going on?

Grobman: There’s a lot of activity, especially in academia and the research community. Similar to some of the other points, it’s difficult to know how much in the wild is currently focused on using some of these adversarial techniques. But we always see things start in the research domain and then graduate to active exploitation, which is why it’s important for McAfee and other responsible companies in the industry to get in front of this and make sure that when attacks become more prevalent, our technology is ready to defend against those.

VentureBeat: Can you figure out what some of the danger is there, if those attacks succeed? What happens to the data they’re attacking?

Grobman: It’s mostly about circumventing what AI is intended to do. If you think about a typical cybersecurity use case, a file that’s never been seen before is analyzed by an AI model in order to classify it as malicious or benign. Using an adversarial AI technique would be putting specific characteristics into the file such that it confuses the model into falsely classifying it as benign, even if it is malicious.
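The evasion Grobman describes can be illustrated with a toy example. The sketch below is not McAfee's model or any real product; the feature names, weights, and bias are hypothetical values chosen only to show how padding a file with benign-looking characteristics can push a simple linear classifier's score below its decision threshold.

```python
import math

# Hypothetical weights for a toy linear classifier (illustration only).
# Positive weights push toward "malicious", negative toward "benign".
WEIGHTS = {
    "high_entropy": 2.0,
    "packed_sections": 1.5,
    "suspicious_imports": 2.5,
    "benign_strings": -0.8,
}
BIAS = -2.0


def malicious_score(features):
    """Return a probability-like score in (0, 1) using a logistic function."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def classify(features, threshold=0.5):
    """Label a sample as malicious when its score reaches the threshold."""
    return "malicious" if malicious_score(features) >= threshold else "benign"


# A sample whose behavior-related features look malicious.
original = {
    "high_entropy": 1,
    "packed_sections": 1,
    "suspicious_imports": 1,
    "benign_strings": 0,
}

# The same sample padded with benign-looking strings. The malicious
# features are unchanged, but the added characteristics lower the score.
evasive = dict(original, benign_strings=8)

print(classify(original), round(malicious_score(original), 3))
print(classify(evasive), round(malicious_score(evasive), 3))
```

Real models are far more complex than this, but the principle is the same: the attacker changes what the model sees without changing what the file does.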

Above: A few avatars generated by Neon.

VentureBeat: I followed the creation of some of the startups in this space, like Deep Instinct in 2017. They got some awards from Nvidia, and investment as well. Some things they said made sense. I wondered how broad their approach has become across the industry. I know there were a lot of companies that were raising money on the promise that if you put AI into cybersecurity, you’d get something much better than traditional approaches. Traditional security was more reactive — you find out something bad is out there and you put it in your database and protect against it. Whereas the AI part was meant to be used to get ahead of that and predict things that you’ve never seen before.

Grobman: What we’ve found is that the best effectiveness is combining AI with some traditional threat research, threat intelligence capabilities. If you combine threat intelligence and AI, you can do much better than either on their own.

They each have fundamental problems. If you just do threat intelligence, meaning that you’re going to look for threats based on your understanding of the threats that exist in the world, the problem with that is new threats pop up all the time. McAfee sees 500 new pieces of malware every minute. Trying to stay ahead of all the new threats is inherently difficult. At best, you’ll be able to detect about 90% of the threats if you only do your detection based on threat intelligence.

On the flip side, if you only use AI, you’re basically making the AI model decide something on those 90% of the scenarios where you know the answer. If you think about it, the good side of threat intelligence is, for the things you know, you have an almost perfect detection rate. If you know something is bad, you really know it’s bad. It’s a challenge about missing things. With AI, why would you take something you know is bad and make your model do an assessment on it?

The good news with AI is that it can detect things you’ve never seen before. But in order to get high detection rates, you generally have a high false-positive rate. You get a lot of false detections in return for that higher detection rate. The magic that McAfee has found is if you put both threat intelligence and AI together, you can get a much better detection rate and a lower false-positive rate than either technology working on its own.

Here’s why: If, for anything that you have in your threat intelligence perspective, you classify it using threat intelligence and then only things that are unknown do you pass to your AI classifiers, that puts you in a mode where you’re getting the two techniques to work together to provide an outcome that’s better than either one on its own.
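The flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not McAfee's implementation: the `THREAT_INTEL` table, the placeholder hashes, the `model_score` field, and the 0.8 threshold are all hypothetical. Known samples get their verdict from threat intelligence, and only unknown samples are passed to the AI classifier.

```python
# Hypothetical threat intelligence table: file hash -> known verdict.
# The hashes here are placeholders, not real indicators.
THREAT_INTEL = {
    "hash-known-bad": "malicious",
    "hash-known-good": "benign",
}


def ai_score(sample):
    """Stand-in for a trained model; here it just reads a precomputed score."""
    return sample["model_score"]


def classify(sample, threshold=0.8):
    """Return (verdict, source), consulting threat intelligence first."""
    known_verdict = THREAT_INTEL.get(sample["file_hash"])
    if known_verdict is not None:
        # Known samples never reach the model, so the model cannot
        # produce a false result on something already understood.
        return known_verdict, "threat_intel"
    # Only unknown samples are scored by the AI classifier.
    verdict = "malicious" if ai_score(sample) >= threshold else "benign"
    return verdict, "ai_model"


samples = [
    {"file_hash": "hash-known-bad", "model_score": 0.10},
    {"file_hash": "hash-known-good", "model_score": 0.95},
    {"file_hash": "hash-never-seen", "model_score": 0.91},
]
for sample in samples:
    print(sample["file_hash"], classify(sample))
```

In the second sample, the model's high score would have been a false positive, but the threat intelligence lookup answers first.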

VentureBeat: As far as how many people have the capability to do that combination — the traditional folks had the traditional technology. The question is, how many are able to pick up the AI part and do it well? I guess you’d argue that you have.

Grobman: We’ve done it well. What I’ll say is it’s not just about having great AI technology. It’s about having good data and good visibility as to what’s happening in the world. One thing that’s unique to McAfee is we have a billion threat sensors looking at threat telemetry from a wide variety of vantage points. We look at threat telemetry from devices, from networks, from organizational perimeters, and even the cloud, with our CASB [cloud access security broker] solution. Using that threat-sensing, threat telemetry information to help build our models is one of the key things you need beyond just understanding how to use AI effectively.

Above: IoTex’s Ucam is an encrypted, blockchain-based security camera.

VentureBeat: As far as where you picked this up, when deep learning started becoming a real thing, how quickly were you jumping on that?

Grobman: We use a wide range of AI techniques. Some of it is engineered learning. Some of it is deep learning. But we’ve been focused on this for almost five years now. It’s not that there was a big bang moment. The technology has evolved over a number of years. We’re looking at it as a tool in order to meet an objective, as opposed to AI in and of itself being the objective.

It’s a bit like — if you buy a car, what you really care about is that it goes zero to 60 in four seconds and doesn’t pollute the environment. The fact that it’s based on a lithium-ion battery that has microcells to mitigate the risk of fire — unless you’re a real nerd, you don’t really care about the implementation. You care about what it does for you. We’re in a similar situation. Our customers care about our ability to defend them from threats. Behind the scenes, we’ve found that AI is a powerful technology that helps us do that more effectively. But in general, customers don’t really care about how we’re doing it, as long as they get the outcome they desire.

VentureBeat: Did you find that you had to do a lot of hiring around AI to make this happen, rather than the traditional engineers you already had?

Grobman: Yeah, it’s a combination of both. We’ve hired a good number of data scientists. We have dedicated data science teams. At the same time, we’ve done a lot of cross-training throughout the company so that engineers that are not data scientists have a comfort level and are able to use the technology effectively.

VentureBeat: How done is this part? How far do you feel you have to go until you reach a full realization of AI cybersecurity integration?

Grobman: It’s already fairly ubiquitous in the industry. What’s going to happen, though, is the threat landscape will continue to evolve and become more sophisticated, which will continue to put pressure on the need to have more sophistication in the way we detect threats. I don’t necessarily see an endpoint, as much as a natural evolution of finding and evolving the best technology as the threat landscape continues to change and evolve as well.

VentureBeat: As far as the boom and bust in AI cybersecurity venture investment, did we see that? Has that played out?

Grobman: It’s a good question. I don’t know that I have a quantitative answer. Anecdotally, it seems like there was a lot of hype a couple of years ago. I don’t know that I see the same level of intensity because AI has become so commonly used across the tech industry. But I don’t know that that’s more than an anecdotal observation.

VentureBeat: In the future of cybersecurity, how big a part do you think this is versus other developments on your radar, other things you have to get to?

Grobman: The technology will be used in new ways. Think about it this way: Part of what we’re focused on is how we solve the existing problems we have better, and then the second part is how we take new approaches to defend against threats using entirely new techniques. AI can help us in both of those areas.

For example, in the first one, we can use improvements in AI to better detect malicious behavior and malicious content. At the same time, we can use AI to answer questions that we’ve never been able to answer before, such as: Is an attack something that’s crafted to focus on a specific organization? Is it a targeted attack? We’re able to look at using AI to detect the formation of a new campaign before someone realizes it’s a campaign. There are these questions we can now answer by using AI, and that’s a lot of what the future will be about.

Above: Cybersecurity

VentureBeat: I also wonder if blockchain has come to have a lot of importance. People pointed to it as a technology that could lead to assurances about identity. If you brought that in, then you could be more certain that something someone was giving you was verifiable. But the other issue I saw with it involved ransomware, where ransom payments were becoming untraceable. You had both the good actors and the bad actors using blockchain in some way.

Grobman: That’s exactly right. Blockchain is very well-suited for cryptocurrencies. Then you look at whether cryptocurrencies are good [or] bad, and it’s really both. They make it so that individuals that don’t have the means to work with traditional financial institutions and instruments, such as in third-world countries, can work with a currency. The flip side is it’s a catalyst to make ransomware much more effective to operate. It provides criminals with a means for their victims to pay them directly with a currency that’s difficult to trace.

The broader comment on the blockchain is that it’s probably a little over-positioned. Blockchain, at the end of the day, uses well-understood cryptography principles and primitives that we’ve used for years. Those principles are very good at being able to digitally sign or encrypt information. I don’t think that blockchain per se is the holy grail.

VentureBeat: Is there anything else you think of as that holy grail?

Grobman: In cybersecurity defense, I think the only holy grail is that there is no holy grail. Whenever the industry has thought that some technology was going to be the game changer, shortly thereafter adversaries have figured out ways to work around it. I’m very cautious about thinking of anything as a holy grail, at least in cybersecurity.

Above: Finding passwords

VentureBeat: As far as what’s going on right now, Zynga had their Words With Friends database hacked, with almost 200 million personal records obtained there. That was just in the last quarter.

Grobman: Some prescriptive guidance for consumers — if you’re signing up for anything, the most important thing is to have a unique password. Zynga is a good example of that. Even though there’s nothing of tremendous value to steal from Zynga, if somebody used the same login and password for Zynga as they did for their bank, the adversary can take that information and get access to things that are valuable. If users are accessing recreational types of websites or other internet capabilities, either use a password manager or use unique passwords to make sure that if there is a breach, the stolen information can’t be used for more than the site that was impacted.
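As a rough illustration of the unique-password advice, the sketch below generates a separate random password for each site using Python's standard `secrets` module. The site names, the 20-character length, and the helper names are assumptions for the example; a password manager does this, plus secure storage, for you.

```python
import secrets
import string

# Characters to draw from: letters, digits, and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length=20):
    """Build a random password from a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def passwords_for(sites, length=20):
    """Generate an independent password for every site in the list."""
    return {site: generate_password(length) for site in sites}


# Hypothetical site names, for illustration only.
vault = passwords_for(["game-site.example", "bank.example"])
for site, password in vault.items():
    print(site, password)
```

Because each password is generated independently, a breach at one site reveals nothing about the credentials used at another.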

VentureBeat: Is there anything else in recent news in the space that’s caught your attention?

Grobman: A couple of things. 2019 was a year in which ransomware continued to be a major problem. We saw a campaign called Sodinokibi, which was using an affiliate business model. In the business world, there are all sorts of franchise business models where one company has partners or affiliates that sell or execute their services, and there’s profit-sharing. We now see that approach in cyberattacks as well. With Sodinokibi, one group created the underlying ransomware technology, and then they made it available to different groups to affect individuals and organizations. When the ransom was paid, there was profit-sharing. We’ve also seen, in that particular case, interesting behaviors. For example, it was engineered to not impact certain parts of the world. It looked at the installed language pack on a machine. If it was a certain set of languages, it would not execute.

We saw a lot of focus on state and local governments being targeted by ransomware. We also saw 2019 as the year where we started to see attacks on financial institutions occur through the cloud. There was a major breach back in the summer that stemmed from a cloud infiltration. 2019 was a pivotal year in where these trends have gone.