
State-of-the-art AI and machine learning algorithms can generate lifelike images of places and objects, but they're also adept at swapping faces from one person to another, and at spotting sophisticated deepfakes. In a pair of academic papers published by teams at Microsoft Research and Peking University, researchers propose FaceShifter and Face X-Ray: a framework for high-fidelity, occlusion-aware face swapping and a representation for detecting forged face images, respectively. They say that both achieve industry-leading results compared with several baselines without sacrificing performance, and that they require substantially less data than previous approaches.

FaceShifter tackles the problem of replacing the face of a person in a target image with the face of another person in a source image, while preserving the target's head pose, facial expression, lighting, color, intensity, background, and other attributes. Apps like Reflect and FaceSwap purport to do this fairly accurately, but the coauthors of the Microsoft paper assert that they're sensitive to variations in posture and perspective.

FaceShifter boosts face swap fidelity with a generative adversarial network (GAN), an AI model consisting of a generator that attempts to fool a discriminator into classifying synthetic samples as real-world samples. The GAN, called the Adaptive Embedding Integration Network (AEI-Net), extracts target attributes at various spatial resolutions. Its generator incorporates what the researchers call Adaptive Attentional Denormalization (AAD) layers, which adaptively learn where to integrate facial attributes and where to integrate the source's identity. A separate model, the Heuristic Error Acknowledging Refinement Network (HEAR-Net), leverages discrepancies between reconstructed images and their inputs to spot occlusions.
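To make the AAD idea more concrete, here is a minimal NumPy sketch of one attention-weighted denormalization step. It assumes the formulation as we understand it from the FaceShifter paper: normalize the activations, modulate them once with attribute-derived parameters and once with identity-derived parameters, then mix the two results with a per-pixel mask. The modulation tensors and the `mask_weights` projection are hypothetical inputs passed in directly; in a real network they would be produced by learned layers, so treat this as an illustration rather than the authors' implementation.

```python
import numpy as np


def instance_norm(h, eps=1e-5):
    # h has shape (C, H, W); normalize each channel over its spatial dimensions.
    mean = h.mean(axis=(1, 2), keepdims=True)
    var = h.var(axis=(1, 2), keepdims=True)
    return (h - mean) / np.sqrt(var + eps)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def aad_layer(h, gamma_att, beta_att, gamma_id, beta_id, mask_weights):
    """Simplified attention-weighted denormalization (illustrative only).

    gamma_att, beta_att: (C, H, W) modulation assumed to come from target attributes.
    gamma_id, beta_id:   (C, 1, 1) modulation assumed to come from the source identity.
    mask_weights:        (C,) hypothetical 1x1 projection that produces the mask.
    """
    h_norm = instance_norm(h)
    attr_branch = gamma_att * h_norm + beta_att  # spatially varying attribute signal
    id_branch = gamma_id * h_norm + beta_id      # identity signal, constant per channel
    # 1x1 "convolution" over channels gives one logit per pixel; sigmoid maps it to (0, 1).
    mask = sigmoid(np.tensordot(mask_weights, h_norm, axes=(0, 0)))[None, :, :]
    # Where the mask is near 1 the identity dominates; near 0 the attributes dominate.
    out = (1.0 - mask) * attr_branch + mask * id_branch
    return out, mask


# Usage with random stand-in tensors.
rng = np.random.default_rng(0)
C, H, W = 4, 8, 8
out, mask = aad_layer(
    rng.normal(size=(C, H, W)),
    rng.normal(size=(C, H, W)), rng.normal(size=(C, H, W)),
    rng.normal(size=(C, 1, 1)), rng.normal(size=(C, 1, 1)),
    rng.normal(size=C),
)
```

The design point the sketch illustrates is that the mask is computed per pixel, so different facial regions can draw on identity or on attributes to different degrees, rather than applying one global blend.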

Above: More samples from FaceShifter.

The team says that in qualitative tests, FaceShifter preserved face shapes and faithfully respected the lighting and image resolution of targets. Moreover, even on "wild faces" scraped from the internet, the framework learned to recover anomalous regions, including glasses, shadows, reflections, and other uncommon occlusions, without relying on human-annotated data.
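One way to see how occlusions can be found without annotations: as we understand the paper, HEAR-Net's input includes a heuristic error, the difference between a target image and the result of swapping that target's face onto itself. Anything the face swapper cannot reproduce, such as glasses or shadows, shows up as a large error. The NumPy sketch below computes that error on a toy image. The reconstruction is faked (we simply drop the occluder), and the threshold-based `occlusion_mask` is a hypothetical simplification; in the paper the error map is fed to a refinement network rather than thresholded.

```python
import numpy as np


def heuristic_error(target, reconstruction):
    """Discrepancy between an image and its self-swap reconstruction.

    target, reconstruction: float arrays of shape (H, W, 3) with values in [0, 1].
    Returns the signed error (H, W, 3) and a per-pixel magnitude map (H, W).
    """
    err = target - reconstruction
    magnitude = np.abs(err).mean(axis=2)
    return err, magnitude


def occlusion_mask(magnitude, threshold=0.1):
    # Hypothetical post-processing: flag pixels the swapper failed to reproduce.
    return magnitude > threshold


# Toy example: a flat "face" with a dark occluder (say, glasses) in one corner.
face = np.full((6, 6, 3), 0.7)
target = face.copy()
target[0:2, 0:2] = 0.0     # the occluder is present in the target
reconstruction = face      # stand-in for a self-swap that loses the occluder
_, mag = heuristic_error(target, reconstruction)
mask = occlusion_mask(mag)
print(int(mask.sum()))     # prints 4: the four occluded pixels
```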


“The proposed framework shows superior performance in generating realistic face images given any face pairs without subject specific training. Extensive experiments demonstrate that the proposed framework significantly outperforms previous face swapping methods,” wrote the team.

In contrast to FaceShifter, Face X-Ray attempts to detect when a headshot might be a forgery. As the researchers in the corresponding paper note, there's a real need for tools like it, because forged images might be abused for malicious purposes. In June 2019, a report revealed that a spy used an AI-generated profile picture to fool contacts on LinkedIn. And just last December, Facebook discovered hundreds of accounts with profile photos of fake faces synthesized using AI.

Unlike existing work, Face X-Ray doesn't require foreknowledge of manipulation methods or human supervision. Instead, it produces grayscale images revealing whether a given input image can be decomposed into a blend of two images from different sources. The team claims that this works because most face manipulation methods share a common step: blending an altered face into an existing background image. Each image carries its own distinctive marks, introduced either by hardware (like sensors and lenses) or by software (like compression and synthesis algorithms), and those marks tend to be consistent throughout an image. A boundary where they change therefore betrays the blend.
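The blending assumption can be written down directly. As we understand the Face X-Ray paper, a manipulated image is modeled as I = M ⊙ I_F + (1 − M) ⊙ I_B, where I_F is the altered foreground face, I_B the background, and M a soft mask in [0, 1]. The face X-ray is then B = 4 · M ⊙ (1 − M), which is zero wherever the image comes from a single source (M = 0 or M = 1) and peaks along the blending boundary. The NumPy sketch below implements just those two formulas; the function names are our own.

```python
import numpy as np


def blend(foreground, background, mask):
    # I = M * I_F + (1 - M) * I_B, with a (H, W) mask applied to every color channel.
    m = mask[..., None]
    return m * foreground + (1.0 - m) * background


def face_xray(mask):
    # B = 4 * M * (1 - M): 0 where M is 0 or 1, reaching 1 where M = 0.5.
    return 4.0 * mask * (1.0 - mask)


# A one-row soft mask that ramps from background (0) to foreground (1).
ramp = np.array([[0.0, 0.25, 0.5, 0.75, 1.0]])
print(face_xray(ramp))  # [[0.   0.75 1.   0.75 0.  ]]

# A pristine image has no blend: the mask is all zeros, so its X-ray is blank.
print(face_xray(np.zeros((2, 2))).max())  # 0.0
```

The detector itself is a network trained to predict B from the image alone; these formulas only define what it is asked to output.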

Face X-Ray therefore doesn't need to rely on knowledge of the artifacts associated with a specific face manipulation technique, and the algorithm underpinning it can be trained without any fake images generated by existing manipulation methods.
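Because the supervision signal is just the blending mask, training pairs can be manufactured from real photos alone. The NumPy sketch below shows the general recipe under simplifying assumptions of our own: a centered ellipse stands in for the face region (the paper, as we understand it, derives the region from facial landmarks and deforms it), and a box blur stands in for the mask smoothing. The helper names `ellipse_mask`, `box_blur`, and `make_training_pair` are hypothetical.

```python
import numpy as np


def ellipse_mask(h, w, cy, cx, ry, rx):
    # Hard 0/1 mask of an axis-aligned ellipse; a stand-in for a landmark-based face region.
    yy, xx = np.mgrid[0:h, 0:w]
    return (((yy - cy) / ry) ** 2 + ((xx - cx) / rx) ** 2 <= 1.0).astype(float)


def box_blur(mask, k=2):
    # Mean over a (2k+1) x (2k+1) window with edge padding; output stays in [0, 1].
    h, w = mask.shape
    padded = np.pad(mask, k, mode="edge")
    out = np.zeros_like(mask)
    for dy in range(-k, k + 1):
        for dx in range(-k, k + 1):
            out += padded[k + dy : k + dy + h, k + dx : k + dx + w]
    return out / (2 * k + 1) ** 2


def make_training_pair(real_a, real_b, rng):
    """Blend a face-shaped region of real_b into real_a; return (image, X-ray label)."""
    h, w, _ = real_a.shape
    hard = ellipse_mask(h, w, h / 2, w / 2,
                        ry=rng.uniform(0.25, 0.4) * h, rx=rng.uniform(0.2, 0.35) * w)
    soft = box_blur(hard, k=2)
    m = soft[..., None]
    image = m * real_b + (1.0 - m) * real_a
    label = 4.0 * soft * (1.0 - soft)  # nonzero only along the blended boundary
    return image, label


# Usage with two random "real" images.
rng = np.random.default_rng(0)
a = rng.uniform(size=(32, 32, 3))
b = rng.uniform(size=(32, 32, 3))
image, label = make_training_pair(a, b, rng)
```

No face-swapping model is involved anywhere in this pipeline, which is the property the researchers rely on when they say the detector can be trained without method-specific fakes.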

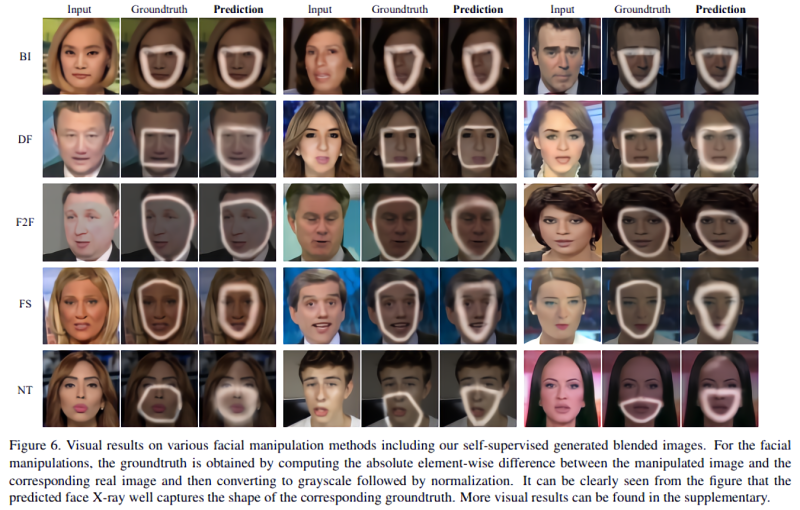

In a series of experiments, the researchers trained Face X-Ray on FaceForensics++, a large-scale video corpus containing 1,000 original clips manipulated with four state-of-the-art face manipulation methods, as well as on another training data set comprising blended images constructed from real images. They evaluated Face X-Ray's ability to generalize on four data sets: a subset of the aforementioned FaceForensics++ corpus; a collection of thousands of visual deepfake videos released by Google to foster the development of deepfake detection methods; images from the Deepfake Detection Challenge; and Celeb-DF, a corpus of 408 real videos and 795 synthesized videos with reduced visual artifacts.

The results show that Face X-Ray managed to distinguish previously unseen forged images and reliably predict blending regions. The team notes that because their method relies on the existence of a blending step, it might not be applicable to wholly synthetic images, and that it could be defeated by adversarial samples. Nonetheless, they say it's a promising step toward a general face forgery detector.