
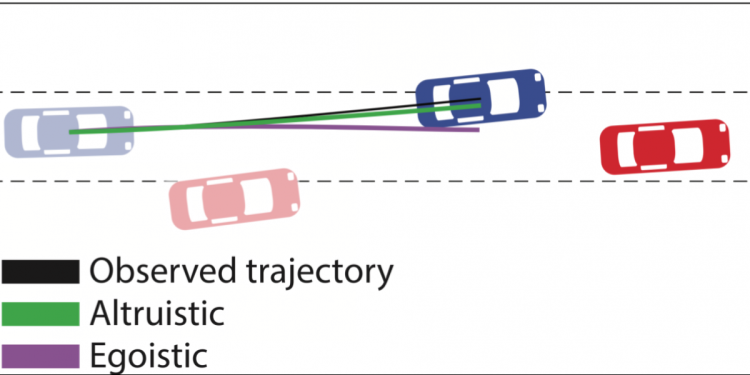

Can social awareness make autonomous cars more robust on the road? That’s what a team of researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) set out to discover in a recent study. They built a system that classifies other drivers’ behavior by how selfish it is: in other words, how likely a driver is to act altruistically toward other cars. In tests, the researchers say, their algorithm improved predictions of how drivers would behave by 25%.

“Creating more human-like behavior in autonomous vehicles (AVs) is fundamental for the safety of passengers and surrounding vehicles, since behaving in a predictable manner enables humans to understand and appropriately respond to the AV’s actions,” said graduate student and lead research author Wilko Schwarting in a statement.

The team’s model draws on both game theory and the psychological concept of Social Value Orientation (SVO), which indicates the degree to which someone is selfish (“egoistic”) versus cooperative (“prosocial”). To build it, the researchers modeled scenarios in which drivers try to maximize their utility, and the model learned to predict from short snippets of motion whether a driver was cooperative, altruistic, or egoistic. In this way, the AI came to understand when it’s appropriate to exhibit different driving behaviors.
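In the SVO literature, orientation is often expressed as an angle that weights an agent’s own reward against the reward of others. The sketch below illustrates that idea, assuming the common convention utility = cos(φ) · own reward + sin(φ) · other’s reward. The function names, category thresholds, and reward numbers are hypothetical and are not taken from the researchers’ code.

```python
import math


def svo_utility(own_reward: float, other_reward: float, phi_deg: float) -> float:
    """Blend an agent's own reward with another agent's reward using an SVO angle.

    Assumed convention: phi = 0 deg counts only one's own reward (egoistic),
    phi = 45 deg weights both equally (prosocial), and phi = 90 deg counts only
    the other agent's reward (altruistic).
    """
    phi = math.radians(phi_deg)
    return math.cos(phi) * own_reward + math.sin(phi) * other_reward


def svo_category(phi_deg: float) -> str:
    """Map an SVO angle to a coarse label (thresholds are illustrative assumptions)."""
    if phi_deg < -22.5:
        return "competitive"
    if phi_deg < 22.5:
        return "egoistic"
    if phi_deg < 67.5:
        return "prosocial"
    return "altruistic"


# Hypothetical merge decision: blocking gains the driver +1 but costs the
# merging car -1; yielding gains the driver nothing but helps the merging car.
options = {"block": (1.0, -1.0), "yield": (0.0, 1.0)}

for phi in (0.0, 45.0, 90.0):
    best = max(options, key=lambda name: svo_utility(*options[name], phi))
    print(f"phi={phi:>4} deg ({svo_category(phi)}): {best}")
```

With these made-up rewards, the egoistic driver prefers to block, while the prosocial and altruistic drivers prefer to yield. The same physical situation produces different behavior depending on a single orientation parameter.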


In merging and left-turn scenarios, for example, drivers either let other cars into a lane or don’t. (Merging cars are deemed more competitive than non-merging cars.) The researchers’ model might therefore choose to be more assertive to make sure it can change lanes in heavy traffic. In other situations, such as an unprotected left turn, the model might wait for an approaching car with a more prosocial driver before turning.
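Judging another driver’s orientation from observed behavior can be illustrated with a much simpler stand-in than the researchers’ system. This sketch treats each observed merge interaction as a yes/no choice between yielding and blocking. It assumes the driver picks actions with softmax (Boltzmann-rational) probabilities over SVO-weighted utilities, then returns the angle on a coarse grid that best explains what was observed. The rewards, the rationality parameter `beta`, and the grid are hypothetical. The published work reasons over continuous trajectories in a game-theoretic setting rather than discrete choices.

```python
import math

# Hypothetical rewards (own, other) for a driver whose lane another car wants to enter.
ACTIONS = {"yield": (-0.5, 1.0), "block": (0.5, -1.0)}


def svo_utility(own: float, other: float, phi_deg: float) -> float:
    """SVO-weighted utility: cos(phi) * own reward + sin(phi) * other's reward."""
    phi = math.radians(phi_deg)
    return math.cos(phi) * own + math.sin(phi) * other


def action_log_prob(action: str, phi_deg: float, beta: float = 3.0) -> float:
    """Log-probability of an action under an assumed softmax choice model."""
    scores = {a: beta * svo_utility(*r, phi_deg) for a, r in ACTIONS.items()}
    log_norm = math.log(sum(math.exp(s) for s in scores.values()))
    return scores[action] - log_norm


def estimate_svo(observed: list[str], grid=range(-90, 91, 5)) -> int:
    """Return the grid angle (degrees) that maximizes the likelihood of the observed actions."""
    return max(grid, key=lambda phi: sum(action_log_prob(a, phi) for a in observed))


print(estimate_svo(["yield", "yield", "yield", "block"]))
```

Under these assumptions, a driver who yields in three of four merges is placed in the prosocial range. A driver who always blocks is pushed toward negative, competitive angles. An autonomous car could then use an estimate like this to decide whether to assert itself or wait.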

The researchers say their system isn’t yet robust enough to be deployed on public roads, but they plan to extend the model to pedestrians, cyclists, and other agents in driving environments. They also intend to investigate other robotic systems that operate among humans, such as household robots, and to integrate SVO into general prediction and decision-making algorithms.

“Working with and around humans means figuring out their intentions to better understand their behavior,” says Schwarting, whose paper will be published this week in the latest issue of the Proceedings of the National Academy of Sciences (PNAS). “People’s tendencies to be collaborative or competitive often spill over into how they behave as drivers. In this paper we sought to understand if this was something we could actually quantify.”