
A major consortium of AI community stakeholders today introduced MLPerf Inference v0.5, the group’s first benchmark suite for measuring the performance and power efficiency of AI systems. Inference benchmarks are essential to understanding how much time and power are required to deploy a neural network for common tasks, such as using computer vision to predict the contents of an image.

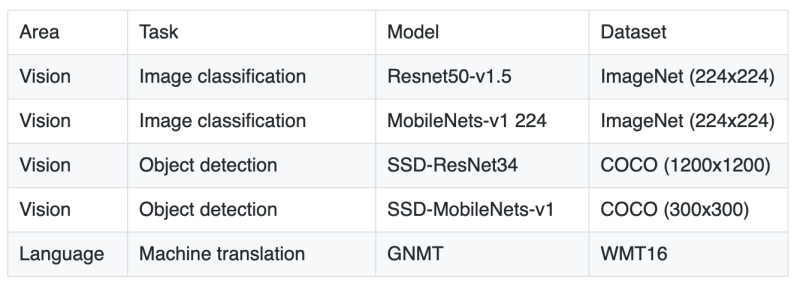

The suite consists of 5 benchmarks: an English-German machine translation benchmark using the WMT English-German data set, 2 object detection benchmarks using the COCO data set, and 2 image classification benchmarks using the ImageNet data set.

Submissions will be reviewed in September and MLPerf will share performance results in October, an organization spokesperson told VentureBeat in an email.


The inference standards were decided upon over the past 11 months by partner organizations such as Arm, Facebook, Google, General Motors, Nvidia, and the University of Toronto, MLPerf said in a statement shared with VentureBeat.

MLPerf Inference Working Group cochair David Kanter told VentureBeat in a phone interview that benchmarks are important because they let those evaluating inference systems decide definitively which solutions are worth the investment. Benchmarks also help engineers understand what to optimize in the systems they build.

“If you’re a researcher or you’re an engineer designing these next generation systems, it’s important to know what are the workloads and metrics that matter, because ultimately at the end of the day, all the engineers working on this stuff are very smart and talented folks, but we’ve got to point them in the right direction and make sure they’re optimizing for the right things so that the solutions that come out — whether it’s 2 or 5 years from now — are designed for the workloads of today and tomorrow,” Kanter said.

The inference benchmarks introduced today follow the alpha release of the MLPerf Training benchmark in December 2018. Those training results placed Google’s tensor processing units and Nvidia’s graphics processing units among the top hardware for accelerating machine learning.

MLPerf is a group of 40 organizations, including Alibaba, Baidu, Facebook, and Google, that are working together to create a set of benchmarks (agreed-upon common sets of models and data sets) used to determine how quickly hardware can train an AI model or run one in deployment, whether on cloud computing platforms, on devices like PCs and smartphones, or on multi-stream systems for autonomous vehicles.

The MLPerf reference code implementations will be incorporated into popular tools like Facebook’s PyTorch, Google’s TensorFlow, and ONNX, the consortium-backed format for interoperability between the hardware and frameworks used for machine learning.

Plans for MLPerf to measure AI energy efficiency come shortly after researchers found that training deep learning models like OpenAI’s GPT-2 and Google’s BERT and Transformer can have a carbon footprint up to 5 times the lifetime emissions of a car.

Last week, supercomputer ranking project TOP500 released its Green500 list of the most energy-efficient supercomputers. Shoubu system B took the top spot at 17.6 gigaflops per watt, followed by Nvidia’s DGX SaturnV Volta, Summit, and the AI Bridging Cloud Infrastructure (ABCI) at the University of Tokyo.