While the most sophisticated driverless cars on public roads can handle haboobs and rainstorms like champs, certain types of precipitation, like snow, remain a challenge for them. That's because snow can cover the cameras those cars rely on to perceive their surroundings, trick sensors into detecting obstacles that aren't there, and obscure road signs and other structures that normally serve as navigational landmarks.

In an effort to spur the development of cars capable of driving in wintry weather, startup Scale AI this week open-sourced Canadian Adverse Driving Conditions (CADC), a data set created with the University of Waterloo and the University of Toronto that contains over 56,000 images captured in conditions including snow. While several corpora with snowy sensor samples have been released to date, including Linköping University's Automotive Multi-Sensor Dataset (AMUSE) and the Mapillary Vistas data set, Scale AI claims that CADC is the first to focus specifically on "real-world" driving in snowy weather.

“Snow is hard to drive in — as many drivers are well aware. But wintry conditions are especially hard for self-driving cars because of the way snow affects the critical hardware and AI algorithms that power them,” wrote Scale AI CEO Alexandr Wang in a blog post. “A skilled human driver can handle the same road in all weathers — but today’s AV models can’t generalize their experience in the same way. To do so, they need much more data.”

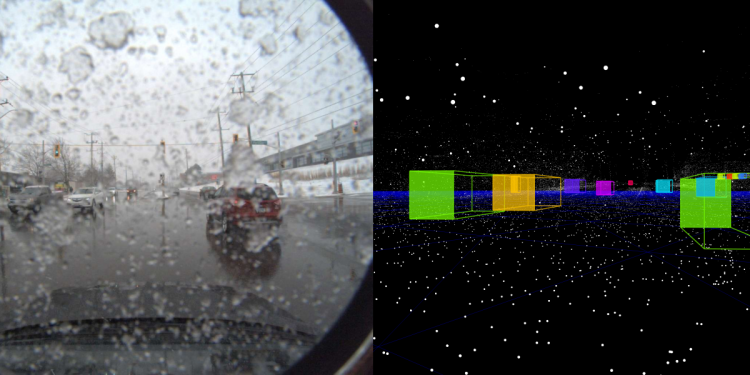

Above: A camera image from the data set.

Scale AI says that the routes captured in CADC were chosen based on traffic levels, the variety of obstacles (e.g., cars, pedestrians, trucks, buses, garbage containers on wheels, traffic guidance objects, bicycles, horses and buggies, and animals), and, most importantly, snowfall. Using Autonomoose, an autonomous vehicle platform created as a joint effort between the Toronto Robotics and AI Laboratory (TRAIL) and the University of Waterloo's Waterloo Intelligent Systems Engineering Lab (WISE Lab), teams of engineers drove a Lincoln MKZ Hybrid equipped with a suite of lidar, inertial sensors, GPS, and vision sensors (including eight wide-angle cameras) along 20 kilometers (12.4 miles) of Waterloo roads.

Scale AI tapped its data annotation platform, which combines human work and review with smart tools, statistical confidence checks, and machine learning checks, to label each of the resulting camera images, 7,000 lidar sweeps, and 75 scenes of 50 to 100 frames each. It claims that the labeling accuracy is "consistently higher" than what either human or synthetic labeling techniques could achieve independently, as measured across seven annotation quality areas.

University of Waterloo professor Krzysztof Czarnecki hopes the data set will put the wider research community on equal footing with companies testing self-driving cars in winter conditions, including Alphabet's Waymo, Argo, and Yandex. While both Argo and Waymo have released open source driving data sets, neither contains as many snow-covered sensor readings as CADC.

“We want to engage the research community to generate new ideas and enable innovation,” Czarnecki said. “This is how you can solve really hard problems, the problems that are just too big for anyone to solve on their own.”