
In many ways, Nvidia is the beneficiary of having been in the right place at the right time with regard to AI. A confluence of advances in compute, data, and algorithms led to explosive interest in deep neural networks, and our current approach to training them depends heavily on mathematical operations (chiefly large matrix multiplications) that Nvidia's graphics cards happened to be really efficient at.

That’s not to say that Nvidia hasn’t executed extremely well once the opportunity presented itself. To its credit, the company recognized the trend early and invested heavily before it really made sense to do so, beating the “innovator’s dilemma” that’s caused many a great (or formerly great) company to miss out.

A couple of weeks ago, I attended Nvidia's GPU Technology Conference (GTC) 2018 and came away with a few thoughts. Nvidia has excelled in two areas in particular: developing software and ecosystems that take advantage of its hardware, and tailoring that software deeply to the different domains in which users apply it. Both were on full display at GTC 2018, where the company rolled out a number of interesting new hardware, software, application, and ecosystem announcements for its deep learning customers.

Above: Nvidia CEO Jensen Huang presents on AI technology at GTC 2018.

As a machine learning and AI enthusiast, here are some of the announcements I found most interesting.

New DGX-2 deep learning supercomputer

After announcing the doubling of V100 GPU memory to 32GB, Nvidia unveiled the DGX-2, a deep learning-optimized server containing 16 V100s and a new high-performance interconnect called NVSwitch. The DGX-2 delivers 2 petaflops of compute power and, according to Nvidia, offers significant cost and energy savings relative to traditional server architectures. On a challenging, representative task, the DGX-2 trained a FairSeq neural machine translation (NMT) model in a day and a half, versus 15 days on the previous-generation DGX-1.
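The headline numbers can be sanity-checked with simple arithmetic. This is a back-of-the-envelope sketch, not a benchmark, and it assumes the 2-petaflop figure is aggregate peak Tensor Core throughput split evenly across the 16 GPUs.

```python
# Back-of-the-envelope check of the DGX-2 figures quoted above.
# Assumption: "2 petaflops" is aggregate peak Tensor Core throughput,
# divided evenly across the 16 V100 GPUs in the box.

SYSTEM_PFLOPS = 2.0      # quoted DGX-2 compute
NUM_GPUS = 16            # V100s per DGX-2
DGX1_TRAIN_DAYS = 15.0   # FairSeq NMT training time on the DGX-1
DGX2_TRAIN_DAYS = 1.5    # same task on the DGX-2

# 1 petaflop = 1,000 teraflops
per_gpu_tflops = SYSTEM_PFLOPS * 1000 / NUM_GPUS
speedup = DGX1_TRAIN_DAYS / DGX2_TRAIN_DAYS

print(f"Per-GPU peak: {per_gpu_tflops:.0f} TFLOPS")
print(f"Training speedup vs. DGX-1: {speedup:.0f}x")
```

Under that assumption each GPU contributes 125 TFLOPS, which lines up with the V100's quoted Tensor Core peak, and the FairSeq result works out to a 10x reduction in training time.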

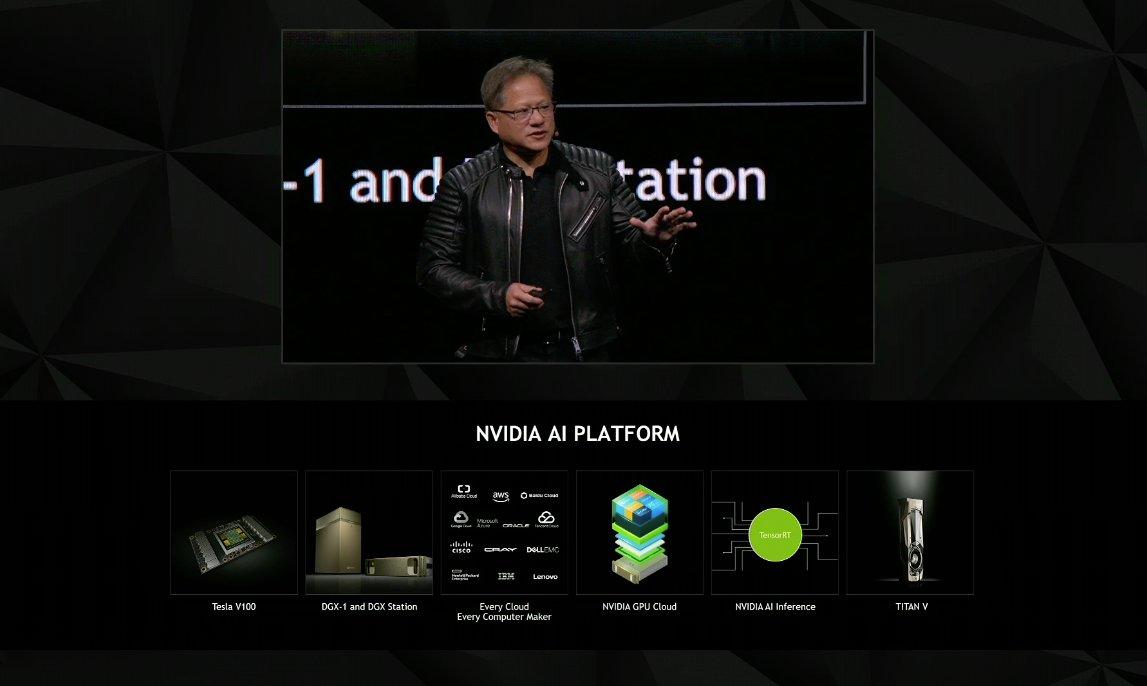

Deep learning inference and TensorRT 4

Inference (using DL models versus training them) was a big focus area for Nvidia CEO Jensen Huang. During his keynote, Huang spoke about the rapid increase in the complexity of AI models and offered a mnemonic for thinking about the needs of inference systems both in the datacenter and at the edge: PLASTER, for programmability, latency, accuracy, size, throughput, energy efficiency, and rate of learning. To meet these needs, he announced the release of TensorRT 4, the latest version of Nvidia's software for optimizing inference performance on its GPUs.

The new version of TensorRT has been integrated with TensorFlow and also supports the ONNX model-interchange format, allowing it to be used with models developed in the PyTorch, Caffe2, MXNet, CNTK, and Chainer frameworks. Huang also highlighted performance gains in the new version, including an 8x speedup with TensorFlow-TensorRT versus TensorFlow alone and 45x higher throughput versus CPUs for certain network architectures.

New Kubernetes support

Kubernetes (K8s) is an open source platform for orchestrating workloads on public and private clouds. It came out of Google and is growing very rapidly. While the majority of Kubernetes deployments are focused on web application workloads, the software has been gaining popularity among deep learning users. (Check out my interviews with Matroid’s Reza Zadeh and OpenAI’s Jonas Schneider for more.)

To date, working with GPUs in Kubernetes has been pretty frustrating. According to the official K8s docs, "support for NVIDIA GPUs was added in v1.6 and has gone through multiple backwards incompatible iterations." Yikes! Nvidia hopes its new GPU Device Plugin (confusingly referred to as "Kubernetes on GPUs" in Huang's keynote) will allow workloads to more easily target GPUs in a Kubernetes cluster.
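With a device plugin installed, a workload targets GPUs by requesting the extended resource `nvidia.com/gpu` in its container limits, and the scheduler places the pod on a node that has one free. The sketch below builds such a Pod manifest as a Python dict and prints it as JSON; the pod name and container image are hypothetical placeholders, and the helper `gpu_pod_manifest` is invented for illustration.

```python
import json

# Minimal sketch of a Kubernetes Pod that asks for GPUs through the
# "nvidia.com/gpu" extended resource advertised by Nvidia's device plugin.
# The pod name and image used below are hypothetical placeholders.


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod spec that requests `gpus` GPUs via resource limits."""
    if gpus < 1:
        raise ValueError("gpus must be >= 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "OnFailure",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested under limits; Kubernetes does not
                    # share or overcommit them between containers.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


manifest = gpu_pod_manifest("train-job", "example.com/my-training-image:latest")
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f -` accepts JSON on standard input, the printed output could be piped straight into a cluster that has the plugin running.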

New applications: Project Clara and Drive Sim

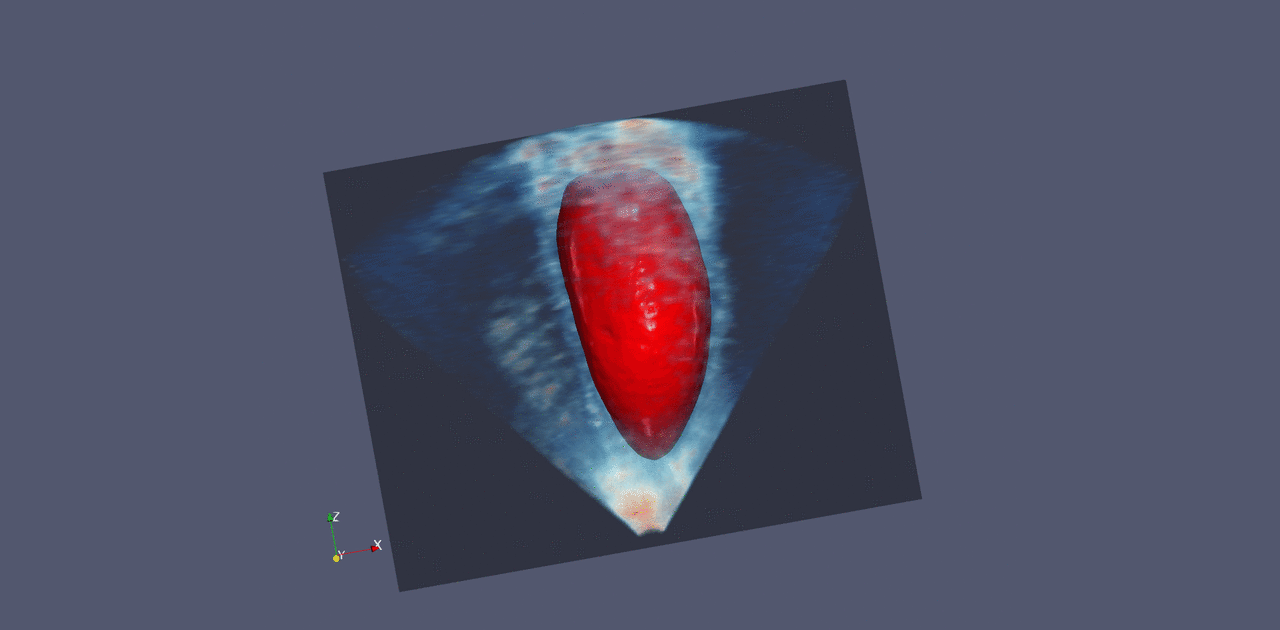

Combining its strengths in both graphics and deep learning, Nvidia shared a couple of interesting new applications it has developed. Project Clara creates rich, cinematic renderings of medical imagery, allowing doctors to more easily diagnose medical conditions. Amazingly, it does this in the cloud, using deep neural networks to enhance traditional images, without requiring updates to the three million imaging instruments currently installed at medical facilities.

Above: Project Clara visualization of a heart, from GTC 2018.

Drive Sim is a simulation platform for self-driving cars. There have been many efforts to train deep learning models for self-driving cars using simulation, including using commercial games like Grand Theft Auto. (In fact, the GTA publisher has shut down several of these efforts for copyright reasons.) Training a learning algorithm on synthetic roads and cityscapes hasn't been the big problem, though. Rather, the challenge has been that models trained on synthetic roads haven't generalized well to the real world.

I spoke to Nvidia chief scientist Bill Dally about this, and he said the company has seen good generalization by incorporating a couple of research-supported techniques: combining real and simulated data in the training set, and using domain adaptation techniques, including a NIPS 2017 approach based on coupled GANs. (See also the discussion around a related Apple paper presented at the very first TWiML Online meetup.)
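The first of those techniques, mixing real and simulated data, can be as simple as drawing every training batch from both pools in a fixed ratio. The sketch below is one illustrative way to do that, not Nvidia's actual pipeline; the function name `mixed_batches`, the 25% real share, and the string placeholders standing in for camera frames are all hypothetical. Each example is tagged with its source domain, which is the kind of label a domain adaptation loss would need.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mixed_batches(real: Sequence[T], synthetic: Sequence[T], batch_size: int,
                  real_fraction: float, num_batches: int,
                  seed: int = 0) -> List[List[Tuple[str, T]]]:
    """Return batches that each draw a fixed share of examples from real data.

    Hypothetical helper for illustration. Examples are sampled with
    replacement and tagged with their source domain ("real" or "synthetic").
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be in [0, 1]")
    rng = random.Random(seed)  # seeded so batches are reproducible
    n_real = round(batch_size * real_fraction)
    n_syn = batch_size - n_real
    batches = []
    for _ in range(num_batches):
        batch = [("real", x) for x in rng.choices(real, k=n_real)]
        batch += [("synthetic", x) for x in rng.choices(synthetic, k=n_syn)]
        rng.shuffle(batch)  # interleave domains within the batch
        batches.append(batch)
    return batches


# Placeholder "frames": a small real dataset and a much larger simulated one.
real_frames = [f"real_{i}" for i in range(100)]
sim_frames = [f"sim_{i}" for i in range(1000)]
batches = mixed_batches(real_frames, sim_frames, batch_size=32,
                        real_fraction=0.25, num_batches=10)
```

With these settings every batch holds 8 real and 24 simulated examples. Keeping the ratio fixed per batch, rather than simply concatenating the two datasets, stops the much larger simulated pool from drowning out the scarce real data.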

Impressively, for as much as Nvidia announced for the deep learning user, the conference and keynote also had a ton to offer graphics, robotics, and self-driving car users, as well as users from industries like health care, financial services, and oil and gas.

Nvidia is not without challenges in the deep learning hardware space, as I’ve previously written, but the company seems to be doing all the right things. I’m looking forward to seeing what the company is able to pull off in the next 12 months.

This story originally appeared in the This Week in Machine Learning & AI newsletter. Copyright 2018.

Sam Charrington is host of the podcast This Week in Machine Learning & AI (TWiML & AI) and founder of CloudPulse Strategies.