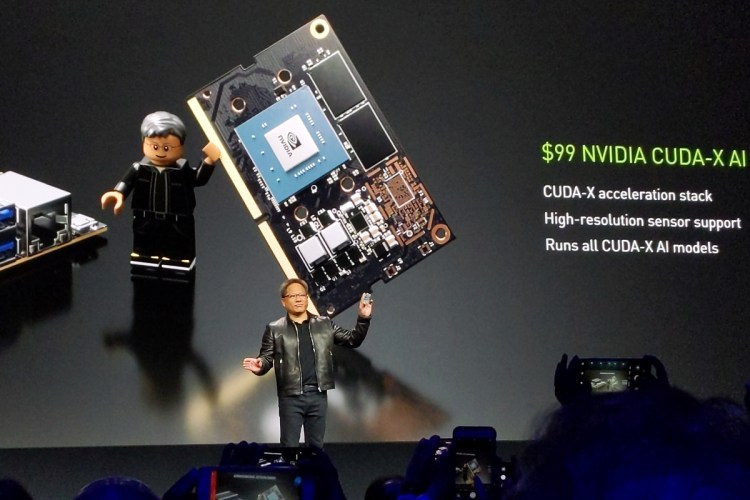

Nvidia is adding a new embedded computer to its Jetson line for developers deploying AI on the edge, and it is the company's smallest computer ever, according to CEO Jensen Huang. Named the Jetson Nano, the CUDA-X computer delivers 472 gigaflops (GFLOPS) of compute performance, comes with 4GB of memory, and can operate on as little as 5 watts of power.

Jetson Nano and the Jetson Nano developer kit will make their debut today at the Nvidia GPU Tech Conference (GTC) in San Jose, California.

The Jetson Nano, built for deploying AI on the edge without an internet connection, follows last year's release of the Jetson AGX Xavier and the 2017 release of the Jetson TX2.

Above: Nvidia Jetson Nano

The Jetson Nano developer kit is available today for $99, while the $129 Jetson Nano computer for embedded devices will be available in June.

For comparison, the AGX Xavier retails for $1,299 and the TX2 for about $600.

“We’re bringing Jetson into the mainstream market,” Nvidia VP of autonomous machines Deepu Talla said today.

The Jetson TX2 runs on 7.5 watts of power and has 8GB of memory, while the AGX Xavier can run on as little as 10 watts of power and comes with 32GB of memory.

Like its predecessors, the Jetson Nano will work with Nvidia's collection of more than 40 CUDA-X AI deep learning libraries.

Above: Jetson Nano developer kit for $99

The Jetson system for edge computing on mobile or embedded devices is currently used by 200,000 developers, Talla said.

Edge computing helps power inference for robots, drones, security cameras, and many other devices that cannot rely on an internet connection.

The Nano, TX2, and AGX Xavier can be used to create robots from Nvidia, such as the Kaya, Carter, and Link robots, with the Isaac robot engine and Isaac Gym robot simulator. Nvidia opened its robotics research lab in Seattle in January.

Also announced today: AWS instances powered by Tesla T4 GPUs, general availability of the Constellation platform for autonomous driving, the Safety Force Field for autonomous vehicles, and the reorganization of more than 40 Nvidia deep learning acceleration libraries under the new umbrella name CUDA-X AI.

In other recent edge machine learning news, Google released TensorFlow Lite 1.0 last month at the TensorFlow Dev Summit.