Above: Nvidia CEO Jensen Huang at CES 2018.

We also talked about a method for achieving SOTIF, safety of the intended functionality. The way to do that is simulation. The best way to know whether something works is to simulate it. We can drive thousands of miles per car a week. A fleet of cars would have hundreds of thousands of miles per week. But society drives trillions of miles per year. 15 trillion miles per year is how far we all drive around the world. We’d like to have some percentage representation of that, and the only way to do that is through simulation.

We created a parallel universe, an alternative universe, a virtual reality where we would have virtual reality cars driving in virtual reality cities. Each one of the cars would have an artificial intelligence, the entire stack of Drive inside every single car. Our AI stack, Drive, will be in all these VR cars, driving in VR cities, running on a huge supercomputer with many versions of those VR universes. We’re going to have thousands of parallel universes where all these VR cars are driving around, and as a result, we can test a lot more miles in VR than we could physically. Of course, in addition, we’ll also drive the cars. We have a large fleet of cars. We’ll be testing our software that way as well.

It’s called Autosim. It’s a bit like the movie Inception. We have VR. We have AR. We have AI. They’re all embedded inside. It’s a bit of a brain-teaser, but once you put on the HMD, you’ll feel exactly what it is. All of a sudden, using VR, we’ve fused our world with that virtual world. We’ve wormholed into that virtual world. Within that virtual world, you can see everything, and it works just fine.

We also announced several partnerships. We’ll be working with Uber to create their fleet of self-driving taxis. We announced that the hottest startup in self-driving cars today, Aurora, Chris Urmson’s company, has partnered with Volkswagen and Hyundai to create self-driving cars, and we’re partnering with Aurora to make that possible. We’re partnering with VW to infuse AI into their next-generation fleet of cars. It’s AI for self-driving and it’s AI for the cockpit. Your future car will basically become an AI agent. We announced Mercedes yesterday. Mercedes’ next-generation fleet of cars will have Nvidia AI in their digital cockpits.

Above: Jensen Huang, CEO of Nvidia, talks about gaming at CES 2018.

VB: Early on, you said that CPUs are really not good for AI, and that the GPU was much better, more naturally good at it. But it seems like with the latest design, which is a 9 billion transistor chip, where last year’s was 15 billion, you’re almost getting away from the GPU to get ground-up AI chips. Is that a turning point? First CPUs weren’t as good at this, but now you’re going into AI chips that are fundamentally good at AI.

Huang: The initial proposition is that CPUs aren’t as good at AI, and I’m not going to disagree with that. I would agree that CPUs aren’t good for very high throughput parallel computing of almost anything. We did a lot of AI work on our GPUs. And now, in this new SoC, we actually have a specific accelerator dedicated to deep learning as well. Out of our Xavier, there are 30 trillion operations per second, which is basically four Pascal chips. It’s a combination of 16-bit floating point and 8-bit integers. Of the 30 trillion, 20 is in the GPU and 10 is in the specialized accelerator, a special processor like a TPU.

Does that mean there are new ways of designing deep learning and AI? You can see that we chose 20/10 for a specific reason. There are some networks that we know are going to be relatively well-known. Therefore, we can simplify the design even further. DLA is, at the core level, about 33 percent more energy-efficient than CUDA. If I design something that’s very limiting, very specialized, I can get another third of energy efficiency at the core level. At the chip level, when you add the overhead of the I/Os and all the stuff that’s needed to keep the chip going, you reduce that 33 percent by some. Call it maybe another third. You don’t get the full third. But the benefit is that if you know what you want to run, like a CNN for some types of networks, where you know you don’t need the precision, we can put that into our DLA.

Unfortunately, there are so many different types of networks. There are CNNs, RNNs, networks where I don’t want to reduce the precision from 32-bit floating point. I want the flexibility that goes along with all these different networks. I want to run that on CUDA. I think the answer is, we believe deep learning can have the benefit of both. The incremental savings are likely to be less and less over time, because Tensor Core is becoming so efficient. But nonetheless, there’s some benefit to be had there. Both of them are much better than CPUs.

Question: Related to security concerns, I want our readers to be clear about what you’re saying on Spectre issues with GPUs. Are there concerns with your product, GPUs, around the Spectre and Meltdown array of issues? Is that what you’re fixing?

Huang: This morning there was some news that was incorrectly reported. I forget exactly what the headline was, but it’s incorrect. Our GPUs are immune, not affected by these security issues. What we did was release driver updates to patch the CPU security vulnerability. We’re patching the CPU vulnerability the same way Amazon is going to, the same way SAP is going to, because we have software as well. It has nothing to do with our GPU at all. But our driver is just software. Anybody who has software needs to do patching.

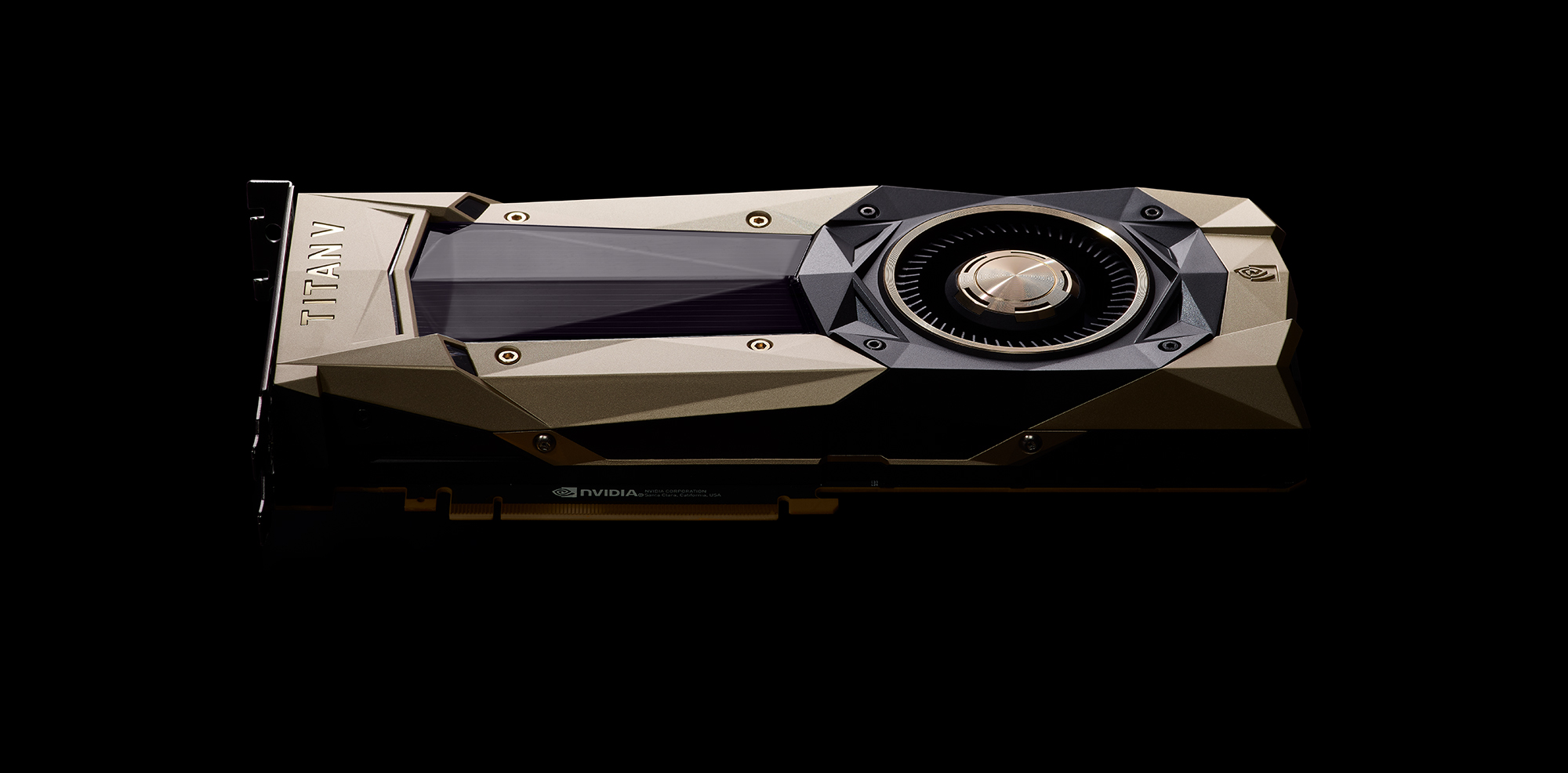

Above: A photo shows Nvidia’s Titan V desktop GPU.

Question: You’re absolutely certain that your GPUs are not vulnerable to these threats?

Huang: I’m absolutely certain our GPU is not affected. At this moment, I’m absolutely certain. The GPU does not need to be patched.

VB: What about the Tegra side, ARM-based CPUs?

Huang: First of all, we don’t ship that many Tegra chips. Most of our Tegra systems related to cars have yet to be shipped. But Shield, for example, is an Android device. Google will probably have to patch something.

Question: Can you talk more about your partnership with BlackBerry and the definition of functional safety?

Huang: In order to achieve functional safety, we have to architect the entire system, from the architecture of our chip, to the design of our chip, to the system software, and then of course the OS. For the OS we chose QNX, which is BlackBerry. BlackBerry bought QNX. QNX is the first OS that’s certified for functional safety, ASIL D level, ISO 26262. It’s a very complicated set of things. It has to do with how they isolate multiple running processes inside the OS. QNX is functionally safe.

TTTech is a company in Vienna. They’ve been in the automotive industry a very long time. They have a middleware, called a framework, that’s time triggered, not event triggered. All of our computers here are event triggered. Time triggered is like a clock. Every operation, every application, can have this much time. Then it has to give up the time, give up the process to another application. It has this much time. It’s a real-time system, a framework for applications. Combined with QNX and all of our software, that makes for the functional safety architecture.
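
Editor's sketch: the time-triggered idea Huang describes can be illustrated with a small simulation. This is not TTTech's framework or QNX's scheduler. The `Slot` class, the `run_time_triggered` helper, the application names, and the millisecond budgets are all hypothetical, chosen only to show that in a time-triggered design each slot starts at an instant fixed by the clock, whatever the applications happen to need.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Slot:
    name: str       # hypothetical application name
    budget_ms: int  # fixed share of every cycle, decided at design time


def run_time_triggered(
    slots: List[Slot], demand_ms: Dict[str, int], cycles: int
) -> List[Tuple[int, str, int]]:
    """Simulate a static, clock-driven schedule on a virtual clock.

    Each cycle, every application receives `demand_ms[name]` of new work.
    It may use at most its budget; unfinished work waits for its next slot.
    Returns (slot_start_ms, name, used_ms) for every slot that ran.
    """
    backlog = {s.name: 0 for s in slots}
    timeline = []
    clock_ms = 0
    for _ in range(cycles):
        for slot in slots:
            backlog[slot.name] += demand_ms[slot.name]
            used = min(backlog[slot.name], slot.budget_ms)
            backlog[slot.name] -= used
            timeline.append((clock_ms, slot.name, used))
            # The clock, not the application, ends the slot: the next
            # start time advances by the full budget even if the slot
            # finished early or still has work left over.
            clock_ms += slot.budget_ms
    return timeline


if __name__ == "__main__":
    slots = [Slot("perception", 20), Slot("planning", 10), Slot("logging", 5)]
    demand = {"perception": 25, "planning": 4, "logging": 5}
    for start, name, used in run_time_triggered(slots, demand, cycles=2):
        print(f"t={start:3d} ms  {name:<10}  used {used} ms")
```

In this toy model the overloaded "perception" application never delays "planning": its extra work simply waits for the next cycle. An event-triggered system, by contrast, runs work when events arrive, so start times depend on the load.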

Question: Is that an open platform?

Huang: Yes, it’s an open platform. People are using it now. All of our developers are using it. Nvidia’s Drive, the whole platform, has Xavier on the bottom. It has our Drive OS inside. It has QNX and TTTech’s technology. Then there’s DriveWorks, which is basically our multimedia and AI layer. Then there’s Drive AV, our self-driving car applications. All of this is tested with Autosim. It’s so complicated.

Self-driving cars are so complicated. That’s why we have several thousand engineers working on it. We have more engineers working on self-driving cars, developing all this end-to-end system, than any company in the world today, I believe. And we have 320 partners, from startups to sensor companies to tier ones to OEMs – buses, trucks, cars.

Question: Can you talk about Drive AV and when your proprietary software applications will be available for production?

Huang: I believe we’ll ship a car with level three capability, if you will, probably something along the lines of early 2020. It has all the functionality of a level four, apart from the functional safety level and the ability for the car to always be in control. And then a level four car will probably be late 2021.

A level five car, a robot taxi, will probably be 2019. I know that’s weird, that level five is earlier, but the reason for that is because level five has a lot more geo-fencing, a lot more sensors. At some level it’s easier to do. Your taxi service is only available in one part of town. You geo-fence it.

Question: Have specific OEMs signed on to use Drive AV?

Huang: Give us a chance to announce it. I’ve told you everything we’ve announced right now. If that’s not enough, well, I haven’t announced that.

Question: Max-Q is great. Intel and Nvidia, there’s always been this dream team when it comes to performance machines. It was surprising when Intel announced its hybrid chip with AMD to create these ultralight performance machines. It seemed like if anyone would do that, it would be Intel and Nvidia. What do you think about that partnership?

Huang: First of all, they didn’t ask me. I would have been delighted to do it. But we didn’t need to do that. I think they needed to do that chip because Max-Q just took the market by storm. Everyone is building Max-Q notebooks. It’s flexible because it supports 1050, 1060, 1070, and 1080. You can have affordable versions. You can have premium versions. Max-Q covers the entire stack already.

I think that Max-Q was just such a surprise and so effective that you would think somebody needs to come up with a response. Maybe that’s their response. I just don’t think it’s a great response. One of the most important things about a gaming computer is the software stack. You have to support it for as long as you live. We support our software stack for as long as we live. We’re constantly, every month, updating it. I’m trying to figure out who’s going to update their software. Once you buy it, I think it’s going to be a brick. It looks good on day one, but I think somebody has to update that software, and I don’t know if it’s Intel or AMD, to tell you the truth.

In our case, we’d rather feel a sense of commitment and a sense of ownership for all the platforms we work on. Any initiative we work on has to cover the entire range, from 1050s to 1080s.