Above: Intel and AMD are teaming up.

Question: Intel didn’t ask you, as you said. Did that affect your relationship at all?

Huang: No, I love working with Intel. We work with Intel all the time, in all kinds of platforms. The two of us are very large companies. Without us working together in all these computing devices, the industry would be worse off. We give them early releases of our stuff. Before we announce anything, we make it available to Intel. We have a professional and cordial working relationship. In the marketplace people like to create a lot more drama than there actually is. I don’t mind it.

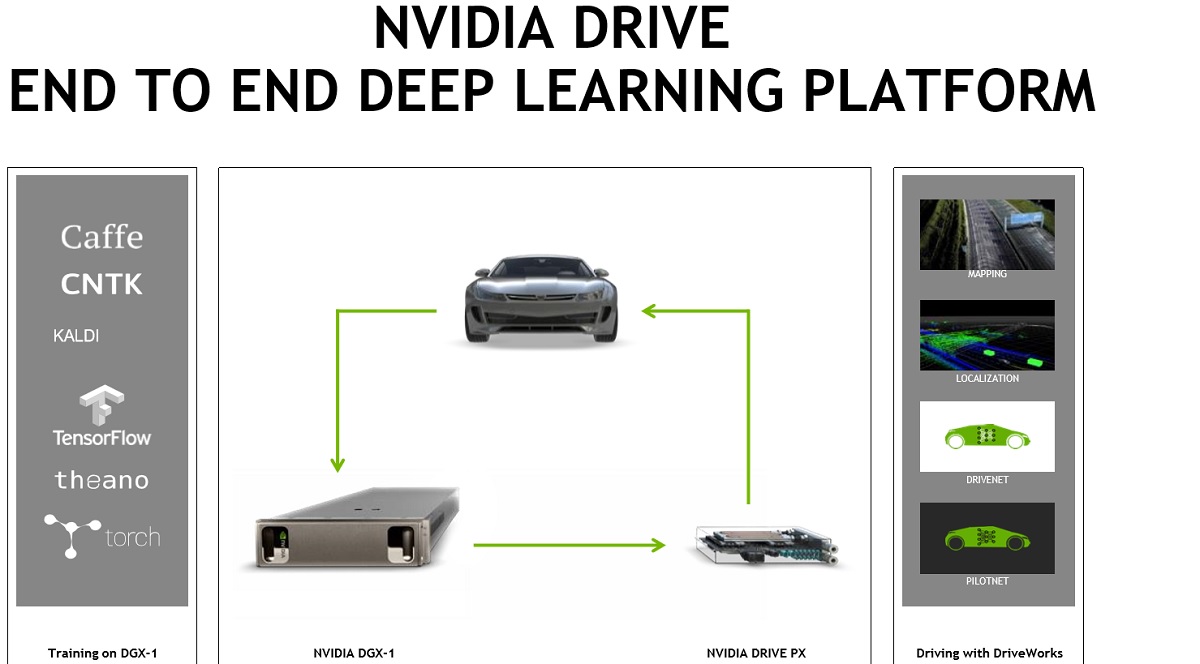

Question: Back to the autonomous car stuff, and to your point about Intel and AMD having to support their chips: the same applies when you think about the replacement cycle of a car versus a PC or a gaming console. You’re talking about a much longer time on the market. How are you thinking about designing these new SoCs and chips for cars when they’re going to be out on the road for a decade plus?

Huang: For as long as we shall live. It’s something we’re very comfortable with. Think about this. The scale of software stacks on these computers is really complicated. By the time we ship the first self-driving car, my estimate would be we would have shipped along the lines of 50,000 engineering years of software. On top of all of the software that Nvidia has written up to now. Imagine 50,000 human years of car software shipping into the self-driving vehicle.

That car is going to have to be maintained, updated, fixed for probably 10 years. The life cycle of a car is about 10 years. Somewhere between six and 10 years. We’ll have to update that software for that long, for all of the OEMs that we support. We can do it because Nvidia has one architecture. We do it for GeForce, every version of it. We do it for Quadro, every version of that. We have one architecture.

Can you imagine how the industry is going to do it otherwise? Every OEM, every tier one, every startup, what are they going to do? Naturally the number of architectures the self-driving car industry can support will narrow, because the amount of software is too great. This happened in the PC industry, in the mobile industry. Wherever there’s a great amount of software, the number of architectures that can survive is reduced, so we can all focus on maintaining and supporting a few things.

At Nvidia we’ll continue doing exactly what we do in all the businesses we do today, which is support our software for as long as we live. That’s one of the things that made Nvidia special. 25 years ago that was not the sensibility. It was update the driver, ship it, move on. We did something different. We continued to maintain and test every version of every GPU against every driver, against every game that comes out over time.

That combination has a massive data center of computers. This data center is not just the state of the art. It has history in it. It has some fun old stuff in there. But we test it for as long as we live.

Question: 50,000 years of code. When do we get to the point where we have AI writing software?

Huang: The 50,000 human years includes a supercomputer, which we call Saturn V, with 460 nodes of one petaflop each. 460 petaflops of AI supercomputer sitting next to all of our engineers currently writing deep learning software with us. You’re absolutely right. I should add on top of that the efforts of this supercomputer that works night and day. It never complains.

VB: The ultimate employee!

Above: Cybersecurity hacking

Question: On security for a bit, I know it’s CPU and not GPU, but I want to get your perspective on the severity of these bugs. How is this going to affect the industry at large? Will chip companies take security more seriously?

Huang: Intel is a much bigger expert on this than I am, but at the highest level, the way to think about it is this: it’s taken this long to find it.

VB: Does that surprise you?

Huang: No. Systems are complicated. I think that it’s a statement about the complexity of the work we all do in the computer industry, the amount of technology that’s now embedded in the computing platforms all around us. It’s a statement that it’s taken this long to find it. Obviously it’s not a simple vulnerability. Obviously a lot of computer scientists were involved in finding it.

In terms of the patch on our side, the time from when we found out about it, about the same time a lot of people did, to the time we patched it was very short. From our perspective it’s not very serious, but for a lot of other people, I can’t assess.

Question: In contrast to what you said about consolidation of architectures in automotive and embedded, it seems like in AI and machine learning and data centers, software frameworks and ways of programming are proliferating. Do you think you can keep developers focused on CUDA and DLA with all these other approaches coming out of Google or Amazon and the like?

Huang: It’s the proliferation of frameworks, the number of networks, the architecture of networks, the application of deep learning. The combinations and permutations I’ve just described are huge. You have about 10 different frameworks now, probably. Some of it is good for computer vision. Some of it is good for natural language. Some of it is good for robotics, manufacturing. Some of it is good for cancer detection. Some of it is good for simple applications. Some of it is good for original, fundamental research.

All of these types of frameworks exist in the world today. They’re used by different companies for different applications. On top of that, the different industries are now adopting them, and on top of that, the architecture of these networks is evolving and changing. CNNs have how many different permutations now? RNNs, LSTMs, GANs? The number of GANs alone, there were probably 50 GANs that came out last year. And they’re getting bigger. AlexNet was, what, eight levels deep? Now we’re looking at 152. It’s only three or four years. That’s way faster than Moore’s Law.

All of this is happening at the same time. That’s why having a foundational architecture like CUDA that remains constant is helpful to the whole industry. You can deploy to your data center, to your cloud, every single cloud. You can put it in your notebook or your PC. It’s all architecturally compatible. All of this just works. That’s really the benefit of having architectural stability. The fewer architectures, the better. That’s my story and I’m sticking to it.

Above: Nvidia’s Drive PX 2 is aimed at self-driving cars.

Question: What about self-flying taxis? What’s your perspective on the opportunity for that?

Huang: You might have seen that at GTC, in Munich, I talked about our work with Airbus. That’s a flying taxi. A flying taxi is going to take the benefits of helicopters—think of it as a 30-50 mile transportation vehicle. It’s perfect for someone who wants to live in the suburbs and commute to the city in about half an hour. I think that flying taxis, an autonomous electric helicopter, is really quite interesting.

We’ve worked with partners. The one that was the most visible was Airbus. They’re very serious about this. This is an area of exploration that makes a lot of sense. As cities get more sprawling, traffic is getting more dense. This could be a great way for people to move to the suburbs and just hop in. It doesn’t cost that much. It’s an EV.

Question: Do you see it as a significant portion of your business in, say, 10 years, though?

Huang: No, I don’t think it’ll be a significant portion of anybody’s business in 10 years. But it’s a form of transportation that we’re looking forward to.