
Nvidia CEO Jensen Huang was back at CES 2018 with his signature black leather jacket. He unveiled the company’s announcements at a press event on Sunday at the big tech trade show in Las Vegas. They included the 9-billion-transistor Xavier artificial intelligence chip, the beta launch of GeForce Now cloud gaming on Windows, and support for big format gaming displays known as BFGD.

Then he returned for a Q&A with the press on Thursday, where he went into an unscripted monologue on what the company is doing in both artificial intelligence and gaming. When he finally stopped talking, the press got a chance to ask him some questions.

Here’s an edited transcript of our interview. I’ve also included three videos of the entire talk, if you prefer to watch it on video.

Above: Jensen Huang started out talking about gaming advances like the BFGD.

Jensen Huang: CES, as you guys know, has changed a lot over the years. It started out being about “consumer electronics.” About seven years ago or so, we invited Audi to CES for the first time. At the time, no German companies had ever come to CES. They said, “But this is consumer electronics.” I told them, “The car is the world’s largest consumer electronics device.” Today it’s a mechanical device with these amazing engines and leather seats and industrial design, but in the future, the car will be packed with computing technology. It’s going to be packed with software. It’s going to be the ultimate consumer electronics device.


I wish all of you guys had seen it, but it was plastered. Audi had come to CES for the first time, to Vegas for the first time. Their executives came to Vegas for the first time. What they saw and the feedback they got was incredible. It was at exactly the right time, I think. Since then, this has become practically an automotive show.

The simple fact is that the fusing of computing technology into consumer electronics and almost everything we own is a trend that’s never going to stop. CES is getting larger and larger simply because computing technology is infusing into our lives more and more.

Here at CES we announced several things this week. I had a keynote. It was so packed with stuff that we couldn’t even include all our important gaming news. I’m going to start with gaming first. As you know, GeForce is the world’s largest game platform. It has 200 million active users, active gamers. It’s the number one gaming platform in a lot of different countries, from China to Russia to Southeast Asia. It’s because GeForce, as you know, works incredibly well. Every game works great on it. The PC is an open, evolving, vibrant platform. It just gets better every day. It doesn’t get better every five years. It gets better every single day.

When technology is moving the way it is, it stands to reason that a platform that can evolve every day would, over the course of time, have a dramatic lead over any platform that has to take a giant step every five years. That’s why GeForce today gives you the best possible gaming experience, if that’s what you like.

It’s also an open platform for different styles of play. A PC can support every single input and output peripheral. It doesn’t matter what it is. Whether it’s a game pad or a keyboard or a mouse or whatever it is, the PC can handle it. It has such rich I/O. Because of where it sits, on your desk, you can enjoy it in any possible way.

As a result of all those different types of I/O and different ways to engage the computer, there are lots of styles of play. You can use it for first-person shooters, for esports, for social gaming. You can share your winning moments, create art, broadcast. The number of different ways you can enjoy games on a PC, the styles of games and how you can enjoy them, continues to evolve.

Now we know that gaming is not only a great game, but a great sport. It’s the largest sport, because it can be any sport. It can be a football game one day and a survivor game another day. PUBG, the latest game this last year, an incredible concept. When you see a great idea, you see it and you think, “Why didn’t I think of that?” It’s so obvious. PUBG is one of those great game ideas.

Not only would it be a great game to play, it’s also – just like Survivor or the Hunger Games – it’s a great game to watch. The number of people watching PUBG is obviously very high. All of a sudden a brand new genre came into gaming, and it did that on PC.

We announced several things. 10 new desktop PCs were created, of all different sizes and shapes, based around GeForce. Three new Max Q notebooks. We took energy efficiency and performance engineering to the extreme with Max Q. From architecture to chip design to system design, voltage, power regulation design, thermal design, software design, we took something that was already energy efficient and squeezed it into a thin notebook. Max Q notebooks are thrilling to look at. Imagine a computer that’s four times the performance of a MacBook Pro, almost twice the performance of the highest-end game console. It fits in your hand like this, in a 20mm notebook that weighs only five pounds. It’s crazy. We took our engineering skills to the limit and we delivered Max Q.

Then we said, “What else can we do to expand the reach of gaming and take the experience to a new level?” We always thought it would be great if we could take PC gaming, which is already amazing, and put it on the one display that we always wished it would be on: the largest display in your house. What would you call the largest display in your house? It’s the BFG display. So we called it BFGD, Big Format Gaming Display. [laughs] That’s what it stands for. It never gets old.

VentureBeat: Right.

Above: GeForce Now turns any PC into a gaming PC.

Huang: BFGD is G-Sync, 120Hz, and so there’s literally no stutter. If it’s running at 120Hz there’s no stutter, of course. You can’t feel it. If there are any changes in framerate, G-Sync kicks in and there’s no stutter. It’s just smooth, low latency, high dynamic range, huge format. I can’t imagine a better way to game. The response from the reviewers and gamers I’ve seen is completely over the top. People just love it. You’re engulfed in this virtual world. Life is good.
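To make the stutter point concrete, here is a minimal Python sketch of frame pacing under two simplified display models. It assumes a 120Hz panel, a game that finishes a frame every 10 ms (100 fps), and no dropped frames. With fixed refresh and vsync, a finished frame waits for the next refresh tick; with variable refresh, the idea behind G-Sync, the panel refreshes as soon as the frame is ready. Real G-Sync panels also have minimum and maximum refresh limits that this toy model ignores.

```python
from fractions import Fraction
import math

# Assumptions for illustration only: a 120 Hz panel, a GPU that finishes a new
# frame every 10 ms (100 fps), and no dropped frames.
REFRESH_PERIOD_MS = Fraction(1000, 120)  # 25/3 ms, about 8.33 ms per refresh


def frame_finish_times(render_ms, count):
    """Times (ms) at which the GPU finishes each of `count` frames."""
    return [Fraction(render_ms) * (i + 1) for i in range(count)]


def fixed_refresh_display_times(finish_times):
    """Fixed refresh with vsync: a finished frame waits for the next refresh tick."""
    return [math.ceil(t / REFRESH_PERIOD_MS) * REFRESH_PERIOD_MS for t in finish_times]


def variable_refresh_display_times(finish_times):
    """Variable refresh: the panel refreshes as soon as a frame is ready."""
    return list(finish_times)


def intervals_ms(display_times):
    """Gaps between consecutive frames on screen, rounded for readability."""
    return [round(float(b - a), 2) for a, b in zip(display_times, display_times[1:])]


finished = frame_finish_times(render_ms=10, count=7)
print("fixed:   ", intervals_ms(fixed_refresh_display_times(finished)))
# fixed:    [8.33, 8.33, 8.33, 8.33, 16.67, 8.33]
print("variable:", intervals_ms(variable_refresh_display_times(finished)))
# variable: [10.0, 10.0, 10.0, 10.0, 10.0, 10.0]
```

Even at a steady 100 fps, the fixed-refresh model puts frames on screen at uneven 8.33 ms and 16.67 ms gaps, which players perceive as stutter; the variable-refresh model shows every frame exactly 10 ms apart.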

We also thought every display and every monitor should be a smart display, a smart monitor. How can you have something that’s electronic that isn’t connected to the internet? That somehow isn’t a streaming device? If it’s a display, it should be a streaming device. We took the Shield computer and we designed it right into the BFGD. You take it out of the box and it’s a wonderful streamer, the best on the planet. It supports Android TV. If you’d like to download games to play, boom, they come from the Android store. If you’d like to stream games from GeForce Now, our cloud gaming service, where supercomputers up in the cloud process all the games and stream them to you, all you have to do is subscribe and enjoy games. Now you can enjoy games from the Android store, from GeForce Now, and from your PC.

We announced all that. We also added another platform to GeForce Now, our supercomputer at Nvidia with a whole bunch of GPUs in it. It’s running games and streaming them to devices that don’t have enough capability to play games natively. The performance is fantastic. It has our latest generation of GPUs. We’ve been supporting the Mac. The response has been incredible. We have a long waiting list. Recently we also opened it up for PC. Long term, we’d like to be able to make it possible for you to have incredible gaming experiences no matter what device you’re on. It could be a phone, a Chrome device, anything. We just want you to be able to enjoy great games, so long as you have a display.

The GeForce side did amazing work. Then we did one more thing, GeForce Experience. We updated GeForce Experience, our software platform that helps you enjoy games even more. The first thing it does is it automatically sets up your computer for all the various configurations and settings, so the moment you launch your game, you’re already enjoying it. But we also make it possible for you to share, so you can capture that amazing moment. It’s running in the background. It’s storing video, recording your gameplay, without affecting your performance. When you have an amazing moment, it’s already been captured to share with all your friends.
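Background capture of the kind described here is commonly built on a fixed-size ring buffer that always holds the most recent few seconds of gameplay, so the clip already exists when you ask to save it. The sketch below assumes that design; it is not GeForce Experience’s actual implementation, and `ReplayBuffer` and its methods are hypothetical names. A real recorder would keep encoded video rather than raw frame objects.

```python
from collections import deque


class ReplayBuffer:
    """Hypothetical rolling capture buffer that keeps only the newest frames.

    Assumes frames arrive at a fixed rate; a real recorder would store
    encoded video rather than raw frame objects.
    """

    def __init__(self, seconds, fps):
        # A deque with maxlen silently drops the oldest item once it is full.
        self._frames = deque(maxlen=seconds * fps)

    def push(self, frame):
        """Called for every rendered frame while the game runs."""
        self._frames.append(frame)

    def save_highlight(self):
        """Return a copy of the last `seconds` of gameplay, oldest first."""
        return list(self._frames)


buf = ReplayBuffer(seconds=2, fps=3)  # tiny numbers so the output is readable
for frame_number in range(10):
    buf.push(frame_number)
print(buf.save_highlight())  # [4, 5, 6, 7, 8, 9]
```

Because `deque(maxlen=...)` discards the oldest entry on each append once full, memory use stays bounded no matter how long the game runs.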

We also made it possible to turn video games into the world’s first virtual camera. You can go in your video game and take amazing pictures of the scenery and the vistas, maybe the moment, and apply different effects to it. We call that Ansel. We have ShadowPlay, which is recording you all the time, we have Ansel, and then we have this new thing we call Freestyle. Now that you’re playing your game, you can even apply special effects to it. Our filters, our image processing, are so powerful now that we can make it possible for you to enhance the imagery, or even change the mood of the imagery. Maybe for Battlefield, you could move it to a look that’s more vintage, more WWII. There are lots of ways we can allow you to enhance imagery. The response to that is incredible. It won Gadget of the Show at CES. It’s not even a gadget. It’s software. But it’s a great gadget as well. People love it.
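Freestyle-style filters are per-pixel post-processing applied to the finished frame. As a rough illustration of the “vintage” look mentioned above, here is a sepia tone filter in plain Python using commonly published sepia coefficients, with a `strength` parameter that blends between the original and filtered color. The coefficients and function names are assumptions for illustration; Nvidia’s actual filters run on the GPU and their parameters are not described in the interview.

```python
# Widely used sepia-tone coefficients; illustrative, not Nvidia's filter values.
SEPIA = (
    (0.393, 0.769, 0.189),  # output red from input (r, g, b)
    (0.349, 0.686, 0.168),  # output green
    (0.272, 0.534, 0.131),  # output blue
)


def clamp8(value):
    """Round and clamp a channel value to the 0..255 range of 8-bit color."""
    return max(0, min(255, round(value)))


def sepia_pixel(pixel, strength=1.0):
    """Blend an (r, g, b) pixel toward its sepia-toned version.

    strength=0.0 leaves the pixel unchanged; 1.0 applies the full effect.
    """
    toned = [sum(c * w for c, w in zip(pixel, row)) for row in SEPIA]
    return tuple(clamp8(o + strength * (t - o)) for o, t in zip(pixel, toned))


def apply_filter(image, strength=1.0):
    """Apply the filter to an image stored as rows of (r, g, b) tuples."""
    return [[sepia_pixel(p, strength) for p in row] for row in image]


frame = [[(100, 100, 100), (255, 255, 255)]]  # a tiny 1x2 "frame"
print(apply_filter(frame))       # [[(135, 120, 94), (255, 255, 239)]]
print(apply_filter(frame, 0.0))  # [[(100, 100, 100), (255, 255, 255)]]
```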

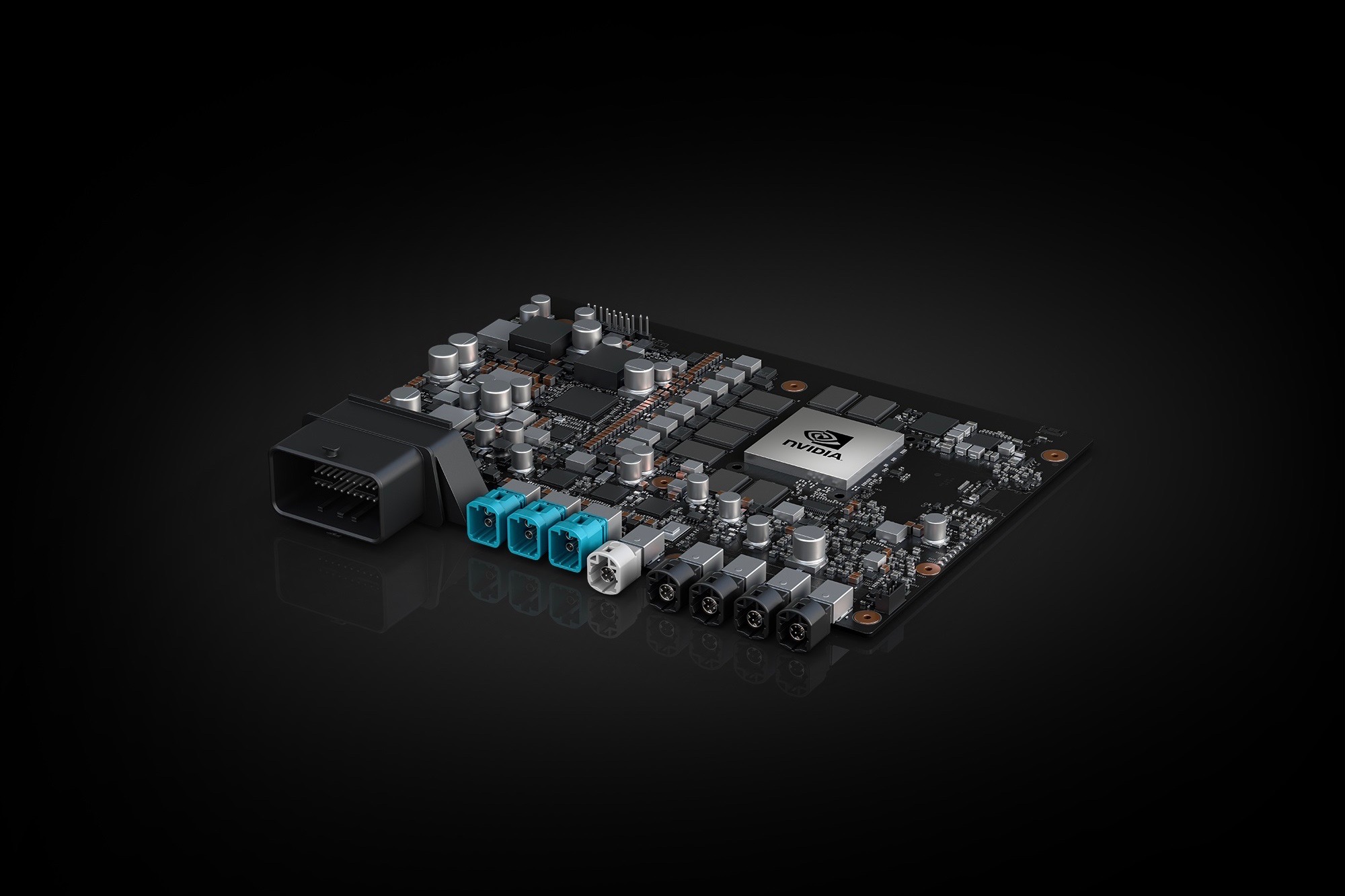

Above: Nvidia’s new Xavier system-on-a-chip.

That’s gaming. On the other side, we talked about the largest consumer electronics device, cars. We announced several things. We announced Xavier, the most complex SOC the world has ever seen. 2,000 Nvidia engineers have worked on this incredible chip for three years already, and there’s another year to go. This is the largest SOC the world’s ever made, 9 billion transistors. Think about all the processors inside. It has an eight-core custom CPU. We call it Carmel. ARM64. Level one, level two, level three cache, all ECC, all parity. Designed for resilience. Server-class CPU.

It has the Volta GPU in it, with the brand new tensor core instructions. It has a brand new deep learning accelerator to complement our GPU, called DLA. 10 teraflops of performance. It has a brand new computer vision processor called PVA, so we can process stereo disparity, the parallax between your cameras, to extract information like depth. Stereo disparity and optical flow, how pixels are moving from frame to frame. It has a brand new ISP, an image signal processor, that allows us to process 1.5 gigapixels per second, so all of the cameras and sensors around your car can be in full high dynamic range. It has a brand new video processor that does encoding and decoding at a level where every single camera around your car can be processed and recorded, like a black box, while performing optical flow processing on it.
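The depth information extracted from stereo disparity rests on standard stereo geometry: for a rectified camera pair with focal length f (in pixels) and baseline B (the distance between the cameras), a feature that shifts d pixels between the left and right images lies at depth Z = f × B / d. The sketch below applies that textbook formula with hypothetical camera parameters; it says nothing about how PVA itself computes disparity.

```python
import math


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo camera pair (pinhole model).

    disparity_px: horizontal shift of a feature between left and right images
    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centers, in meters
    """
    if disparity_px < 0:
        raise ValueError("disparity must be non-negative")
    if disparity_px == 0:
        return math.inf  # no parallax: the point is effectively at infinity
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 1200 px focal length, 25 cm baseline.
for d in (40, 20, 10):
    print(d, "px ->", depth_from_disparity(d, focal_px=1200, baseline_m=0.25), "m")
# 40 px -> 7.5 m
# 20 px -> 15.0 m
# 10 px -> 30.0 m
```

Depth is inversely proportional to disparity, so distant objects produce small disparities, and a one-pixel matching error costs far more accuracy at 30 m than at 7.5 m.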

Did I get it all? It’s a crazy number of new processors. The entire processing pipeline of a self-driving car, from image processing, sensor calibration, perception, localization, and pathfinding: every single line of code of the entire self-driving car stack can run on Xavier. A one-chip self-driving car. We took all of the processing that was needed from our Drive PX 2, a four-chip Pascal solution, and we compressed it into one. 9 billion transistors, 12nm FinFET. Silicon is back. It’s working great. I showed it driving a car on stage.

Above: Nvidia’s BFGD could make big screens as fast as the newest monitors.

We have 320 developers, from tier one OEMs to startups to taxi service companies to mapping companies to research organizations, that are dying to get their hands on Xavier. We’ll start sampling it this month. That’s our first announcement.

We also announced–the single most important feature of a self-driving car is safety. Safety is not just about trying really hard and being really careful. You have to design technology that makes it possible for a computer to be safe. If you haven’t had a chance to think about safety, this is a fun area. Over the last two or three years, I’ve dedicated myself to this field. I’ve learned a lot. We changed the architecture and infused safety technology into our drive stack, from the architecture through the chip design, through all of the software, through all of the algorithms, into the system, into the cloud.

You have to solve three fundamental problems. In order to achieve functional safety, number one, you have to have safety of the intended functionality. It’s called SOTIF. Whatever you decide is the intended outcome, you have to somehow validate that you’re designing something according to that. Number two, it has to be resilient to hard failures, hard faults. If a wire were to break, if a wire on a chip goes open-circuit, if a solder joint wears out, that’s a hard failure, and it has to be resilient to that. And number three, it has to be resilient to soft failures. It could be a noise glitch. The temperature’s too high. The memory forgot a bit. We got hit by an alpha particle somewhere. All of a sudden we have a soft failure.
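“The memory forgot a bit” is exactly the kind of soft error that error-correcting codes, like the ECC Huang mentions for Xavier’s caches, are designed to absorb. The smallest textbook example is a Hamming(7,4) code, sketched below in Python: four data bits get three parity bits, and the recomputed parity (the syndrome) points at the position of any single flipped bit so it can be corrected. Production memory ECC typically uses wider SECDED codes; this only illustrates the principle and is not Xavier’s scheme.

```python
def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] as a 7-bit codeword.

    Codeword positions 1..7 hold [p1, p2, d1, p4, d2, d3, d4]; each parity
    bit covers the positions whose 1-based index has that bit set.
    """
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit; return (data_bits, syndrome).

    The syndrome is the 1-based position of the flipped bit, or 0 if all
    parity checks pass.
    """
    c = list(codeword)  # work on a copy
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # recheck positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # recheck positions 2, 3, 6, 7
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]  # recheck positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s4
    if syndrome:
        c[syndrome - 1] ^= 1  # flip the bad bit back
    return [c[2], c[4], c[5], c[6]], syndrome


data = [1, 0, 1, 1]
codeword = hamming74_encode(data)   # [0, 1, 1, 0, 0, 1, 1]
corrupted = list(codeword)
corrupted[4] ^= 1                   # a soft error flips position 5
print(hamming74_decode(corrupted))  # ([1, 0, 1, 1], 5)
```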

These three fundamental failure types – functional failure type, otherwise known as systematic failure, hard failure, and soft failure – the architecture in totality has to be able to deal with them. In the final analysis, what you’re looking for is the ability to have redundancy and diversity. Everything you do has to have backups. Everything you back up with has to be different from what you backed up originally. Another way of saying it is, I need to be able to do computer vision in multiple ways. I need to do localization in multiple ways. I need to sense my environment in multiple ways. Not just many ways, not just more than one way. It has to be redundant and diverse.
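One common way to combine redundancy with diversity is a two-out-of-three voter: three channels built with different sensors or algorithms produce the same estimate, the median is used, and a lack of agreement is treated as a fault. The Python sketch below is a generic illustration under those assumptions; the channel names, tolerance, and fallback behavior are hypothetical and not a description of Nvidia’s Drive stack.

```python
def vote_2oo3(estimates, tolerance):
    """Two-out-of-three voter over three independent, diverse channels.

    Returns (value, agreed):
      value  - the median estimate, which masks any single faulty channel
      agreed - True if at least two channels agree within `tolerance`;
               False means the system should fall back to a safe state
    """
    if len(estimates) != 3:
        raise ValueError("expected exactly three channel estimates")
    low, mid, high = sorted(estimates)
    # If any pair agrees, an adjacent pair in sorted order agrees too.
    agreed = (mid - low) <= tolerance or (high - mid) <= tolerance
    return mid, agreed


# Hypothetical distance-to-obstacle estimates (meters) from three diverse
# channels, e.g. a camera network, radar tracking, and lidar clustering.
print(vote_2oo3([42.1, 41.8, 120.0], tolerance=1.0))  # (42.1, True): faulty channel outvoted
print(vote_2oo3([10.0, 20.0, 30.0], tolerance=1.0))   # (20.0, False): no agreement
```

Diversity is what makes the vote meaningful: if all three channels shared the same algorithm, a single systematic bug could make them agree on the same wrong answer.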

We’re going to design future cars the way people design airplanes. Except we have to use so much technology and ingenuity to reduce their cost and their form factor. We can’t afford to have a jet plane. That would be great if we could. It would be the brute force way. But unfortunately the cost is too high for society to bear. We have to apply all kinds of technology and innovation to do that. We announced that the Nvidia drive stack will be the world’s first top-to-bottom functionally safe drive stack, ISO 26262, with full functional safety. It’s a big achievement.

Above: Nvidia CEO Jensen Huang at CES 2018.

We also talked about a method for achieving SOTIF, safety of the intended functionality. The way to do that is simulation. The best way to know whether something works is to simulate it. We can drive thousands of miles per car a week. A fleet of cars would have hundreds of thousands of miles per week. But society drives trillions of miles per year. 15 trillion miles per year is how far we all drive around the world. We’d like to have some percentage representation of that, and the only way to do that is through simulation.
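A back-of-the-envelope calculation shows why physical driving alone can’t get there. Assuming, for illustration, a fleet that logs 300,000 miles a week (within the “hundreds of thousands” Huang cites), compared against the 15 trillion miles driven worldwide each year:

```latex
\[
\frac{3\times10^{5}\ \tfrac{\text{miles}}{\text{week}} \times 52\ \tfrac{\text{weeks}}{\text{year}}}
     {1.5\times10^{13}\ \tfrac{\text{miles}}{\text{year}}}
= \frac{1.56\times10^{7}}{1.5\times10^{13}}
\approx 1.04\times10^{-6}
\]
```

That is roughly one millionth of the world’s annual driving; representing even 0.1 percent would take about a thousand times more miles, which is the gap simulation is meant to close.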

We created a parallel universe, an alternate world, a virtual reality where we would have virtual reality cars driving in virtual reality cities. Each one of the cars would have an artificial intelligence, the entire stack of Drive inside every single car. Our AI stack, Drive, will be in all these VR cars, driving in VR cities, running on a huge supercomputer with many versions of those VR universes. We’re going to have thousands of parallel universes where all these VR cars are driving around, and as a result, we can test a lot more miles in VR than we could physically. Of course, in addition, we’ll also drive the cars. We have a large fleet of cars. We’ll be testing our software that way as well.

It’s called Autosim. It’s a bit like the movie Inception. We have VR. We have AR. We have AI. They’re all embedded inside. It’s a bit of a brain-teaser, but once you put on the HMD, you’ll feel exactly what it is. All of a sudden, using VR, we’ve fused our world with that virtual world. We’ve wormholed into that virtual world. Within that virtual world, you can see everything, and it works just fine.

We also announced several partnerships. We’ll be working with Uber to create their fleet of self-driving taxis. We announced that the hottest startup in self-driving cars today, Aurora, Chris Urmson’s company–they partnered with Volkswagen and Hyundai to create self-driving cars, and we’re partnering with Aurora to make that possible. We’re partnering with VW to infuse AI into their next-generation fleet of cars. It’s AI for self-driving and it’s AI for the cockpit. Your future car will basically become an AI agent. We announced Mercedes yesterday. Mercedes’ next-generation fleet of cars will have Nvidia AI in their digital cockpits.

Above: Jensen Huang, CEO of Nvidia, talks about gaming at CES 2018.

VB: Early on, you said that CPUs are really not good for AI, and that the GPU was much better, more naturally good at it. But it seems like with the latest design–it’s a 9 billion transistor chip. Last year’s was 15 billion. You’re almost getting away from the GPU to get ground-up AI chips. Is that a turning point? First CPUs weren’t as good at this, but now you’re going into AI chips that are fundamentally good at AI.

Huang: The initial proposition is that CPUs aren’t as good at AI, and I’m not going to disagree with that. I would agree that CPUs aren’t good for very high throughput parallel computing of almost anything. We did a lot of AI work on our GPUs. And then, now, in this new SOC, we actually have a specific accelerator dedicated to deep learning as well. Out of our Xavier, there are 30 trillion operations per second, which is basically four Pascal chips. It’s a combination of 16-bit floating point and 8-bit integers. Of the 30 trillion, 20 of it is in the GPU and 10 of it is in the specialized accelerator, a special processor like a TPU.

Does that mean there are new ways of designing deep learning and AI? You can see that we chose 20/10 for a specific reason. There are some networks that we know are going to be relatively well-known. Therefore, we can simplify the design even further. DLA is about, at the core level, 33 percent more energy-efficient than Cuda. If I design something that’s very limiting, very specialized, I can get another third of energy efficiency at the core level. At the chip level, when you add the overhead of the I/Os and all the stuff that’s needed to keep the chip going, you reduce that 33 percent by some. Call it maybe another third. You don’t get the full third. But the benefit is that if you know what you want to run, like CNNs for some types of networks, where you know you don’t need the precision, we can put that into our DLA.
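One reading of the arithmetic here: if the DLA is about 33 percent more energy-efficient at the core level, and chip-level overhead eats roughly a third of that advantage, the net chip-level gain is closer to 22 percent. This interpretation of “reduce that 33 percent by some, call it maybe another third” is an assumption:

```latex
\[
\underbrace{33\%}_{\text{core-level gain}} \times \left(1 - \tfrac{1}{3}\right) \approx 22\%\ \text{(chip-level gain)}
\]
```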

Unfortunately, there are so many different types of networks. There are CNNs, RNNs, networks where I don’t want to reduce the precision from 32-bit floating point. I want the flexibility that goes along with all these different networks. I want to run that on Cuda. I think the answer is, we believe deep learning can have the benefit of both. The incremental savings is likely to be less and less over time, because tensor core is becoming so efficient. But nonetheless, there’s some benefit to be had there. Both of them are much better than CPUs.
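The split Huang describes, fixed-function hardware for well-understood networks that tolerate reduced precision and programmable Cuda cores for everything else, can be pictured as a per-layer placement rule. The Python sketch below is entirely hypothetical: the layer kinds, precision sets, and `place` function are illustrative assumptions, not Nvidia’s tooling or the DLA’s actual supported-layer list.

```python
from dataclasses import dataclass

# Hypothetical capabilities of a fixed-function deep learning accelerator.
# Real DLA layer and precision support differs by chip and software release.
ACCEL_LAYER_KINDS = {"conv", "pool", "relu", "batchnorm"}
ACCEL_PRECISIONS = {"int8", "fp16"}
PRECISIONS = ["int8", "fp16", "fp32"]  # ordered from lowest to highest


@dataclass
class Layer:
    name: str
    kind: str
    lowest_ok_precision: str  # the least precision this layer can tolerate


def place(layer):
    """Return (device, precision) for one layer.

    Known layer kinds that tolerate reduced precision go to the accelerator
    at the lowest acceptable precision; everything else stays on the GPU.
    """
    floor = PRECISIONS.index(layer.lowest_ok_precision)
    if layer.kind in ACCEL_LAYER_KINDS:
        for precision in PRECISIONS[floor:]:
            if precision in ACCEL_PRECISIONS:
                return ("accelerator", precision)
    return ("gpu", layer.lowest_ok_precision)


network = [
    Layer("conv1", "conv", "int8"),
    Layer("conv2", "conv", "fp16"),
    Layer("lstm1", "lstm", "fp32"),  # recurrent layer: kept on the GPU
    Layer("head", "conv", "fp32"),   # needs full precision: kept on the GPU
]
for layer in network:
    print(layer.name, place(layer))
```

The design choice mirrors the trade-off in the interview: the accelerator only wins when the workload is known in advance, so anything unusual or precision-sensitive falls back to the flexible path.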

Question: Related to security concerns, I want our readers to be clear about what you’re saying on Spectre issues with GPUs. Are there concerns with your product, GPUs, around the Spectre and Meltdown array of issues? Is that what you’re fixing?

Huang: This morning there was some news that incorrectly reported—I forget exactly what the headline was, but it’s incorrect. Our GPUs are immune, not affected by these security issues. What we did was we released driver updates to patch the CPU security vulnerability. We’re patching the CPU vulnerability same way Amazon is going to, the same way SAP is going to, because we have software as well. It has nothing to do with our GPU at all. But our driver is just software. Anybody who has software needs to do patching.

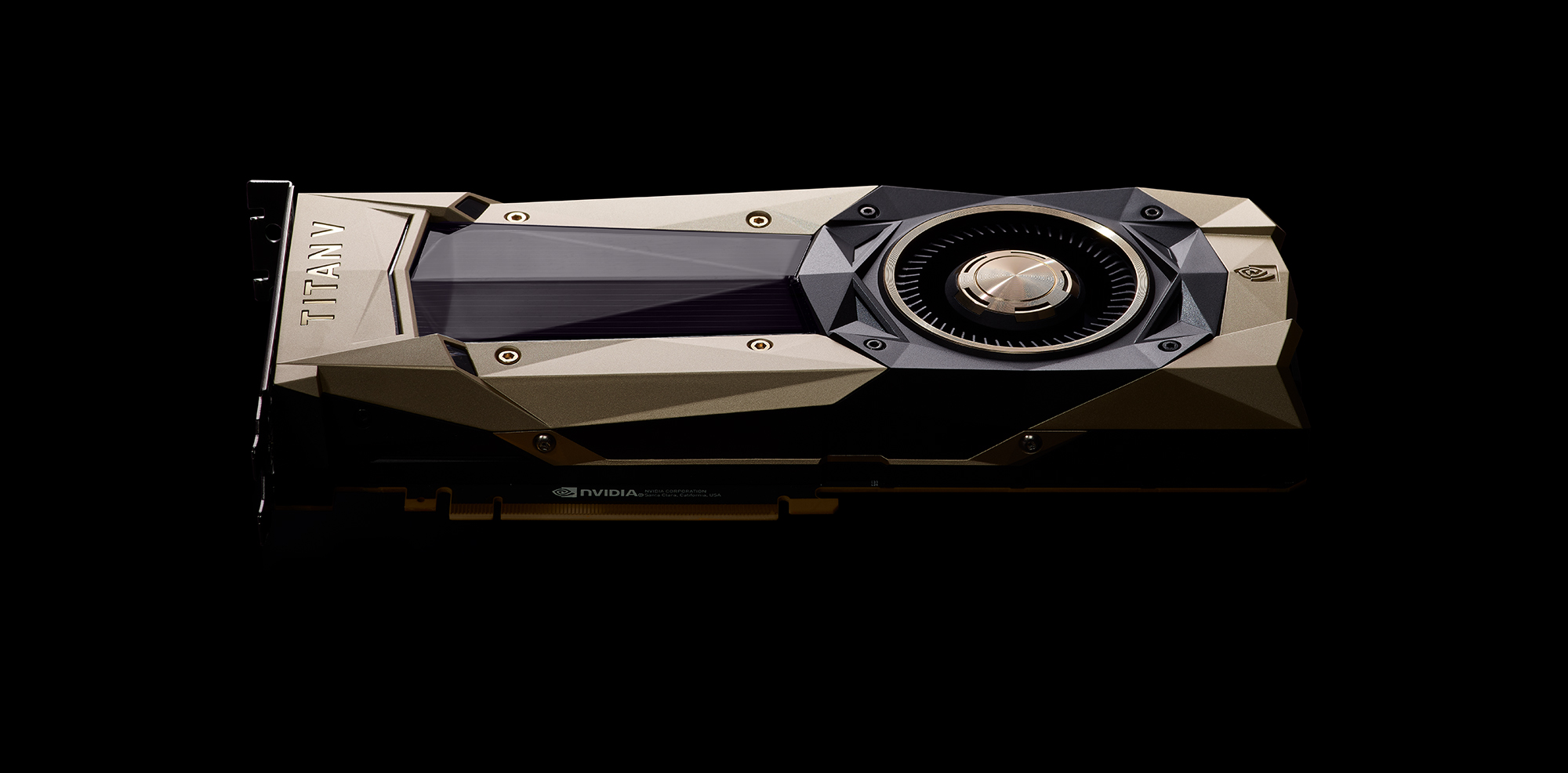

Above: A photo shows Nvidia’s Titan V desktop GPU.

Question: You’re absolutely certain that your GPUs are not vulnerable to these threats?

Huang: I’m absolutely certain our GPU is not affected. At this moment, I’m absolutely certain. The GPU does not need to be patched.

VB: What about the Tegra side, ARM-based CPUs?

Huang: First of all, we don’t ship that many Tegra chips. Most of our Tegra systems related to cars have yet to be shipped. But for Shield, for example, that’s an Android. Google will probably have to patch something.

Question: Can you talk more about your partnership with Blackberry and the definition of functional safety?

Huang: In order to achieve functional safety, we have to architect the entire system, from the architecture of our chip, to the design of our chip, to the system software, and then of course the OS. For the OS we chose QNX, which is Blackberry. Blackberry bought QNX. QNX is the first OS that’s certified for functional safety, ASIL D level, ISO 26262. It’s a very complicated set of things. It has to do with how they isolate multiple running processes inside the OS. QNX is functionally safe.

TTTech is a company in Vienna. They’ve been in the automotive industry a very long time. They have a middleware, called a framework, that’s time triggered, not event triggered. All of our computers here are event triggered. Time triggered is like a clock. Every operation, every application, gets this much time. Then it has to give up the time, give up the processor to another application, which gets its own fixed slice of time. It’s a real-time system, a framework for applications. Combined with QNX and all of our software, that makes up the functional safety architecture.
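A time-triggered framework fixes ahead of time when each application runs and for how long, instead of reacting to events. The Python sketch below models that idea as a static schedule table that is validated offline and then replayed every cycle on a logical clock. It is a simplified, hypothetical illustration: the task names, budgets, and 10 ms cycle are assumptions, it is not TTTech’s API, and a real time-triggered system would preempt a task at the end of its slot rather than merely flag the overrun.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Slot:
    start_ms: int            # fixed offset inside the repeating cycle
    budget_ms: int           # time the task is allowed to use
    name: str
    task: Callable[[], int]  # hypothetical: returns the task's run time in ms


def build_schedule(slots: List[Slot], cycle_ms: int) -> List[Slot]:
    """Check the static table offline: no overlaps, and it fits in one cycle."""
    ordered = sorted(slots, key=lambda s: s.start_ms)
    end = 0
    for slot in ordered:
        if slot.start_ms < end:
            raise ValueError(f"slot {slot.name!r} overlaps the previous slot")
        end = slot.start_ms + slot.budget_ms
    if end > cycle_ms:
        raise ValueError("schedule does not fit in one cycle")
    return ordered


def run(schedule: List[Slot], cycle_ms: int, cycles: int) -> List[Tuple[int, str, str]]:
    """Simulate cycles on a logical clock: each task starts at its fixed time
    whether or not any event occurred; budget overruns are only flagged."""
    log = []
    for cycle in range(cycles):
        for slot in schedule:
            used = slot.task()
            status = "ok" if used <= slot.budget_ms else "overrun"
            log.append((cycle * cycle_ms + slot.start_ms, slot.name, status))
    return log


CYCLE_MS = 10
schedule = build_schedule(
    [
        Slot(0, 4, "perception", lambda: 3),
        Slot(4, 3, "planning", lambda: 5),  # deliberately exceeds its budget
        Slot(7, 2, "control", lambda: 1),
    ],
    CYCLE_MS,
)
for entry in run(schedule, CYCLE_MS, cycles=2):
    print(entry)
```

Because the whole timetable is known before the system runs, it can be checked offline for overlaps and overruns, which is much of the appeal of time triggering for safety certification.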

Question: Is that an open platform?

Huang: Yes, it’s an open platform. People are using it now. All of our developers are using it. Nvidia’s Drive, the whole platform, has Xavier on the bottom. It has our Drive OS inside. It has QNX and TTTech’s technology. Then there’s DriveWorks, which is basically our multimedia and AI layer. Then there’s Drive AV, our self-driving car applications. All of this is tested with Autosim. It’s so complicated.

Self-driving cars are so complicated. That’s why we have several thousand engineers working on it. We have more engineers working on self-driving cars, developing all this end-to-end system, than any company in the world today, I believe. And we have 320 partners, from startups to sensor companies to tier ones to OEMs – buses, trucks, cars.

Question: Can you talk about Drive AV and when your proprietary software applications will be available for production?

Huang: I believe we will achieve–we’ll ship a car with level three capability, if you will—it has all the functionalities of a level four, but the functional safety level and the ability for the car to always be in control will probably be something along the lines of early 2020. And then a level four car will probably be late 2021.

A level five car, a robot taxi, will probably be 2019. I know that’s weird, that level five is earlier, but the reason for that is because level five has a lot more geo-fencing, a lot more sensors. At some level it’s easier to do. Your taxi service is only available in one part of town. You geo-fence it.
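Geo-fencing at its simplest means checking whether a location falls inside a service-area polygon. Below is a minimal Python sketch using the standard ray-casting point-in-polygon test, with a hypothetical L-shaped service area on a local kilometer grid. Treating coordinates as planar is an assumption that only holds for a small area; a production system would work with geodetic coordinates and map data.

```python
def inside_geofence(point, polygon):
    """Ray-casting point-in-polygon test.

    Counts how many polygon edges a horizontal ray from `point` crosses;
    an odd count means the point is inside. Coordinates are treated as
    planar, a reasonable approximation only for a small area.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def accept_ride(pickup, dropoff, service_area):
    """A geo-fenced robotaxi only takes trips that start and end in its area."""
    return inside_geofence(pickup, service_area) and inside_geofence(dropoff, service_area)


# Hypothetical L-shaped service area on a local grid, in kilometers.
SERVICE_AREA = [(0, 0), (6, 0), (6, 2), (2, 2), (2, 6), (0, 6)]
print(accept_ride((1, 5), (5, 1), SERVICE_AREA))  # True: both points inside
print(accept_ride((1, 5), (4, 4), SERVICE_AREA))  # False: (4, 4) is in the notch
```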

Question: Have specific OEMs signed on to use Drive AV?

Huang: Give us a chance to announce it. I’ve told you everything we’ve announced right now. If that’s not enough, well, I haven’t announced that.

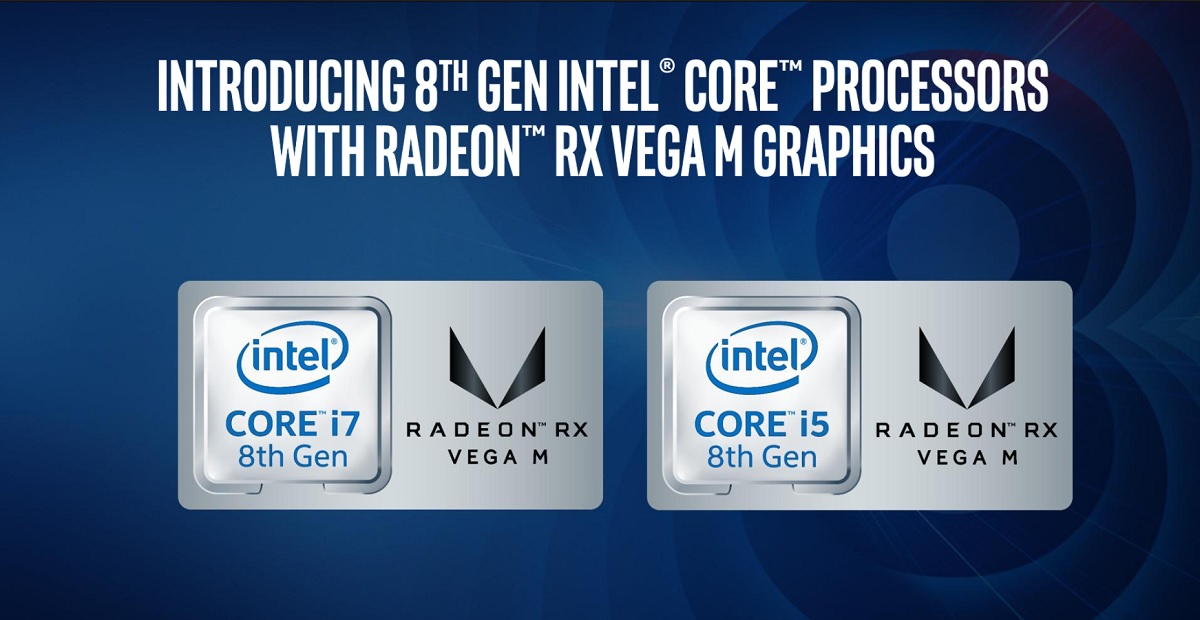

Question: Max Q is great. Intel and Nvidia, there’s always been this dream team when it comes to performance machines. It was surprising to find, when Intel announced its hybrid chip with AMD to create these ultralight performance machines—it seemed like if anyone would do that, it would be Intel and Nvidia. What do you think about that partnership?

Huang: First of all, they didn’t ask me. I would have been delighted to do it. But we didn’t need to do that. I think they needed to do that chip because Max Q just took the market by storm. Everyone is building Max Q notebooks. It’s flexible because it supports 1050, 1060, 1070, and 1080. You can have affordable versions. You can have premium versions. Max Q covers the entire stack already.

I think that Max Q was just such a surprise and so effective that—you would think somebody needs to come up with a response. Maybe that’s their response. I just don’t think it’s a great response. One of the most important things about a gaming computer is the software stack. You have to support it for as long as you live. We support our software stack for as long as we live. We’re constantly, every month, updating it. I’m trying to figure out who’s going to update their software. Once you buy it, I think it’s going to be a brick. It looks good on day one, but I think somebody has to update that software, and I don’t know if it’s Intel or AMD, to tell you the truth.

In our case, we’d rather feel a sense of commitment and a sense of ownership for all the platforms we work on. Any initiative we work on has to cover the entire range, from 1050s to 1080s.

Above: Intel and AMD are teaming up.

Question: Intel didn’t ask you, as you said. Did that affect your relationship at all?

Huang: No, I love working with Intel. We work with Intel all the time, in all kinds of platforms. The two of us are very large companies. Without us working together in all these computing devices, the industry would be worse off. We give them early releases of our stuff. Before we announce anything, we make it available to Intel. We have a professional and cordial working relationship. In the marketplace people like to create a lot more drama than there actually is. I don’t mind it.

Question: Back to the autonomous car stuff, as you’re thinking about—to your point about Intel and AMD having to support their chips, same thing when you think about the replacement cycle of a car versus a PC or a gaming console. You’re talking about a much longer time on the market. How are you thinking about designing these new SOCs and chips for cars when they’re going to be out on the road for a decade plus?

Huang: For as long as we shall live. It’s something we’re very comfortable with. Think about this. The scale of software stacks on these computers is really complicated. By the time we ship the first self-driving car, my estimate would be we would have shipped along the lines of 50,000 engineering years of software. On top of all of the software that Nvidia has written up to now. Imagine 50,000 human years of car software shipping into the self-driving vehicle.

That car is going to have to be maintained, updated, fixed for probably 10 years. The life cycle of a car is about 10 years. Somewhere between six and 10 years. We’ll have to update that software for that long, for all of the OEMs that we support. We can do it because Nvidia has one architecture. We do it for GeForce, every version of it. We do it for Quadro, every version of that. We have one architecture.

Can you imagine how the industry is going to do it otherwise? Every OEM, every tier one, every startup, what are they going to do? Naturally the number of architectures the self-driving car industry can support will narrow, because the amount of software is too great. This happened in the PC industry, in the mobile industry. Wherever there’s a great amount of software, the number of architectures that can survive is reduced, so we can all focus on maintaining and supporting a few things.

At Nvidia we’ll continue doing exactly what we do in all the businesses we do today, which is support our software for as long as we live. That’s one of the things that made Nvidia special. 25 years ago that was not the sensibility. It was update the driver, ship it, move on. We did something different. We continued to maintain and test every version of every GPU against every driver, against every game that comes out over time.

Testing that combination takes a massive data center of computers. This data center is not just state of the art. It has history in it. It has some fun old stuff in there. But we test it for as long as we live.
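The testing burden Huang describes grows multiplicatively: every GPU, times every driver, times every game. The Python sketch below enumerates such a matrix with `itertools.product`; the product names and the `run_test` placeholder (with one deliberately failing combination) are hypothetical.

```python
from itertools import product

gpus = ["GPU-A", "GPU-B", "GPU-C"]                 # hypothetical product names
drivers = ["driver-1", "driver-2"]                 # hypothetical driver releases
games = ["game-x", "game-y", "game-z", "game-w"]   # hypothetical game titles


def run_test(gpu, driver, game):
    """Placeholder for launching a game on a test machine (hypothetical).

    Pretends exactly one combination has a regression.
    """
    return not (gpu == "GPU-A" and driver == "driver-2" and game == "game-y")


matrix = list(product(gpus, drivers, games))
failures = [combo for combo in matrix if not run_test(*combo)]
print(len(matrix))  # 24
print(failures)     # [('GPU-A', 'driver-2', 'game-y')]
```

With these toy lists the matrix already has 24 runs, and each new driver release adds one run for every GPU and game pair, which is why this kind of regression testing calls for a data center.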

Question: 50,000 years of code. When do we get to the point where we have AI writing software?

Huang: The 50,000 human years includes a supercomputer, which we call Saturn V, with 460 nodes of one petaflop each. 460 petaflops of AI supercomputer sitting next to all of our engineers currently writing deep learning software with us. You’re absolutely right. I should add on top of that the efforts of this supercomputer that works night and day. It never complains.

VB: The ultimate employee!

Above: Cybersecurity hacking

Question: On security for a bit, I know it’s CPU and not GPU, but I want to get your perspective on the severity of these bugs. How is this going to affect the industry at large? Will chip companies take security more seriously?

Huang: Intel is a much bigger expert on this than I am, but at the highest level, the way to think about it is this: it’s taken this long to find it.

VB: Does that surprise you?

Huang: No. Systems are complicated. I think that it’s a statement about the complexity of the work we all do in the computer industry, the amount of technology that’s now embedded in the computing platforms all around us. It’s a statement that it’s taken this long to find it. Obviously it’s not a simple vulnerability. Obviously a lot of computer scientists were involved in finding it.

In terms of the patch to us, you can tell that the time from when we found out about it, about the same time a lot of people did, to when we patched it was very short. From our perspective it’s not very serious, but for a lot of other people, I can’t assess.

Question: In contrast to what you said about consolidation of architectures in automotive and embedded, it seems like in AI and machine learning and data centers, software frameworks and ways of programming are proliferating. Do you think you can keep developers focused on Cuda and DLA with all these other approaches coming out of Google or Amazon and the like?

Huang: It’s the proliferation of frameworks, the number of networks, the architecture of networks, the application of deep learning–the combinations and permutations I’ve just described are huge. You have about 10 different frameworks now, probably. Some of it is good for computer vision. Some of it is good for natural language. Some of it is good for robotics, manufacturing. Some of it is good for cancer detection. Some of it is good for simple applications. Some of it is good for original, fundamental research.

All of these types of frameworks exist in the world today. They’re used by different companies for different applications. On top of that, the different industries are now adopting them, and on top of that, the architecture of these networks is evolving and changing. CNNs have how many different permutations now? RNNs, LSTMs, GANs? The number of GANs alone, there were probably 50 GANs that came out last year. And they’re getting bigger. AlexNet was, what, eight layers deep? Now we’re looking at 152. It’s only three or four years. That’s way faster than Moore’s Law.
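To put rough numbers on “way faster than Moore’s Law”: AlexNet (2012) had 8 learned layers and ResNet-152 (2015) has 152. Assuming a three-year gap and a Moore’s Law doubling period of about two years (both simplifications, and layer count is not the same kind of quantity as transistor count):

```latex
\[
\frac{152}{8} = 19,\qquad \log_2 19 \approx 4.25 \text{ doublings in about 3 years}
\;\Rightarrow\; \text{one doubling every } \approx \frac{3}{4.25} \approx 0.7 \text{ years}
\]
\[
\text{Moore's Law over the same 3 years: } 2^{3/2} \approx 2.8\times
\quad\text{versus}\quad 19\times
\]
```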

All of this is happening at the same time. That’s why having a foundational architecture like Cuda that remains constant is helpful to the whole industry. You can deploy to your data center, to your cloud, every single cloud. You can put it in your notebook or your PC. It’s all architecturally compatible. All of this just works. That’s really the benefit of having architectural stability. The fewer architectures, the better. That’s my story and I’m sticking to it.

Above: Nvidia’s Drive PX 2 is aimed at self-driving cars.

Question: What about self-flying taxis? What’s your perspective on the opportunity for that?

Huang: You might have seen that at GTC, in Munich, I talked about our work with Airbus. That’s a flying taxi. A flying taxi is going to take the benefits of helicopters—think of it as a 30-50 mile transportation vehicle. It’s perfect for someone who wants to live in the suburbs and commute to the city in about half an hour. I think that flying taxis, an autonomous electric helicopter, is really quite interesting.

We’ve worked with partners. The one that was the most visible was Airbus. They’re very serious about this. This is an area of exploration that makes a lot of sense. As cities get more sprawling, traffic is getting more dense. This could be a great way for people to move to the suburbs and just hop in. It doesn’t cost that much. It’s an EV.

Question: Do you see it as a significant portion of your business in, say, 10 years, though?

Huang: No, I don’t think it’ll be a significant portion of anybody’s business in 10 years. But it’s a form of transportation that we’re looking forward to.