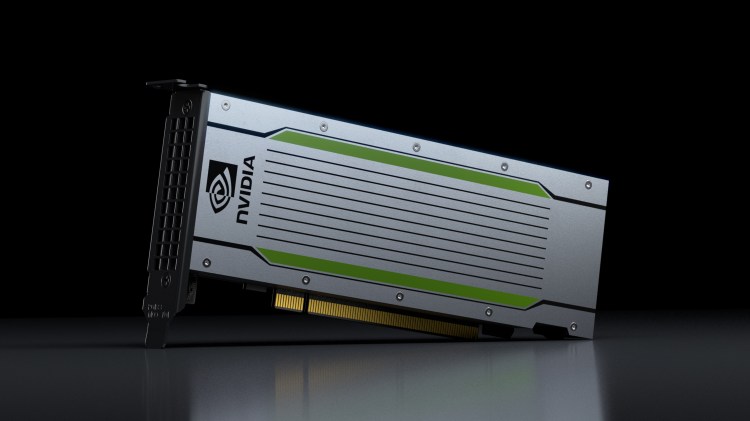

Nvidia today debuted the Tesla T4 graphics processing unit (GPU), a chip designed to speed up inference from deep learning systems in datacenters. The T4 packs 2,560 CUDA cores and 320 Tensor Cores, which Nvidia says let it process queries nearly 40 times faster than a CPU.

Inference is the process of running trained AI models on new data. It powers the intelligence behind services such as visual search engines, video analysis tools, and AI assistants like Alexa or Siri answering questions.

Two years ago, as part of its push to capture the deep learning market, Nvidia debuted the Tesla P4, a chip made specifically for deploying AI models. According to Nvidia, the T4 is more than 5 times faster than its predecessor at speech recognition inference and nearly 3 times faster at video inference.

An analysis by Nvidia found that nearly half of all inference performed on the P4 over the past two years involved video, followed by speech processing, search, and natural language and image processing.

Unlike the Pascal-based P4, the T4 is built on Nvidia's Turing architecture and its Turing Tensor Cores. Turing is expected to fuel a series of Nvidia chips, and Nvidia CEO Jensen Huang has called it the “greatest leap since the invention of the CUDA GPU in 2006.”

Since its debut last month, the Turing architecture has also been used to power GeForce RTX graphics chips, which bring real-time ray tracing to video games.

Nvidia CEO Jensen Huang announced the news onstage today during a presentation at the GTC conference in Japan.

Nvidia also launched the TensorRT Hyperscale Inference Platform today. It includes TensorRT 5, an upgraded version of Nvidia's TensorRT inference optimizer, and the NVIDIA TensorRT inference server, containerized software that works with popular frameworks like TensorFlow and integrates with Docker and Kubernetes.