Neural networks learn differently from people. A person who returns to a sport after years away might be rusty, but they will still remember much of what they learned long ago. A typical neural network, on the other hand, tends to forget what it learned earlier as soon as it is trained on something new. Virtually all neural networks today suffer from this “catastrophic forgetting.”
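Catastrophic forgetting shows up even in the smallest models. The following is a minimal toy sketch, not taken from the paper: a single shared linear model is trained with plain SGD on a hypothetical task A, then on a hypothetical task B, and its error on task A is measured before and after. Because both tasks must share the same two parameters, fitting task B overwrites what the model learned for task A.

```python
# Toy illustration of catastrophic forgetting (hypothetical setup, not ANML):
# one shared linear model y_hat = w * x + b is trained with plain SGD on
# task A, then on task B, and its task A error is measured both times.

def mse(w, b, data):
    """Mean squared error of the linear model on a list of (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(w, b, data, lr=0.05, epochs=200):
    """Per-example SGD on squared error; returns the updated parameters."""
    for _ in range(epochs):
        for x, y in data:
            err = w * x + b - y
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    return w, b

xs = [i / 10 for i in range(-10, 11)]
task_a = [(x, 2.0 * x + 1.0) for x in xs]   # task A: y = 2x + 1
task_b = [(x, -3.0 * x) for x in xs]        # task B: y = -3x

w, b = train(0.0, 0.0, task_a)
loss_a_before = mse(w, b, task_a)   # near zero once task A is learned

w, b = train(w, b, task_b)          # sequential training on task B only
loss_a_after = mse(w, b, task_a)    # task A error is now large

print(f"task A loss after training on A: {loss_a_before:.4f}")
print(f"task A loss after training on B: {loss_a_after:.4f}")
```

The first printed loss is close to zero and the second is far larger: nothing in plain SGD protects the parameters that task A depended on.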

It’s the Achilles’ heel of machine learning, OpenAI research scientist Jeff Clune told VentureBeat, because it prevents machine learning systems from achieving “continual learning,” the ability to learn new tasks without forgetting previous ones. But some systems can be taught to remember.

Before joining OpenAI last month to lead its multi-agent team, Clune worked with researchers from Uber AI Labs and the University of Vermont. This week, they collectively shared ANML (a neuromodulated meta-learning algorithm), which is able to learn 600 sequential tasks with minimal catastrophic forgetting.

“This is relatively unheard-of in machine learning. To my knowledge, it’s the longest sequence of tasks that AI has been able to do, and at the end of it, it’s still pretty good at all the tasks that it saw,” Clune said. “I think that these sorts of advances will be used in almost every situation where we use AI. It will just make AI better.”

Clune helped cofound Uber AI Labs in 2017, following the acquisition of Geometric Intelligence, and is one of seven coauthors of a paper called “Learning to Continually Learn” published Monday on arXiv.

Teaching AI systems to learn and remember thousands of tasks is what the paper’s coauthors call a long-standing “grand challenge” of AI. Meeting it would yield systems that can handle and remember a wide range of tasks, and Clune believes approaches like ANML are key to a faster path toward the field’s greatest challenge: artificial general intelligence (AGI).

In another paper Clune wrote before joining OpenAI (a startup with billions in funding aimed at creating the world’s first AGI), he argued that a faster path to AGI can be achieved through three pillars: meta-learning network architectures, meta-learning the learning algorithms themselves, and automatically generating training environments.

“If you had a system that was searching for architectures, creating better and better learning algorithms, and automatically creating its own learning challenges and solving them and then going on to harder challenges … [If you] put those three pillars together … you have what I call an ‘AI-generating algorithm.’ That’s an alternative path to AGI that I think will ultimately be faster,” Clune told VentureBeat.

ANML made progress by meta-learning solutions to problems instead of engineering them by hand. This is in keeping with a broader move toward searching for architectures and algorithms that achieve state-of-the-art results, rather than hand-coding them from scratch.
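The idea of meta-learning a solution rather than engineering it by hand can be sketched with a toy two-level loop. This is an illustrative assumption, not ANML’s procedure: ANML meta-learns the parameters of its networks, whereas here the outer loop simply searches over one learning-rule setting (the inner learner’s step size) and keeps whichever value leaves the learner best off after a few training steps, averaged over a hypothetical family of tasks. All names and numbers below are made up for illustration.

```python
# Toy "learning to learn" loop (hypothetical; a stand-in for meta-learning,
# not ANML's algorithm). Inner loop: a learner adapts one weight w to a task
# y = a * x with a few gradient steps. Outer loop: search over step sizes and
# keep the one with the lowest loss *after* inner-loop learning.

XS = [0.5, 1.0, 1.5]                  # shared inputs for every task
TASKS = [-2.0, -1.0, 1.0, 2.0, 3.0]   # hypothetical task family: slopes a

def task_loss(w, a):
    """Mean squared error of y_hat = w * x on task y = a * x."""
    return sum((w * x - a * x) ** 2 for x in XS) / len(XS)

def inner_loop(a, lr, steps=3):
    """Adapt w (starting from 0) to task a with full-batch gradient descent."""
    w = 0.0
    for _ in range(steps):
        grad = sum(2 * (w * x - a * x) * x for x in XS) / len(XS)
        w -= lr * grad
    return w

def meta_objective(lr):
    """Average post-adaptation loss across the task family."""
    return sum(task_loss(inner_loop(a, lr), a) for a in TASKS) / len(TASKS)

candidates = [0.01, 0.1, 0.3, 0.5, 1.0]
best_lr = min(candidates, key=meta_objective)
print("meta-learned step size:", best_lr)
```

The designer only specifies the objective (loss after learning); the outer loop, not a human, picks the step size. With these hypothetical tasks the search settles on 0.5: a step of 1.0 overshoots and diverges, while smaller steps leave the learner short of each target after three updates.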

Last week, an MIT Tech Review article argued that OpenAI lacks a clear plan for reaching AGI, as some of its breakthroughs are the product of computational resources and technical innovations developed in other labs. The average OpenAI employee, the article said, believes it will take 15 years to reach AGI.

An OpenAI spokesperson told VentureBeat that Clune’s vision of AI-generating algorithms is in line with the organization’s research interests and previous work, such as a model that enabled a robotic hand to solve a Rubik’s Cube. But the spokesperson did not share an opinion on Clune’s theory about a faster path to AGI.

The spokesperson also declined to answer questions about a roadmap to AGI or comment on the MIT Tech Review article, but said that Clune, as head of the multi-agent team, will focus on AI-generating algorithms, multiple interacting agents, open-ended algorithms, automatically generating training environments, and other versions of deep reinforcement learning.

“They hired me to pursue this vision and they’re interested — a lot of their work is very aligned with this vision, and they like the idea and hired me to keep working on it in part because it’s aligned with work they’ve published,” Clune said.

ANML, neuromodulation, and the human brain

ANML achieves state-of-the-art continual learning results by meta-learning the parameters of a neuromodulation network. Neuromodulation is a process found in the human brain in which some neurons can inhibit or excite other neurons, including triggering them to learn.

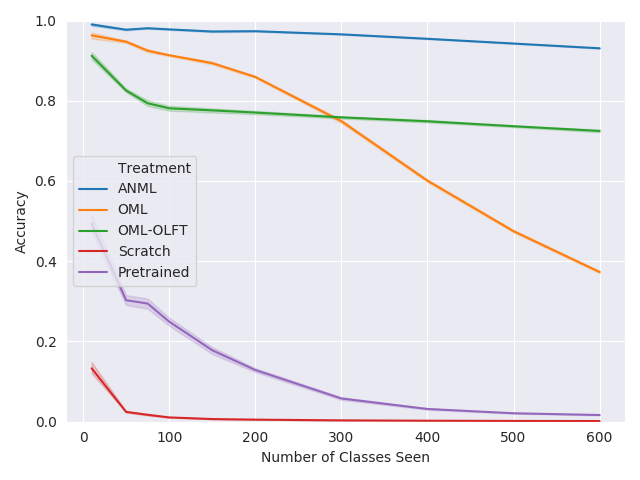

Above: Meta-test training classification accuracy

In his lab at the University of Wyoming, Clune and colleagues previously demonstrated that they could completely overcome catastrophic forgetting, but only in smaller, simpler networks. ANML scales that reduction in catastrophic forgetting up to a deep learning model with 6 million parameters.

It also builds on OML (online-aware meta-learning), a model introduced by Martha White and Khurram Javed at NeurIPS 2019 that can complete up to 200 tasks without catastrophic forgetting.

But Clune said ANML differs from OML because his team realized that turning learning on and off is not enough on its own at scale; the network also needs to modulate the activation of neurons.

“What we do in this work is we allow the network to have more power. The neuromodulatory network can kind of change the activation pattern in the normal brain, if you will, the brain whose job it is to do tasks like ride a bike, play chess, or recognize images. It can change that kind of activity and say ‘I only want to hear from the chess-playing part of your network right now,’ and then that indirectly allows it to control where learning happens. Because if only the chess-playing part of the network is active, then according to stochastic gradient descent, which is the algorithm that you use for all deep learning, then learning will only happen more or less in that network.”
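Clune’s point about gradient descent can be made concrete with a minimal sketch. It assumes a deliberately tiny setup that is not the ANML architecture (which is far larger): a one-hidden-layer prediction network whose ReLU activations are multiplied elementwise by sigmoid gates that a separate neuromodulatory network computes from the same input. The weights and function names are hypothetical. By the chain rule, each hidden unit’s weight gradient is scaled by its gate, so a unit the gate switches off barely learns.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def forward(x, W, u, V):
    """Prediction net: ReLU hidden layer (weights W) and linear output (u).
    Neuromodulatory net: one sigmoid gate per hidden unit (weights V),
    computed from the same input and multiplied into the hidden activations."""
    pre = [dot(row, x) for row in W]
    h = [max(0.0, p) for p in pre]
    g = [sigmoid(dot(row, x)) for row in V]
    gated = [hi * gi for hi, gi in zip(h, g)]
    y = dot(u, gated)
    return pre, g, y

def grad_W(x, target, W, u, V):
    """Hand-derived gradient of 0.5 * (y - target)**2 with respect to W:
    dL/dW[i][j] = (y - target) * u[i] * g[i] * relu'(pre[i]) * x[j],
    so a gate g[i] near 0 suppresses learning in hidden unit i."""
    pre, g, y = forward(x, W, u, V)
    err = y - target
    grads = []
    for i in range(len(W)):
        relu_grad = 1.0 if pre[i] > 0 else 0.0
        grads.append([err * u[i] * g[i] * relu_grad * xj for xj in x])
    return grads

# Hypothetical weights: both hidden units are active on this input, but the
# neuromodulatory net opens the gate for unit 0 and nearly closes it for unit 1.
x = [1.0, 2.0]
W = [[0.5, 0.5], [0.5, 0.5]]
u = [1.0, 1.0]
V = [[5.0, 5.0], [-5.0, -5.0]]

grads = grad_W(x, 0.0, W, u, V)
print("unit 0 (gate open):  ", grads[0])
print("unit 1 (gate closed):", grads[1])
```

The two hidden units are identical except for their gates, yet unit 1’s weight gradient is smaller by a factor of e^15 (over six orders of magnitude). The gate also silences that unit’s contribution to the output, which is the “only hear from one part of the network” behavior Clune describes.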

In this initial work, ANML uses computer vision to recognize human handwriting. As a next step, Clune said, he and others at Uber AI Labs and the University of Vermont will scale ANML up to try to accomplish more complex tasks. Work on ANML is supported by a DARPA Lifelong Learning Machines award.