In the aftermath of the magnitude 9.0 earthquake that struck off the coast of Japan’s Honshu Island in 2011, primary power and cooling systems at Tokyo Electric Power Company’s Fukushima Daiichi nuclear power plant were knocked offline. Three of the reactors melted down within the first three days, mainly as a result of flooding from the nearly 50-foot tsunami generated by the earthquake. And in the months that followed, an estimated 600 tons of radioactive fuel leaked from storage units. The reactors are expected to take 30 to 40 years to decommission.

Inspecting damaged nuclear plants poses a unique challenge. Radiation inside one of the Fukushima Daiichi reactors was recorded at 73 sieverts, more than 7 times the fatal dose. (Ten sieverts would kill most people exposed to it within weeks.) That’s why researchers at the University of Pennsylvania’s GRASP Lab and New York University’s Tandon School of Engineering propose that robots undertake the job instead. In a paper published on the preprint server arXiv.org, they describe an autonomous quadcopter designed to inspect the interior of hazardous sites.

Robots have been used to inspect containment buildings before; the paper’s authors note that wall-climbing robots are a popular choice among cleanup crews. But those machines are remotely teleoperated, physically large, and heavy. Some commercially available models measure 10 meters in length and weigh more than 1,000 kilograms.

By contrast, the researchers say, airborne drones are well-suited to the cluttered, confined interiors of post-meltdown reactors, which often lie beyond the range of GPS and local wireless. They’re also compact enough to squeeze through tight openings like entry hatches, which can measure less than 0.3 meters in diameter.

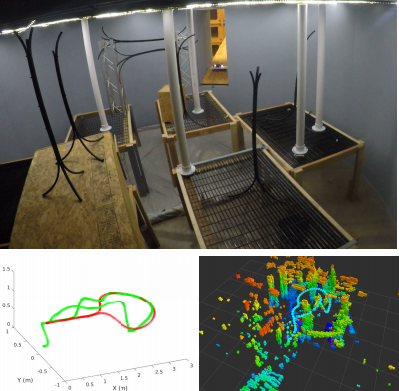

Above: One of several test environments the drone navigated.

“The primary goal of this work is to develop a fully autonomous system that is capable of inspecting inside damaged sites,” the researchers write. “The proposed solution opens up new ways to inspect nuclear reactors and to support nuclear decommissioning, which is well known to be a dangerous, long, and tedious process.”

The team’s solution comprises two parts: an autonomous system with state estimation, control, and mapping modules running on custom-designed hardware, and a map-based obstacle avoidance and navigation system. Their UAV, a 236-gram quadcopter 0.16 meters in diameter that uses Qualcomm’s Snapdragon Flight system-on-chip, localizes itself with visual-inertial odometry, which fuses readings from an inertial measurement unit (IMU) with images from a downward-facing camera. A stereo front-facing camera feeds an algorithm that builds a map of the environment, which the drone uses for real-time obstacle avoidance and trajectory planning.
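To make the idea of map-based obstacle avoidance concrete, here is a minimal sketch in Python. It is not the researchers' implementation: the 2D occupancy grid, the 0.08-meter cell size, the breadth-first search, and the function names `inflate` and `plan` are all assumptions chosen for illustration. Only the 0.16-meter drone diameter comes from the paper as reported above. The sketch pads every obstacle by the drone's radius so the planner can treat the drone as a point, then searches the remaining free cells for a route.

```python
import math
from collections import deque

# Assumed values for illustration; only the 0.16 m diameter is from the article.
DRONE_RADIUS_M = 0.16 / 2
CELL_SIZE_M = 0.08  # hypothetical map resolution


def inflate(grid, radius_cells):
    """Return a copy of grid with every cell within radius_cells of an obstacle blocked."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if not grid[r][c]:
                continue
            for dr in range(-radius_cells, radius_cells + 1):
                for dc in range(-radius_cells, radius_cells + 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        out[rr][cc] = 1
    return out


def plan(grid, start, goal):
    """Breadth-first search over free, 4-connected cells. Returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and not grid[nr][nc] and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


# A 5 x 7 map with a single occupied cell in the middle (1 = obstacle).
grid = [[0] * 7 for _ in range(5)]
grid[2][3] = 1

radius_cells = math.ceil(DRONE_RADIUS_M / CELL_SIZE_M)
inflated = inflate(grid, radius_cells)
path = plan(inflated, (2, 0), (2, 6))
naive_path = plan(grid, (2, 0), (2, 6))
```

Planning on the inflated map yields a longer route than planning on the raw map, because the drone keeps a body-width margin around the obstacle. A real system would work in 3D, update the map continuously from the stereo camera, and produce smooth trajectories rather than grid steps.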

In three experiments carried out in a full-scale mockup of a primary containment vessel (PCV), covering an obstacle avoidance course, luminance tests, and inspection, the quadcopter performed well, the researchers say. It detected and avoided obstacles just 0.25 meters in diameter and safely maneuvered itself away from the mockup’s clutter.

“We showed the possibility to concurrently run [mapping and planning algorithms] onboard [a drone] with limited computational capability to solve a complex nuclear inspection task,” the researchers write. “To the best of our knowledge, this is the first fully autonomous system of this size and scale applied to inspect the interior of a full-scale mockup PCV for supporting nuclear decommissioning.”

They leave to future work smaller-scale platforms with faster sensors, improved algorithms, and onboard LEDs.