Not all voice platforms are created equal. Google Home speakers are 3 percent less likely to give accurate responses to people with Southern accents than those with Western accents, according to studies commissioned by the Washington Post. And voice validation datasets like Switchboard have been shown to favor speakers from particular regions of the country.

A report published this week by Vocalize.ai more or less confirmed that the “accent gap” is alive and well, but it placed one competitor ahead of the others: Google.

In a series of three tests, Vocalize.ai, a lab that develops test suites for automated speech recognition systems, evaluated the performance of a Google Home speaker, an Amazon Echo device, and Apple’s HomePod across the accents of foreign-born residents living in the U.S. It used three English datasets — one in an Indian accent, one in a Chinese accent, and one in a U.S. accent — recorded by voiceover professionals.

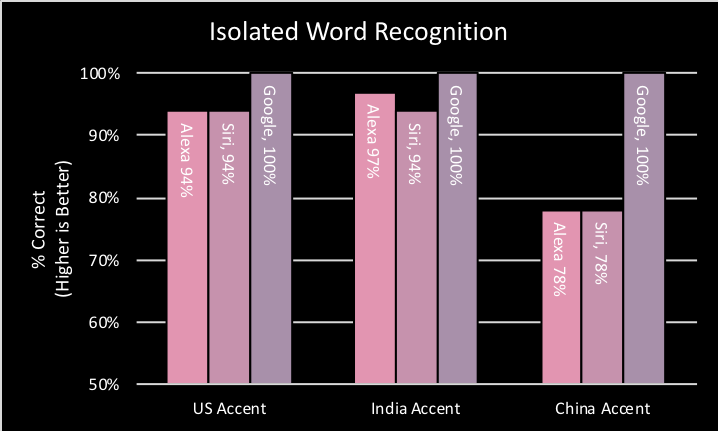

Above: Isolated word recognition performance.

The first test measured the speakers’ responses to 36 spondaic words (two-syllable words with equal stress on both syllables) at a constant volume (50dB) and distance (1 meter). The Google Home speaker recognized words spoken in the U.S. accent, Indian accent, and Chinese accent 100 percent of the time, while the HomePod and Echo managed to catch about 94 percent of words in the U.S. and Indian datasets and 78 percent in the Chinese dataset.
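
For readers who want to see how a 36-word list maps onto those percentages, here is a minimal sketch. The word counts (34 and 28 recognized) are assumptions chosen because they round to the reported 94 and 78 percent; the article does not state the raw counts, and `recognition_rate` is a hypothetical helper, not something from the Vocalize.ai report.

```python
# Hypothetical helper: the recognized-word counts used below are assumptions
# picked to match the reported percentages, not figures from the report.
TOTAL_WORDS = 36  # size of the spondaic word list, per the article


def recognition_rate(recognized: int, total: int = TOTAL_WORDS) -> float:
    """Return the share of test words recognized, as a percentage."""
    if not 0 <= recognized <= total:
        raise ValueError("recognized must be between 0 and total")
    return 100.0 * recognized / total


# Assumed counts: no misses, two misses, eight misses.
for label, recognized in [("no misses", 36), ("two misses", 34), ("eight misses", 28)]:
    print(f"{label}: {recognition_rate(recognized):.0f}%")
# prints 100%, 94%, and 78% respectively
```

On a list this short, every missed word moves the score by nearly 3 percentage points, so a 16-point gap corresponds to only about six additional misses.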

“[It’s] a clear indication that people speaking English with a Chinese accent may have to make an extra effort to carefully [enunciate] each word,” Vocalize.ai wrote.

In the second test, which measured the speech recognition threshold of each speaker, the firm found that while the Google Home and Echo speakers had maximum ranges of between 1dB and 2dB, the HomePod’s was 6dB, suggesting that accented speech had a noticeable impact on its recognition.

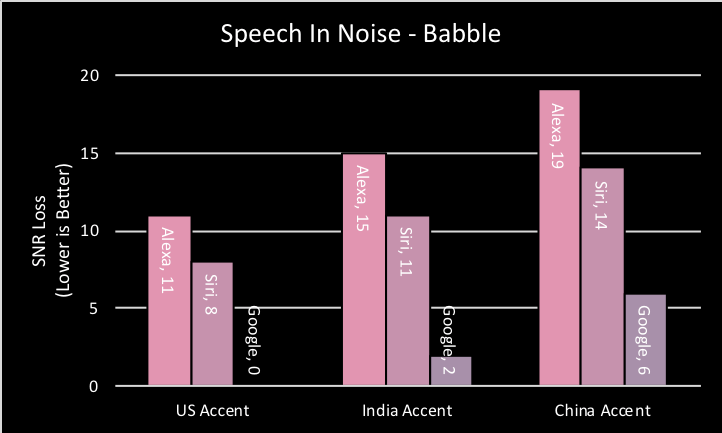

Above: Speech in noise performance.

The third and final test, modeled after the speech-in-noise (SIN) tests used to check human hearing, tasked the three smart speakers with picking up on words in sentences with background noise. The Google Home speaker had the lowest signal-to-noise ratio across all three datasets, rarely exceeding 5dB; the HomePod’s ranged from 8dB to 14dB; and the Echo device, the worst performer, hit 19dB on the Chinese-accented English dataset.
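
To put those decibel figures in perspective, the sketch below converts a signal-to-noise ratio into the amplitude ratio it implies. It assumes the standard amplitude-based definition, SNR in dB = 20 · log10(signal / noise); the report's exact measurement procedure is not described here, and the function names are illustrative.

```python
import math


def snr_db(signal_rms: float, noise_rms: float) -> float:
    """Signal-to-noise ratio in decibels, computed from RMS amplitudes."""
    if signal_rms <= 0 or noise_rms <= 0:
        raise ValueError("amplitudes must be positive")
    return 20.0 * math.log10(signal_rms / noise_rms)


def amplitude_ratio(db: float) -> float:
    """Inverse of snr_db: how many times larger the speech amplitude is than the noise."""
    return 10.0 ** (db / 20.0)


# Figures quoted in the article: 5dB (Google Home) and 19dB (Echo, Chinese-accented dataset).
for db in (5, 19):
    print(f"{db} dB -> speech amplitude about {amplitude_ratio(db):.1f}x the noise")
# prints about 1.8x for 5 dB and about 8.9x for 19 dB
```

Under that assumption, a speaker that needs 19dB requires speech roughly five times stronger in amplitude, relative to the background noise, than one that copes at 5dB.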

“The future is bright for conversational computing and the voice-first generation. Nevertheless, there are challenges unique to voice which need to be addressed,” the Vocalize.ai team wrote in conclusion. “Developing new tools, driving consensus and expanding datasets are all critical for ensuring speech recognition works well for everyone, regardless of gender, age or accent.”

Biased voice recognition systems are nothing new, but the good news is that some firms are trying to address the problem. Speechmatics, a Cambridge tech firm that specializes in enterprise speech recognition software, developed a language pack that supports all major English accents for speech-to-text transcription. And Burlington, Massachusetts-based Nuance employs a machine learning model that switches automatically between several different dialect models depending on the user’s accent.