Above: Is AR simpler than reality?

VentureBeat: The 50-button remote on your TV.

Ficklin: Right. Or the 12-button controller for the Vive. We've asked ourselves: can we get to that single-Home-button simplicity and use integrated reality, along with gesture and voice, which are coming to the system, to fill in for the complexity? So yes. We're going for a very approachable simplicity, and a lot of it is already there. I've put this on my eight-year-old son. He started playing Create and he just understood the system. It was very natural to him.

VentureBeat: But you want to be able to do very complex things with these simple controls.

Ficklin: Yes, absolutely. There are ways to accomplish that by using spatial input as well. But there's also the idea of layers: the mobile companion app is a control. Gestures can be a control. Voice can be a control. With these layers, you can start building combinations that are powerful.

VentureBeat: Like when the iPhone gave you a button to start an app. That took the half-dozen command lines you used to have to type in and turned them into one button.

Ficklin: Right. The way they accomplished that was by controlling context. When you pressed the Home button, you knew what it was going to do. We control context slightly differently because we're in spatial computing. It's what you're looking at. Look, grab, and place. Look, swipe, and select. I can have four apps up, look at one, hit that button, and it'll go back. I can look at empty space, hit the button, and it'll spawn a home button. I can look at this, hit the bumper, and get a context menu. What you're looking at does the same thing as having a single screen here.

That eliminates an entire click compared with every system we use on our laptops. There, before we do anything, we have to locate the window, put it in focus, and only then begin interacting. It's still a simple model, and it's powerful. But with this one we were able to reach another level of simplicity because we know those things. A laptop doesn't know what you're looking at. We know where you're looking.

That's going to get more and more powerful as we advance the system. Right now we're using head-pose rays, right? But with further software development, there's nothing to stop us from knowing where your pupils are looking and using that to make it even more intuitive and powerful.

Above: Technology has to be easy to use.

VentureBeat: Do you think we already have what we need, or are there things we haven't invented yet? We have VR hand controllers with some finger detection, but some people just want to get rid of those and say, "Give me 10 fingers in VR." That's pretty complex, though. It's a lot of things to keep track of.

Ficklin: What's complex about the 10 fingers is that there's no convention for it in computing. You have to learn and make up a whole new model. Or you can go to pure direct manipulation, but that's tiring. It goes back to the fact that we're tool-using creatures for a reason. Imagine picking a Netflix movie with a 10-finger model. Unless I can get down to real micro-gestures, you're pushing all these things around to find a video. Do that for 30 seconds and you're exhausted. You just want a mouse or a controller.

To answer the broader question rather than just the example: all the parts and pieces are there. What needs to be built is the coordinated model and the technology that allows these things to be strung together. In my conversational computing example, that's a combination of gesture and voice. The user intuitively knows the system, but the technology needs to be able to string those moments together and capture them as a command. That's where the work lies. There's a lot to be done.

VentureBeat: What’s ahead for you, then? How much exploratory work or research is there, relative to actually building things?

Ficklin: That is the work ahead. There's also the work of things we already know how to do but still need to turn on. Remember that the iPhone launched without copy and paste. It was quite some time before we got it. That took two things: bringing users along into this new model of computing to the point where they could understand it, and getting the technology ready. It ended up arriving as an over-the-air update quite a long way in. Switching between apps, the hold button, that wasn't there on the first day either. It took a while. These are systems that need building. As for what's next, there are a lot of little moments that will arrive with each update. It'll just get simpler and simpler.

I'm really excited. There's a lot in this device. It's why we signed up for this long-term journey. It's an opportunity to build the next pattern for computing, and there's a lot of promise here. I'm a product designer who has spent the last 20 years working on just about every breakthrough technology, and this one is really exciting. With the convergence of features and sensors, and the amount of platform in one place, it has the potential to be more than just a console. It really will be a mobile wearable computer. I think people will have a lot of reasons to put it on for their digital lifestyle.

We live a digital lifestyle now, and we love it. I joked in my keynote that people have walked into ponds in the middle of their digital lifestyle. You see these funny videos on YouTube all the time. It's not that phones are going away, but we're ready for another pattern where we can be more heads-up, present, and aware of our environment, and where the environment can be more present and participatory in our computing. Now our digital lifestyle can come out into the world.

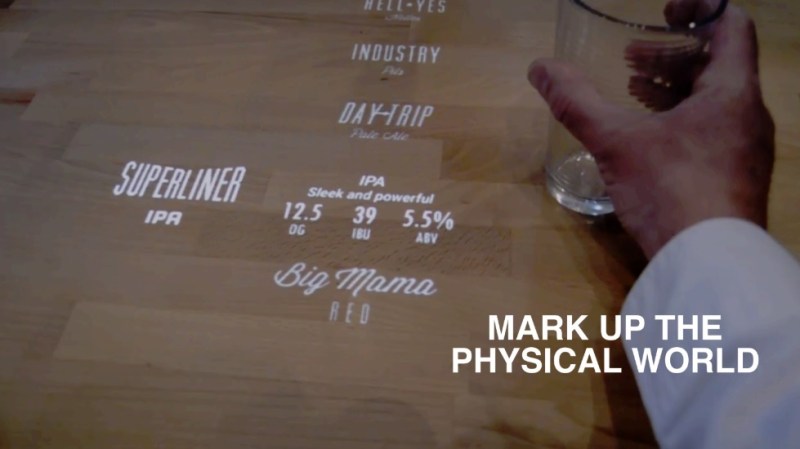

Augmented reality has been treated as a marketing trick for a little too long. Put a Heineken logo on that wall, put up some arrows that tell you where to buy a Stella Artois. That view is all about putting information into the real world. But the truth is, what we want out of AR is our digital lifestyles coming into the world, and we want the world to participate with the digital as much as the digital participates with the world.

Above: The look and feel of augmented reality

VentureBeat: I think about my 85-year-old mother, who has dementia. She could never figure out how to use an iPhone. She remembers how to do things the old way, like using a rotary-dial or touch-tone phone, but she had no idea how to use a touchscreen phone. I constantly get calls from her because she accidentally touches my name on the screen. The phone has all these features meant to make it easier for her, like voice recognition that turns my voice into captions on the screen because she's hard of hearing, but she can't get the basics of using it.

Ficklin: You just illustrated the power of convention. It's funny. The "mixed" in mixed reality, as much as it might mean the digital and physical worlds turning into a new "real" world, may also mean the mixing of conventions. You could have the whole bell curve of computer users able to approach the device. From my point of view, this is not something designed only for early adopters. This looks like a platform designed, at some point, to reach all computer users.

It breaks out of the console model, right? Consoles have always had trouble reaching beyond early adopters, even though they offer amazing experiences for entertainment and socialization. Being locked to the TV (what we used to call the 10-foot computing experience) has always held them back.

VentureBeat: It's like, why can't you use that 16-button controller? What's the matter with you? The measure of success in the future could be what slice of humanity can use this thing.

Ficklin: Exactly. A lot of living rooms have that Xbox One, but then they watch their streaming through a Roku or an Apple TV that has just a couple of buttons. It’s fascinating. The model is important. That’s what we’ve circled back around to.