One of the interesting talks during Magic Leap’s laborious three-hour keynote presentation last week was by Jared Ficklin, creative technologist and partner at Argodesign, a product design consultancy. Ficklin’s company signed on to help Magic Leap, the creator of cool new augmented reality glasses, create the next-generation user interface for something called “spatial computing,” where digital animations are overlaid on the real world and viewable through the AR glasses.

At the Magic Leap L.E.A.P. conference, Ficklin showed a funny video of people looking at their smartphones and walking into things because they aren’t seeing the world around them. One guy walks into a fountain. Another walks into a sign. With AR on the Magic Leap One Creator Edition, you are plugged into both the virtual world and the real world, so things like that aren’t supposed to happen. You can use your own voice to ask about something that you see, and the answer will come up before your eyes on the screen.

For Ficklin, this kind of computing represents a rare chance to remake our relationship with the world. He wants this technology to be usable, consistent, compelling – and human. Ficklin spent 14 years at Frog Design, creating products and industrial designs for HP, Microsoft, AT&T, LG, SanDisk, Motorola, and others. For many years, Ficklin directed the SXSW Interactive opening party, which served as an outlet for both interactive installations and a collective social experiment hosting over 7,000 guests.

After his talk last week, I spoke with Ficklin at the Magic Leap conference. Here’s an edited transcript of our interview.

Above: Magic Leap One will make possible “spatial computing.”

VentureBeat: Tell me what your message was in the talk today.

Jared Ficklin: Yesterday there was an announcement that Argodesign is now a long-term strategic design partner with Magic Leap. One of the things we were brought in to work on was creating the interaction model for this kind of mixed reality computing, for the Magic Leap One device. How is everyone going to use the control and the different interaction layers in a consistent manner that’s simple and intuitive for the user?

In a lot of ways it’s the model that plays a big role in what attracts people. We had feature phones for 10 years. They had all kinds of killer apps on them. But iPhone and iOS came out with a new model for handheld computing and everyone jumped on board. We had computers for 40 years before the mouse really came along. It suddenly had a model that users could approach and everyone used it.

Right now, in the world of VR and AR, you don’t have a full interface model that matches the type of computing that people want to do. It’s in the hands of specialists and enthusiasts. What we’re trying to do with Magic Leap is invent and perfect that model. The device has all the sensors to do that. LuminOS is a great foundation, a great start for that. It’s going to be simple, friction-free, and intuitive. We’re using a lot of social mimicry for that, looking at the way people interact with computers and the real world today, how we communicate with each other, both verbal and non-verbal cues. We’re building a platform-level layer that everyone can use to build their applications.

VentureBeat: What’s an example of something that helps a lot now as far as navigating or doing something in Magic Leap?

Ficklin: A great example that’s coming, that’s going to be different—we announced we’re turning on six degrees of freedom in LuminOS. This means you have pitch, yaw, and roll from the control, inside out. You don’t need any other peripherals, just the control. That means you can invoke a ray off the end of the control and use it to point at objects, grab them with the trigger, and place them. You can interact with digital objects in the same way you do in the real world. But you can also calmly swipe and select objects.

The use cases for this are anywhere from going through a menu interface, like picking a Netflix show, to moving objects that you’ve used to decorate your space. It’s going to be really important when you start combining depth in the context of a room. Think of Spotify as a mixed reality app. You may have a menu where you’re going to put your playlist together, and then you could collapse the thing down to be this cool little tree that you set on your coffee table. When you go to leave you pick it up and it follows you like the Minecraft dog. You always have your music with you. We need that kind of model.
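The pointing ray Ficklin describes can be illustrated with a small sketch. The Python below is not Magic Leap’s or LuminOS’s API. It is a hypothetical, minimal model that assumes each digital object is approximated by a bounding sphere and that the control reports a position and a non-zero pointing direction. The names `Sphere`, `ray_hit_distance`, and `pick` are invented for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sphere:
    """Hypothetical stand-in for a digital object placed in the room."""
    name: str
    center: Vec3
    radius: float


def ray_hit_distance(origin: Vec3, direction: Vec3, sphere: Sphere) -> Optional[float]:
    """Distance along the ray to the sphere's surface, or None on a miss."""
    # Normalize the pointing direction (assumed to be non-zero).
    length = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / length for c in direction)
    # Vector from the control to the sphere's center.
    to_center = tuple(c - o for c, o in zip(sphere.center, origin))
    # Distance along the ray to the point closest to the center.
    t_closest = sum(a * b for a, b in zip(to_center, d))
    if t_closest < 0:
        return None  # the object is behind the control
    # Squared distance between that closest point and the center.
    miss_sq = sum(a * a for a in to_center) - t_closest ** 2
    if miss_sq > sphere.radius ** 2:
        return None  # the ray passes beside the object
    return max(0.0, t_closest - math.sqrt(sphere.radius ** 2 - miss_sq))


def pick(origin: Vec3, direction: Vec3, objects: Sequence[Sphere]) -> Optional[Sphere]:
    """Return the nearest object the ray touches, or None if it hits nothing."""
    hits = []
    for obj in objects:
        dist = ray_hit_distance(origin, direction, obj)
        if dist is not None:
            hits.append((dist, obj))
    if not hits:
        return None
    return min(hits, key=lambda hit: hit[0])[1]


# Illustrative room contents; the names and coordinates are made up.
room = [
    Sphere("playlist_tree", (0.0, 0.0, -2.0), 0.3),
    Sphere("lamp", (0.0, 0.0, -5.0), 0.5),
    Sphere("poster", (3.0, 0.0, -2.0), 0.5),
]
target = pick((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), room)
```

In this sketch, a trigger pull would act on whatever `pick` returns. A real system would test against actual object geometry rather than spheres.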

Another thing we have to handle here is, there’s a bit of an old-is-new-again situation that I think is fascinating. There are entire generations that have grown up and never done multi-function computing. They’ve had smartphones. It’s always one app at a time. Multi-function is a layer over that where you copy and paste.

Above: Augmented reality could simplify and bring order to the real world.

VentureBeat: Having 20 browser tabs open, that kind of thing.

Ficklin: Right. If you think of the classic fear of missing out, I was talking to someone last night, someone younger than me, telling a story. They were a very smartphone-centric person. I said, “Imagine having Facebook, Twitter, Instagram, and WhatsApp open at the same time!” You and I laugh, because we grew up with personal computing, laptops and desktops. But there are kids like my son – he’s eight – who have never done it. The idea that you could have all four of those open at once is revolutionary.

We have handheld mobile computing right now. Another way to describe this is wearable mobile computing. It’s really convenient, really cool. We’ve seen the game market, the engaging 3D mixed reality market, but I think there will be another reason people put this on. That’s when they remember the delights of multi-function computing. That’s why this input model is so important. They’re going to be moving between apps.

On our laptops, one of the common things we do is we’re always minimizing and maximizing and refocusing windows. In spatial computing, you do a lot more shifting around in the room. You have to spread stuff out and bring it together, like you do on a desktop. Any time you’re working at a desk, it’s a matter of putting this over here and bringing that close to where I’m working. We have to make those types of maneuvers really easy for people. Then they’ll engage in the ways they’re familiar with, swiping through a menu and pulling a trigger to select.

Above: Magic Leap overlays digital animations on the real world via AR glasses.

VentureBeat: John Underkoffler, the designer who created the computer interface for Minority Report, spoke at our conference recently. He put out this call to game developers: you’ve been working in 3D worlds for so long, so can you help us invent the next generation of interaction with computers? He was struggling in some ways to come up with how to navigate this kind of computing.

Ficklin: There’s a couple of reasons for that, I would offer. One is that we’re not dolphins. We see stereographically. We don’t really see in 3D. Take a library. We don’t arrange our libraries in 3D. A 3D library would be a cube of books, and we wouldn’t be able to see the books in the middle. We’re not bats or dolphins. We arrange our libraries in two dimensions. We translate ourselves around these lined-up shelves of books.

That’s one challenge. We have to deal with our perception of what 3D is, from a human standpoint, versus what’s possible in a digital space. They don’t always line up. You have to respect the real world, real world physics. But at the same time, what’s magical about these flat screens is how low-friction they can make the data interchange. I don’t have to walk around a library to get all the books anymore. There’s a line where you want to bring that magic, that science fiction to the user, that low friction, and then a certain line where you want to be truly more 3D.

We were just talking about spatial arrangement. That’s going to be really important for shuffling where your focus is at the time. The second thing we like to say is, Dame Judi Dench will not be caught fingering the air. What that means, there’s a certain social acceptance to what people will do with their hands. We have to be very respectful of that, so they feel comfortable having the device on and interacting with it. Those layers will be ones they feel comfortable doing in front of other people or with other people. It can’t be too tiring. You need comfortable moments of convenience.

It’s a very powerful gesture system, because it’s from the perspective of the user. We’ll continue to advance it. They don’t have to be a conductor so much as they can just be human. The combination of all of this is something that I would call conversational computing. It sounds like voice computing, except we’re having a conversation now, and both you and I are using non-verbal gestures to communicate. You’re slowly nodding your head right now. You’re looking in different directions. I could say, “Hey, could you go get me that?” and you know what I’m talking about because I pointed at it.

Above: Is this more human?

When you take the work Underkoffler did, which has a lot of gesture manipulation, and you begin combining that with voice, the context comes together into a really human, intuitive interface. The device uses your gestures and non-verbal cues to establish half the context. You only have to establish the other half with voice. That’ll be a really interesting interface for navigating these spaces.

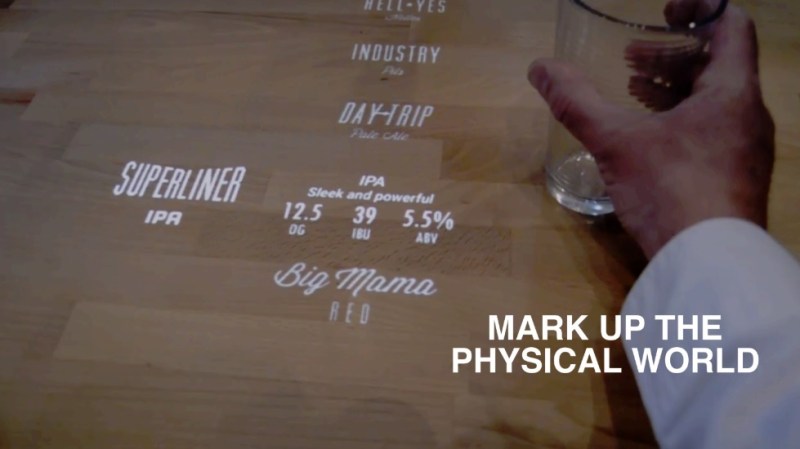

The other thing is that this is room-sized. I can point across the room, even though I can only reach as far as my hand goes, before I have to begin using a tool or projecting a ray. That will be done. I can say, “Hey, bring that over here.” When you combine that with the verbal command structures, it’ll be a really intuitive interface. Objects are going to participate, which was a disadvantage you saw in the Minority Report concept. It was a great system, but it was pinned to a wall. Why don’t we start playing with more objects? I have this little terrarium here. My system can recognize what it is and hang a lot of cool stuff on that. You could have all kinds of digital information about the plants in here.
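The idea that a gesture supplies half the context and voice supplies the other half can be sketched in a few lines. The Python below is a hypothetical illustration, not how LuminOS works: it assumes the system already knows which object the user is pointing at (`pointed_at`) and already has a text transcript of the speech. The verb table, the `Command` type, and the `fuse` function are invented for this example.

```python
from dataclasses import dataclass
from typing import Optional

# Words that only make sense together with a pointing gesture (assumed list).
DEICTIC_WORDS = {"that", "this", "it"}

# Hypothetical mapping from spoken verbs to system actions.
VERBS = {"bring": "MOVE_TO_USER", "open": "OPEN", "close": "CLOSE"}


@dataclass
class Command:
    action: str
    target: str


def fuse(utterance: str, pointed_at: Optional[str]) -> Optional[Command]:
    """Combine a spoken phrase with the pointed-at object into one command.

    Returns None when either half of the context is missing, which is the
    point where a real system would ask the user to clarify.
    """
    words = [w.strip(",.!?") for w in utterance.lower().split()]
    action = next((VERBS[w] for w in words if w in VERBS), None)
    if action is None:
        return None  # no recognized verb in the speech
    if not any(w in DEICTIC_WORDS for w in words):
        return None  # this sketch only handles "this/that/it" phrasing
    if pointed_at is None:
        return None  # the user said "that" but pointed at nothing
    return Command(action, pointed_at)


command = fuse("Hey, bring that over here.", pointed_at="terrarium")
```

Here the speech contributes the action and the gesture contributes the target. Neither input alone is enough to form a command.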

I can also look at it as a control. This thing is round, so it qualifies as a spinner, a dial. I could have some UI here, move it near my Spotify tree, and use it to turn the volume up and down. I can take another object that’s more rectangular and use it as an on-off switch. We can look at objects and start allowing them to participate in the digital environment as control surfaces. We can start arranging intuitive interfaces wherever we need them out of the objects at hand. We can also begin using them as anchors for the experience. This becomes the new shortcut on the desktop, where I might take my Spotify and plant it here. Acknowledging the role of the physical and digital will also help solve how to interact with 3D interfaces.

VentureBeat: You also want to minimize the number of mistakes people make with these interfaces, right? The most common problem I have with this touchpad is I’ll accidentally highlight something and delete it all.

Ficklin: Absolutely. I have 25 screen captures in my iPhone because this button is opposite that button, and so every time I try to lock my phone or adjust the volume I end up taking a screenshot. There are two parts to that. One is a really good feedback loop, to make the user confident they’ve done the correct action. Second, if it’s not perceived to be working correctly, you may want to decide you should edit it back. This is important in the world of voice and gesture. In voice computing, 90 percent accuracy is almost not good enough. You need good accuracy and good feedback. When the feedback loop is connected to the user’s actions, they’re far more forgiving and far more confident. They’re not afraid of using the interface.

VentureBeat: Do you think that game interfaces have solved some of these problems? Are there some examples to be avoided there? You can easily get lost in 3D worlds.

Ficklin: You’ve played Minecraft, I’m sure. Have you ever gotten lost in Minecraft?

Above: Bringing together the real and the virtual.

VentureBeat: No, because they’ve kind of 2D’d what goes on in 3D there.

Ficklin: I get super lost in Minecraft. I’ll get down into a new mine and I guess I’m bad at leaving torch trails. Then you have to dig to the surface. Maybe I don’t know all the tricks the kids know. But to your point, yes, what you really want is a simple hierarchy. There’s a select-back context principle to LuminOS, where it’s going to be multitasking. That’s very familiar to us. We use these types of navigation trees every day. Hyperlinking on the web established them for us. Smartphones made them a cardinal law. That system is important because you don’t get lost in it. You can always home yourself.

A lot of video game principles do want you to get lost, or they have magic navigators that move you through the experience. That’s going to work in gaming. A lot of this interface stuff I’m talking about is only important to gaming in the parts where it looks like an application. Games will invent amazing stuff with all of these systems, but when it comes to living your digital life – productivity, entertainment, socialization – depth is going to be really cool, but context is going to be cooler. Having my Spotify in the room with me is cool. Having 3D-ness help that happen is cool. But there still needs to be that familiar friction-free computing experience, so we don’t have to walk around the library, as I was talking about earlier, and potentially get lost.

Before Argo I worked at Frog Design for 14 years. We were asked to make a lot of 3D interfaces over the years. It was always the same call: “How do we make a 3D interface?” That’s why I talk about being humans and not dolphins. It’s a very difficult challenge.

We have a big advantage here, because the computer is wearable and mobile. The context of the room and having access to the surfaces will give us a giant leap forward. It’ll be cool to just take something out and slap it on that wall. It has a lot of semantic meaning. It means I want that here on this wall when I come back. I might have a copy of Spotify in the kitchen, a copy in the living room, a copy hanging in the bedroom. As I move between the three, the app will do a seamless handoff. I’ll always know that when I look over there, there’s Spotify.

If you use 3D-ness to connect to the physical space, in the computing interface it’ll be really awesome. In the gaming interface, somebody has to make a new version of Oregon Trail now, right? You start in the kitchen and you go to the living room and it procedurally makes this cool trail for you. [laughs]

VentureBeat: Are you simplifying things that are more complex? What’s an example there?

Ficklin: Look at the control. Look at Magic Leap and the control compared to a lot of other systems.

Above: Is AR simpler than reality?

VentureBeat: The 50-button remote on your TV.

Ficklin: Right. Or the 12-button control for Vive. We have gone to a place where, can we get to that single Home button simplicity and use integrated reality to fill in for the complexity, along with gesture and voice, which is coming to the system? So yes. We’re going for a very approachable simplicity. A lot of it’s there. I’ve put this thing on my eight-year-old son. He started playing Create and he just understood the system. It was very natural to him.

VentureBeat: But you want to be able to do very complex things with these simple controls.

Ficklin: Yes, absolutely. There are ways to accomplish that by using the spatial as well. But there’s also an idea that with a layer–the mobile companion app is a control. Gestures can be control. Voice can be control. With these layers, you start being able to build combinations that are powerful.

VentureBeat: Like when you had a button on your iPhone to start an app. That took half a dozen line commands that you used to have to put in and turned them into one button.

Ficklin: Right. The way they accomplished that was by controlling context. When you pressed the Home button you knew what it was going to do. We control context slightly differently because we’re in spatial computing. It’s what you’re looking at. Look, grab, and place. Look, swipe, and select. I can have four apps up, look at one, hit that button, and it’ll go back. I can look at empty space, hit the button, and it’ll spawn a home button. I can look at this, hit the bumper, and get a context menu. What you’re looking at does the same thing as having a single screen here.

That eliminates an entire click in every system that we do on our laptops. There, before we do anything we have to locate the window, put it in focus, and then we begin interacting. It’s still a simple model. It’s powerful. But with this one we were able to do another level of simplicity because we know those things. A laptop doesn’t know what you’re looking at. We know where you’re looking.

That’s going to get more and more powerful as we advance the system. Right now we’re using head pose rays, right? But there’s nothing, with further software development, to stop us from even knowing where your pupils are looking and using that to make it even more intuitive and powerful.
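The “look, then press” model in this answer amounts to letting gaze choose the context for a button press, with no separate focus click. The Python below is a hypothetical sketch of that dispatch, not LuminOS code. It assumes the head pose ray has already been resolved to the name of the thing being looked at (or `None` for empty space), and that “that button” in the examples is the home button. The action strings and the `resolve_action` function are invented for illustration.

```python
from typing import Optional, Sequence


def resolve_action(button: str, gaze_target: Optional[str],
                   open_apps: Sequence[str]) -> str:
    """Decide what a button press means from what the user is looking at."""
    if button == "home":
        if gaze_target is None:
            # Looking at empty space: bring up the home menu there.
            return "spawn_home_menu"
        if gaze_target in open_apps:
            # Looking at an open app: go back within that app.
            return f"back:{gaze_target}"
    if button == "bumper" and gaze_target is not None:
        # Looking at any app or object: show its context menu.
        return f"context_menu:{gaze_target}"
    return "ignored"


# Four apps open at once; gaze alone decides which one a press applies to.
apps = ["facebook", "twitter", "instagram", "whatsapp"]
first = resolve_action("home", "twitter", apps)
second = resolve_action("home", None, apps)
third = resolve_action("bumper", "terrarium", apps)
```

Swapping the head pose ray for eye tracking, as Ficklin suggests may come later, would change only how `gaze_target` is computed and not this dispatch step.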

Above: Technology has to be easy to use.

VentureBeat: Do you think we’re already there as far as what we need, or are there things we haven’t invented yet? We have VR hand controls with some finger detection going on, but some people just want to get rid of those and say, “Give me 10 fingers in VR.” That’s pretty complex, though, a lot of things to keep track of.

Ficklin: What’s complex about the 10 fingers is there’s not a convention for it in computing. You have to learn and make up this whole new model. Or you can go to pure direct manipulation, but that’s tiresome. It goes back to how we’re tool-using creatures for a reason. Imagine picking a Netflix movie with a 10-finger model. Unless I can get down to real micro-gestures, you’re having to push all these things around to find a video. Do that for 30 seconds and you’re exhausted. You just want a mouse or a control.

To answer the broader question, instead of just the example, all the parts and pieces are there. What needs to be built is the coordinated model and the technology that allows these things to be strung together. In my conversational computing example, that’s a combination of gesture and voice. The user intuitively knows the system, but technology needs to be able to string those moments together and capture them as a command. That’s where the work lies. There’s a lot to be done.

VentureBeat: What’s ahead for you, then? How much exploratory work or research is there, relative to actually building things?

Ficklin: That is the work ahead. There’s also just the work of knowing what we know, but needing to turn it on. Remember that the iPhone launched without copy and paste. It was quite some time before we got copy and paste. That was two things: bringing the user along into this new model of computing to the point where they could understand, and getting the technology ready. It ended up being an over-the-air update quite a long ways in. Switching between apps, the hold button, that wasn’t there the first day. It took a while. These are systems that need building. What’s up next, there’s a lot of little moments that are going to come in with each update. It’ll just get simpler and simpler.

I’m really excited. There’s a lot here in this device. It’s why we signed up for this long-term journey. It’s an opportunity to build the next pattern for computing. There’s a lot of promise here. From a guy who’s a product designer – I spent the last 20 years working on just about every breaking technology – this one is really exciting, because with the convergence of features and sensors, the amount of platform in one place, it has a lot of promise to be more than just a console. It really will be a mobile wearable computer. I think people will have a lot of reasons to put it on for their digital lifestyle.

We live a digital lifestyle now. We love it. I joked in my keynote, people have walked into ponds in the middle of their digital lifestyle. You see these funny videos on YouTube all the time. It’s not that phones are going away, but we’re ready for another pattern where we can be more heads-up present and aware of our environment, and where the environment can be more present and participatory in our computing. Now our digital lifestyle can come out.

Augmented reality has been treated as a marketing trick for a little too long. Put a Heineken logo on that wall, put up some arrows that tell you where to buy a Stella Artois. It’s all about putting information in the real world. But the truth is, what we want out of AR is our digital lifestyles coming into the world, and we want the world to participate with the digital as much as the digital participates with the world.

Above: The look and feel of augmented reality

VentureBeat: I think about my 85-year-old mother, who has dementia. She could never figure out how to use an iPhone. She remembers how to do things the old way – how to use a rotary-dial or touch-tone phone – but a touchscreen phone, she had no idea how to use it. I constantly get calls from her because she touches my name on the screen. It has all these specific features to make it easier for her, like voice recognition that turns my voice into captions on the screen, because she’s hard of hearing, but she can’t get the basics of using the phone.

Ficklin: You just illustrated the power of convention. It’s funny. The “mixed” in mixed reality, as much as it might be the digital and physical world turning into a new “real” world, may also be the mixing of conventions. You could have a bell curve of computer users being able to approach the device. From my point of view, this is not something that’s designed only for early adopters. This looks like a platform that’s designed, at some point, to reach out to all computer users.

It breaks out of the console model, right? Consoles have always had trouble leaving early adopters, even though they offer amazing experiences for entertainment and socialization. The way it’s locked to the TV – what we used to call the 10-foot computing experience – has always stopped it.

VentureBeat: It’s like, why can’t you use that 16-button controller? What’s the matter with you? That could be the measure of success in the future, what slice of humanity can use this thing.

Ficklin: Exactly. A lot of living rooms have that Xbox One, but then they watch their streaming through a Roku or an Apple TV that has just a couple of buttons. It’s fascinating. The model is important. That’s what we’ve circled back around to.