Sitting in a press Q&A with Jensen Huang, the CEO of Nvidia, is like listening to a comedy routine. I appreciate that because so few tech CEOs are willing to engage in a dialogue and crack jokes with the media.

Huang’s sessions are unique because he’s one of those billionaires who doesn’t muzzle himself in the name of corporate propriety. He also has a unique command of today’s relevant technologies, including self-driving cars and the finer points of real-time raytracing and its applications in games like Battlefield V. One moment he cracks a snide remark about rival Advanced Micro Devices’ “underwhelming” graphics chip, and the next he gives a serious homily about chip designer and former Stanford University president John Hennessy.

Huang engaged with the press again, as he did last year, at CES 2019, the big tech trade show in Las Vegas last week. It came after Nvidia’s press event where it disclosed the Nvidia GeForce RTX 2060 graphics chip and dozens of design wins for gaming laptops, as well as after rival AMD’s keynote talk at CES. He was at his bombastic best.

I was at the press Q&A and asked a couple of questions, but it was interesting to hear Huang field questions from the larger press corps at Morel’s restaurant in the Palazzo hotel in Las Vegas. He touched on the blockchain mining crash that shaved $22 billion from Nvidia’s market value and Nvidia’s new autopilot for Level 2+ autonomous vehicles.

Here’s an edited transcript of the Q&A session.

Above: Jensen Huang, CEO of Nvidia, answers questions at CES 2019.

Jensen Huang: We announced four things. The first thing we announced was that [the Nvidia GeForce] RTX 2060 is here. After so much anticipation, the 2060 is here. Raytracing for the masses is now available. That’s the first news that we announced. It’s available from every single add-in card manufacturer in the world. It’s going to be built into every single PC manufacturer in the world. It’ll be available in every country in the world. This is a gigantic launch. Next-generation gaming is on.

The graphics performance is obviously very good. It’s designed to address about a third of Nvidia’s installed base, a third of the gaming installed base. That part of the installed base has GPUs like 960s, 970s, and 1060s. The 2060 is a huge upgrade for them. It’s priced at $349, incredibly well-priced. We’ll do incredibly well with this. We announced that it’s also going to be bundled with either Battlefield V, with raytracing, or Anthem, which is about to come out.

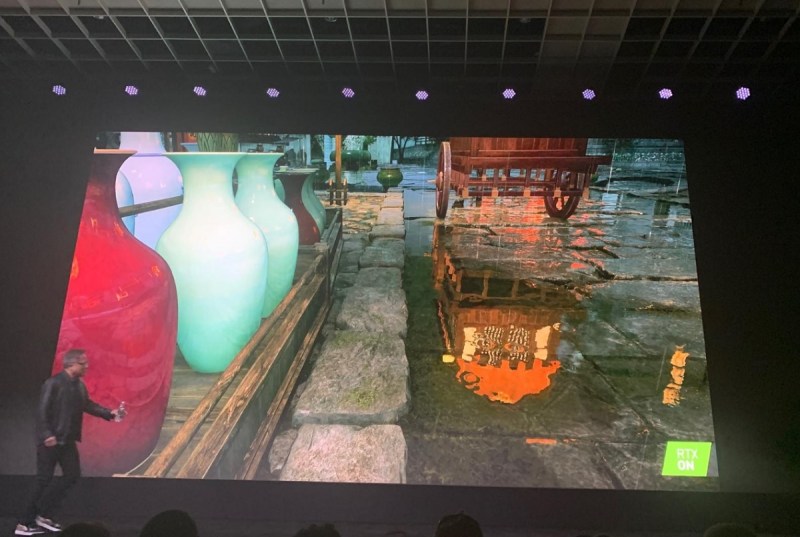

We showed, for the very first time, that it’s possible to play with raytracing turned on and not sacrifice any performance at all. That’s something nobody thought was possible. The reason for that is, when you turn on raytracing, the amount of computation that’s necessary goes way up. Instead of processing triangles, you’re now processing light rays. The amount of computation necessary for raytracing, we’ve known for a long time, is many orders of magnitude more intensive than rasterization.

Above: The Alienware Area-51m with Legend design.

The solution we created was to use AI to learn from a whole volume of wonderfully rendered images. We taught this AI that if you solve this image with fewer pixels completed, you can predict the remaining pixels with incredible fidelity. We showed this technology – it’s called DLSS, Deep Learning Super Sampling – rendering two images, and the image rendered by DLSS is just — you’re sitting there, 1440P, gigantic screen, and the beauty pops out. For the first time we can raytrace, turn on AI, and boost the performance at the same time.

As a result, when we turned on raytracing on Battlefield V, versus no raytracing, the performance was the same. You get beautiful images and you can still play at very high speeds. That was the magic of RTX, a brand new way of doing computer graphics. This is the beginning of the next generation for us.

The second thing we announced was a record number of notebooks built for RTX GPUs. 40 brand-new notebooks are available starting January 29. This cycle, we have designed into more laptops than at any time in the history of our company. Not only that, but we embedded this technology two years ago, called Max-Q. Max-Q is the point where you get maximum efficiency and maximum performance at the same time. It’s that knee of the curve.

It’s not exactly the knee in the curve in the sense that there’s some plot and we’re running at the knee of the curve. It simply means we’re optimizing for maximum performance and maximum efficiency at the same time. It’s a GPU architecture solution, chip design, system design, system software, and a connection to the cloud where every single game is perfectly optimized for every laptop to operate at the Max-Q point. The cloud service is called GeForce Experience. It has Max-Q optimization.

Working with all the notebook vendors, we’ve now announced 17 Max-Q notebooks. Last year we had seven. Just in the first month of this year we have 17. The number of Max-Q notebooks coming out is incredible. What you get is essentially a very powerful notebook, but it’s also thin and light, 18mm thick. It’s a 15” bezel-less notebook with a 2080 inside. It’s kind of crazy. It’s twice the performance of a PlayStation 4 at one-third the volume, and you get a keyboard and a battery to go with it.

Max-Q has made it possible for gamers to basically have a powerful gaming platform they can take with them wherever they go. The notebook gaming market is one of the fastest growing game platforms in the world. It’s one of our fastest growing businesses. The vast majority of the world’s gamers don’t have gaming notebooks today. That’s going to change with RTX and Max-Q. It’s going to change starting January 29.

The third announcement we made yesterday — that was the gaming side. There are other announcements we made. For example, we think that building a gaming laptop is different from building a laptop for gamers. The reason for that is, a gamer is a human too. They want to do things like 3D design and video editing and photo editing. They want to do all the things we love to do, and they just happen to be a gamer. It turns out that a lot of gamers love design, love to create in digital. We made it possible for the RTX laptops to not only be great gaming machines, but to render like a workstation, to do pro video like a workstation. For the first time you can edit raw 6K video, cinematic video, in real time, on a notebook. Impossible at any time before now.

We talked about the work we did with OBS to integrate the professional quality video encoder inside the RTX and hook it up to the OBS gaming broadcast system. People love to broadcast their games to Twitch and YouTube. Eight million broadcasters around the world. We’ve made it possible for them to broadcast without having two large PCs. They can now broadcast on just one RTX card, or even on the notebook. They can use their laptop as a broadcasting workstation.

Above: Justice, a game in the works in China, shows off RTX.

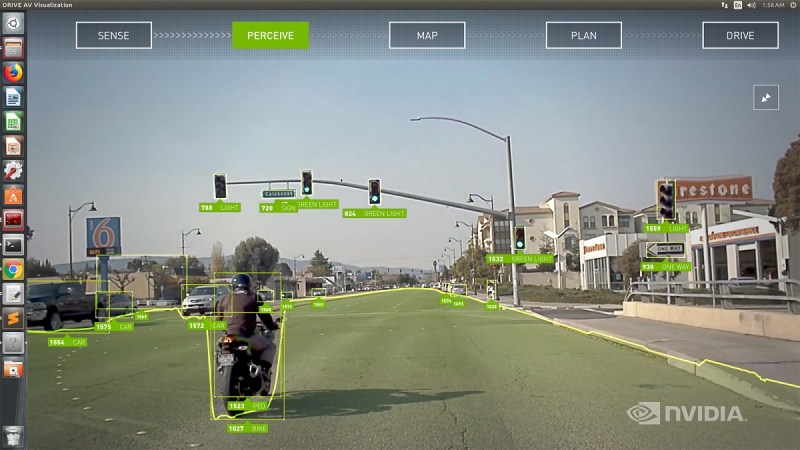

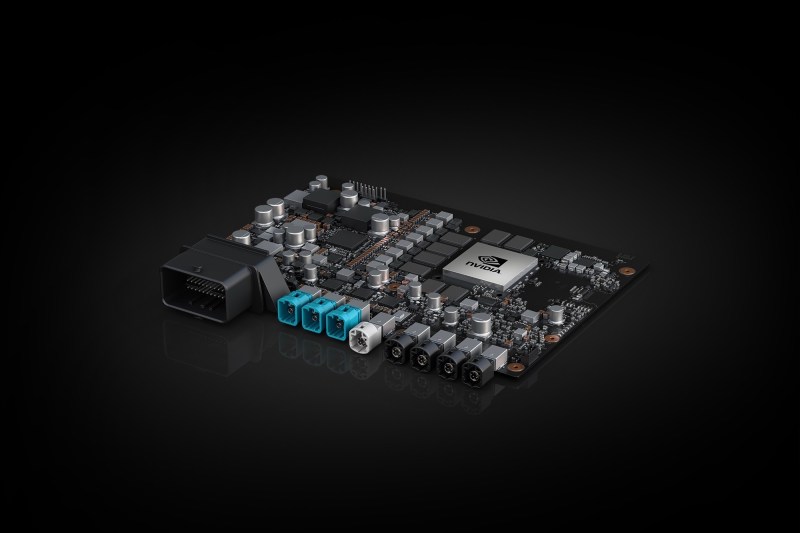

That’s all the gaming stuff we announced on the first day. The second day we announced two things. We announced a commercially available Drive Autopilot level 2+ system, with fully integrated chip, computer, software stack, and partners in ZF and Continental who are going to take it to market. It will be available in cars starting in 2020. It’s powered by Xavier, the world’s first single-chip autonomous driving computer. It will be able to achieve level 2+. Level 2+ is all the functionality you would expect an autonomous car to have, except a person has to be in the loop. The driver is still responsible.

However, this car should be able to do things like — you’ll say, “Take me to work.” It pulls out of the garage, goes down the driveway, gets on the highway, stops at signs, stops at lights, does automatic lane changes, and brings you all the way to work. This should be able to do that. We also demonstrated — if you come to our booth you can see the demonstration. We drove 50 miles around Silicon Valley, the four highways that define Silicon Valley, a whole lot of lane changes, traffic everywhere, crazy on-ramps and off-ramps. Completely hands-off.

We’re taking that to market now. The Xavier chip is in full production. It’s the first auto-grade self-driving car system. The first customer for our autopilot system is Volvo, and there are others to be announced.

We also announced yesterday that Daimler has asked us to partner with them to build their next-generation car computing system. The car computing system will consist of two things: one computing system for autonomous driving and one computing system for the entire AI user experience and user interface system. This computer system, this computer architecture, will be unified in such a way that software can be easily updated. It means that future cars will be AI supercomputers. It will be software-defined. It will remove an enormous number of ECUs that are currently inside cars and unify it into just two computers. Software will define next-generation cars.

Future car companies are going to have to be software companies. They have to have access to great batteries. They have to be great at design. They have to understand the market. But from an engineering perspective, future car companies have to be software companies. Daimler knows this. They’re partnering with us to create their next-generation car computing architecture. We’ll work with them to ship it as soon as we can.

Question: To clarify on the Mercedes-Benz move, should we think of that as Drive plus another system, or is it going to be the next-generation of Drive plus a next-generation–

Huang: We’re already partnering with Daimler today on two things. We worked with them on the MBUX AI user experience, which is shipping today. We also partnered with them on robot taxis. We’re already working with them on AV and UX, the cockpit AI driving unit. What we announced is their next-generation architecture, which will likely have two computers, two processors in one unified computing architecture. It will be beyond Xavier, beyond what we’re currently doing. The next generation of that.

Question: What’s your reaction to AMD’s announcement on Radeon VII?

Huang: Underwhelming, huh? Wouldn’t you guys say?

Question: Why do you think so?

Huang: Because the performance is lousy. It’s nothing new. No raytracing, no AI. It’s a 7nm chip with HBM memory. That barely keeps up with a 2080. If we turn on DLSS, we’ll crush it. If we turn on raytracing we’ll crush it. It’s not even available yet. Our 2080 is all over the world already. RTX is offered by every AIC in the world. I think theirs is going to be available on their website? It’s weird. It’s a weird launch. Maybe they thought of it this morning. Okay, that was too — you know what? Can I take all that back?

Look. I’m not going to make any more jokes. You guys know how funny I can be. I make myself laugh. But it’s underwhelming.

Question: Can you talk about how tariffs and relations with China are possibly affecting your supply chain and selling into that market?

Huang: The tariff thing is not an issue for us. The reason for that is because all of our system assembly is largely done in Taiwan. It doesn’t really affect us. Our business in China — I hate to bring this word up. I’m not going to. No.

Our business, as you guys know, was affected because we have excess inventory. That’s largely been a near-term business factor for us. We announced that, because of the post-crypto decline, we had excess inventory approximately equal to something that would have taken us another one to two quarters to sell. That affected our business and our earnings, the guidance for this last quarter. What’s going to happen is, we will sell through all of our inventory post-crypto in the channel between one to two quarters from last quarter. The excess inventory in the channel has been reported so excessively that I hope you guys don’t need to report it again.

When you have something like that happen in China, happening all over the world — as far as we’re concerned, it’s not a factor in the longer term. Gaming is just fine. Gaming laptops had a record year in China last year. As far as I’m concerned, it’s fine.

Above: Real-time ray tracing scene on Nvidia RTX.

Question: Do you see any pickup in crypto?

Huang: God, I hope not. That’s my wish for this year. Can we all please — I don’t want anybody buying cryptocurrencies, okay? Stop it. Enough already. Or buy Bitcoin, don’t buy Ethereum.

Question: Have you considered moving the manufacturing of some of your components to Mexico?

Huang: We manufacture wherever it makes sense. We manufacture with our partners. We don’t do manufacturing ourselves. We outsource all manufacturing. Wherever our major manufacturers manufacture, we love it. Manufacturing all over the world has the benefit of efficiency. That’s great. I support it.

Question: There’s been a lot of talk about 5G this year.

Huang: I’m happy to get 3G sometimes! I was making a phone call just now, was that 2.2G?

Question: How do you see the opportunity around that for Nvidia?

Huang: I don’t think it’s going to change anything. The reason for that is because you’ll never have perfect coverage everywhere. You’ll never have instant coverage, perfection, everywhere. You’ll always have to have hybrid cloud. That’s why your laptop has hybrid cloud. That’s why your phone has hybrid cloud. It’s essential. I don’t think it’s going to change anything. But when you download something, it will be a little faster. When you want to stream a movie, it’ll turn on faster. It’s good. I’m in favor of fast.

Question: John Hennessy and David Patterson have recently spoken about domain-specific computing as a new form of computer architecture. You recently added RT Core and Tensor Core, these new domain-specific architectures, as well as Xavier. Are you going to add more and more domain-specific architectures into your GPUs or SOCs?

Huang: First of all, both Hennessy and Patterson, they were dual recipients of the Turing Award. It’s essentially the Nobel Prize for computer science. I can’t imagine two people, two friends that I consider more deserving.

The fundamental premise of domain-specific computing is that Moore’s Law has ended. That’s the beginning of the sentence. It’s not possible to continue to achieve performance without increasing cost or power, which means if you’d like to have two times the performance, it’ll cost you twice as much, or consume twice the power. Moore’s Law used to mean 10x growth every five years, 100x every 10 years. Right now we’re growing at a few percent per year. Every 10 years, maybe only 2x, maybe only 4x. It used to be 100x. Moore’s Law has finished.
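To put the growth figures Huang quotes on a common footing, the short Python sketch below converts each "Nx over Y years" claim into the compound annual rate it implies. The function name `annual_rate` is illustrative and not from the talk, and the only inputs are the multiples mentioned in the answer above.

```python
def annual_rate(total_factor: float, years: float) -> float:
    """Compound annual growth rate implied by growing total_factor-fold over `years`."""
    return total_factor ** (1.0 / years) - 1.0


# Multiples quoted in the answer: (label, total growth factor, years).
claims = [
    ("10x every 5 years", 10, 5),
    ("100x every 10 years", 100, 10),
    ("2x every 10 years", 2, 10),
    ("4x every 10 years", 4, 10),
]

for label, factor, years in claims:
    print(f"{label}: about {annual_rate(factor, years):.1%} per year")
```

Under this arithmetic, 10x in five years and 100x in ten years are the same pace, close to 58 percent per year, while 2x to 4x per decade works out to roughly 7 to 15 percent per year.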

If you look at what a GPU is, the G is not “general.” The G is “graphics.” It was a domain-specific processor. We took the GPU, which is very good at linear algebra and simultaneous equations. Simultaneous multi-variable equation-solving is essentially what computer graphics is about. You could apply the basic approach of this domain processor, the GPU, to some other domains. Over the years, with Cuda, we very carefully opened up the domains a little bit at a time. But it’s not general-purpose. In fact, the GPU is the world’s first — Cuda is the world’s first domain-specific processor. That’s the reason why, in every single talk, Hennessy and Patterson referenced the GPU. We’re in every single one of their talks, because we’re the perfect example of a first in that area.

We’re very careful to select what domains we’ll build to serve. I completely believe in domain-specific architectures. We have three basic domain languages. With a domain-specific architecture you need a domain-specific language. The world’s first DSL was invented by a company called IBM. The name of that language was SQL. SQL is probably today the most popular domain-specific language still in IT. It’s designed for one purpose: file systems and storage. It’s domain-specific computing. That language is very powerful and very useful.

Then we invented a domain-specific language called cuDNN. That’s the SQL of deep learning. We invented another domain-specific language recently called Rapids. Rapids, you should look it up. It’s really important. It’s the DSL of machine learning. Not deep learning, but machine learning. Then we invented another DSL called OptiX, which is the language of RTX. OptiX is what inspired Microsoft’s DXR and Vulkan RT. We created these domain-specific languages.

Above: Nvidia’s autopilot can sense pedestrians and hazards.

Every market we go to, we have a domain-specific language. Every domain-specific language, underneath, has an architecture.

Question: Are you going to expand your domain-specific languages?

Huang: Yes, but very carefully. We don’t want to build a general-purpose chip. A CPU is a general-purpose chip.

Question: I’d like to get some of your thinking on why you named the new self-driving product Autopilot. Tesla has faced recriminations for naming its system, which has similar features, Autopilot. It conveys a level of autonomy beyond what it actually provides. Why that name? Are you worried about conveying something that it doesn’t actually provide?

Huang: We created the autonomous vehicle computing system. You can’t actually drive it. You still need wheels and other body parts. Whenever those cars come out, the manufacturers will figure out how they want to name things. The reason why we named it Autopilot is because it defines — today’s Model 3 and Model S that use Nvidia’s Drive PX, which is a few years old, somewhere between one-fifth and one-tenth the performance of Xavier — it defines the state of the art in level 2 driving today.

Most people, when you say level 2, it’s hard to imagine what it is. There’s no popular version of level 2. But Autopilot is such a well-known entity that we thought it was a good way to calibrate people’s standards. We also think that every single future EV will have to have at least the capabilities that today’s Model 3 and Model S are shipping with respect to AD. Could you imagine where the Model 3 and the Model S are going to be in 2021, in terms of software sophistication? It’s going to be incredible.

Question: The problem, as you say, is that people don’t understand it, so you’re trying to formulate a term that works. But I think that term provides — it conveys more autonomy than it actually provides right now at level 2. Level 2 is basically ADAS, a more advanced ADAS. Calling it Autopilot….

Huang: First of all, level 2 is not meant to be just ADAS.

Question: It’s not meant to be, but that’s what it is at this point.

Huang: I know, which is a tragedy.

Question: But you’re saying it’s okay to call that Autopilot. I don’t see why that’s the case. If people are confused….

Huang: Well, what would you call it? ADAS, basically? I completely disagree. It is completely nonsense to compare what Tesla has in the Model S, in terms of autopilot capability, and then ADAS level 2. It’s completely nonsense. We have to respect that company a little more than that. It drives me to work every day. It’s the only driver assistance software in a car that actually works, in my opinion. It’s getting better all the time.

Question: But “Autopilot” conveys a higher level of autonomy.

Huang: It just depends on what — they call it Autopilot. I’m fine with it. If you have another idea, that’s fine. But I think ADAS is wrong. Level 2 has been contaminated, has been destroyed by ADAS. Today’s L2, if you read the specifications of L2, it doesn’t say “ADAS Plus.” But unfortunately some people took an ADAS chip and added a bit of software to it and called it level 2. It’s a tragedy, and it’s going to change. Quite frankly I think that if everybody raised their car’s driving assistance functionality to the level of a Model S, it would be a very good thing.

Above: Nvidia’s new Xavier system-on-a-chip.

Question: In your autonomous driving work, why aren’t you yet aiming for level 3 or higher?

Huang: Because we should go to level 3 by walking through level 2+. The reason for that — the next-generation level 2+ will have tactile as well as AI driver monitoring to make sure you stay alert. There’s going to be a lot of functionality to make sure that when you are in charge, you stay in charge. You’re not dozing off or pretending to hold on to the steering wheel by hanging a pair of fake hands on there, something like that. You’re really going to have to pay attention.

Paying attention with Autopilot turned on is still wonderful. I do it every day. If you haven’t had the chance to enjoy it every day, you owe it to yourself to try it and then write about it. Try it every day and then write about it.

Level 5 is going to be a lot easier than level 3 and level 4. The reason for that is, at level 5 the speed is reduced. They’re taxis. They travel 30 miles per hour, which is fine in most cities. Level 3 and level 4, the car could be on a highway, because it’s a passenger-owned vehicle. I believe level 4 will happen. There’s no question about it. But we have to go step by step.

Question: How do you feel about the level of game support for RTX? It seems to be lagging a little.

Huang: With every single generation, we’ll start from zero. When we announced programmable shaders, we started from zero. When we announced tessellation we started from zero. When we announced displacement mapping we started from zero. It just has to go step by step.

The thing we’ve done with RTX is, baseline performance is so much better than the last generation. It’s the right GPU to buy no matter what. And number two, with every single new game that comes out, we’re either going to add DLSS to it, to enhance the imagery, or we’ll add DLSS and raytracing, turn all of raytracing on, as soon as we can. We’ll work with every developer for future games. As they get released we’ll add more and more.

Question: In Latin America, in places like Argentina, where I’m from, the value of the US dollar is shooting up. I’d love to have an Nvidia card, but they’re really expensive. What do you think about markets like this, where it’s becoming really difficult for us to afford video cards? Even a 2060 is still really expensive for us.

Huang: I don’t know the answer to that. The import tariffs are very high. I’ve heard of people flying to the United States to buy a camera or a TV and import it themselves. So I don’t know the answer. It’s really a question about everything in Latin America. I wish I had an answer.

Question: Can you talk more about the possibility of level 5 autonomous driving? We’ve heard people saying level 5 is impossible, but would you agree?

Huang: It depends on what level 5 is. Level 5 to me is a robot taxi. As far as I’m concerned, it’s not impossible. It’s not perfect. They have to keep refining it. But there’s every sign it will work. Some descriptions of level 5, they say you can drive it anywhere. You can’t drive under the ocean. In Arizona, within the digital fence, you can drive anywhere. That, to me, is level 5.

Question: What do you think about the viability of HBM (high bandwidth memory) in consumer products?

Huang: It’s just way more expensive for consumer products. But memory prices will come down. There’s nothing wrong with HBM. I love HBM. But I love GDDR6 (graphics memory) way more.

Above: Nvidia’s headquarters

Question: Can you tell us more about your partnership with Volkswagen?

Huang: There’s not much to go into. We’ve worked with them on a lot of projects. They’re a long-term partner of ours. It’s relatively well-known that we’re partnering with them on future products. There’s nothing to be announced right now, so I hesitate to say much more.

Question: With autonomous driving becoming a reality, do you have a next target as far as major areas for your company to move into?

Huang: I think you should come to GTC. [laughs] It’s in just a few months. We have some nice surprises for you.

Question: Can you talk more about how you’re training AI to work in autonomous vehicles?

Huang: Let me first explain how deep learning works. The benefit of deep learning is that it can have a great deal of diversity. Meaning, the way we teach our AI — we teach the AI examples of cars and examples of pedestrians and motorcycles and bicycles and trucks and signs. We give it positive examples. We also give it a lot of noisy examples. We also give it a lot of tortured examples. We change the shapes and sizes. We add a lot of noise. Then we try to fake it. We try to put all kinds of random things in to teach it to be more robust.

We’re trying to make the network as general as possible, as diverse as possible, and as robust as possible, just like humans. With our brains, we can see a fruit and say, “This isn’t exactly the same as every other fruit, but it’s also a fruit.” We generalize. The brain has a great deal of diversity. With many different types of fruits in many different environments, I can still recognize a fruit. Your brain is also robust. When I show you a plastic fruit, you’re not tricked into thinking it’s real. We have to teach AI to be robust, not to be easily confused.

The teaching of AI is an art in itself. Robustness, diversity, and generalization are all characteristics we’re trying to teach.
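The idea of feeding a network noisy and distorted versions of its training examples is commonly called data augmentation. The Python sketch below is a minimal illustration of that general idea, not Nvidia's pipeline: the function names, the tiny grayscale "image" format (rows of floats between 0 and 1), and the specific perturbations (mirroring, brightness change, Gaussian noise) are all assumptions chosen for the example.

```python
import random


def augment(image, rng, noise_std=0.1):
    """Return a perturbed copy of a grayscale image (rows of floats in [0, 1])."""
    rows = [row[:] for row in image]
    if rng.random() < 0.5:
        rows = [row[::-1] for row in rows]  # mirror left to right
    gain = rng.uniform(0.7, 1.3)  # random brightness change
    # Add Gaussian noise to every pixel, then clamp back into [0, 1].
    return [
        [min(1.0, max(0.0, p * gain + rng.gauss(0.0, noise_std))) for p in row]
        for row in rows
    ]


def augmented_dataset(examples, copies, seed=0):
    """Keep each (image, label) pair and add `copies` perturbed variants of it."""
    rng = random.Random(seed)
    out = []
    for image, label in examples:
        out.append((image, label))
        for _ in range(copies):
            out.append((augment(image, rng), label))  # the label is unchanged
    return out


# Hypothetical 2x2 "image" labeled as a car.
examples = [([[0.0, 0.5], [1.0, 0.25]], "car")]
dataset = augmented_dataset(examples, copies=3)
print(len(dataset), "training examples from", len(examples), "original")
```

Each perturbed copy keeps its original label, so the network sees many variations of the same object and is pushed toward the robustness and generalization described above.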

Question: One of the big surprises at the press conference on Sunday was support for adaptive sync. Can you elaborate on that decision and why you’re supporting it after competing against it so long?

Huang: We never competed against it. It was just never proven to work. As you know, we invented the whole area of adaptive sync. We invented the technology. As we were building G-Sync monitors, we were also calibrating and improving the performance of every panel maker and backlight maker and controller maker. We were lifting the whole industry. We could see that even the monitors that just generically went out the door were improving in quality.

Unfortunately, improving in quality does not make it work for adaptive sync, for G-Sync. In fact, we’ve been testing it for a long time. In all of the monitors we tested, the truth is that most of the FreeSync monitors do not work. They don’t even work with AMD’s graphics cards, because nobody tested it. We think that it’s a terrible idea to let a customer buy something believing in the promise of the product and then have it not work.

Our strategy is this: we will test every single card against every single monitor, against every single game. If it doesn’t work, we will say it doesn’t work. When it does work, we’ll automatically leverage it. Even when it doesn’t work, we’ll let people decide, “You know what, I’ll roll the dice.” But we believe that you have to test it to promise that it works. Unsurprisingly, most of them don’t work.

Question: Can you talk about the future of Shield and Tegra, especially now that Nintendo is using the Tegra in their Switch?

Huang: As you know, Shield TV is still unquestionably the best Android TV the world makes. We’ve updated the software now over 30 times. People are blown away by how much we continue to enhance it. There are more enhancements coming. We’re committed to it.

On mobile devices, we don’t think it’s necessary. We wouldn’t only build things to compete for share. If you’ve been watching us over the years, Nvidia is not a “Take someone else’s market share” company. I think that’s really angry. It’s an angry way to run a business. Creating new markets, expanding the horizon, creating things that the world doesn’t have, that’s a loving way to build a company. It inspires employees. They want to do that.

It’s really hard to get people — if you say, “What we are as a company, we’re going to build one just like theirs, but we’ll sell it cheaper,” it’s a weird sensibility. That’s not our style.

Question: Is there any possibility of a new Shield portable or tablet, then?

Huang: Only if the world needs it, or if the customer is underserved. At the moment I just don’t see it. When we first built it — I still have the original Shield portable. Every so often I pick it up and I think, “The world needs this.” But I don’t think so. Maybe not yet. I think Nintendo’s done such a great job. It’s hard to imagine doing that. I love what they did with it.

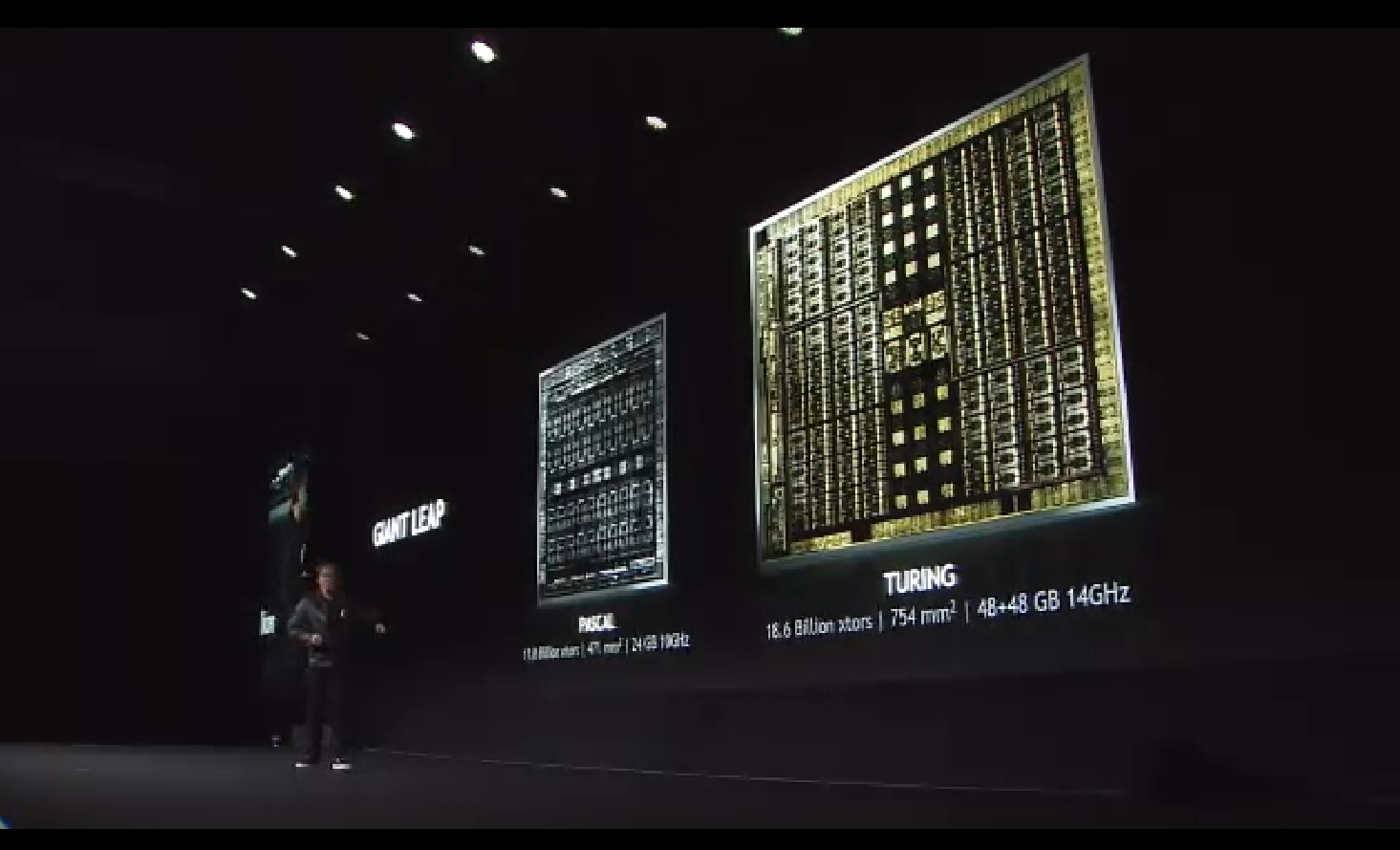

Above: Turing is 754 millimeters square.

Question: Can you talk a bit more generally about AI? It’s a big theme of this show, and it’s obviously embedded in RTX. What is the crossover like to other verticals for you, and what’s your general strategy for leadership in AI amongst chip companies?

Huang: As you know, we were the world’s first computing company to jump into and start building entire systems for deep learning, for AI. I believe that AI has three basic chapters. The first chapter is about creating the algorithms, the tools, and the computing systems for AI. The computing system we created for AI has a processor called V100, an interconnect called NVLink, a system called DGX, a domain-specific language called cuDNN, and it’s integrated into every framework in the world.

That entire stack, the full stack, is chapter one of deep learning. We’ve made it possible everywhere it’s available, every single cloud, every single computer maker in the world. We’ve democratized deep learning. Every company in the world can use it. We have customers in every country, every university, all over the place.

The second chapter is about deep learning being applied to services in the cloud. This is why the internet service companies went first, because they had the data. Google went first, and then Facebook and Amazon and Microsoft. All of the other people who have a lot of internet services — it could be LinkedIn or Netflix — they have lots of data, and they went second. The third phase is enterprise use. The health care industry, insurance industry, financial industry, transportation industry — all of those industries are now really red hot.

And then of course the next generation is where AI comes out of the cloud and into the physical world. It’s the robotics revolution. The first robots will be cars. The reason why is because the industry is large. We can make a difference. We can save lives. We can lower costs. We can make people’s lives better. It’s a very obvious use case. That’s where we’ll focus first.

The second is now manufacturing robots, service robots. The Amazon effect, people buying everything online and in the cloud, is causing delivery channels to change all over the world. There aren’t enough truck drivers, not by any stretch of the imagination. There’s no way to deliver a hamburger to a dorm room for millions of people. It’s just not possible. Now delivery and logistics robots are going to come out — small ones, medium-sized ones — delivering groceries and so on. The robot revolution, that autonomy, that’s the next chapter.

We’re involved in all of this. Our strategy here, from a hardware perspective, is our GPUs and DGX. Our strategy here is all of our cloud systems that we offer to our cloud customers. We call them HGX, for hyperscale. Next is all of our enterprise, all of our partners there. Next is our Drive system and our robotics system, Isaac. We’re just methodically going through building out the future of AI as the technology evolves.

Question: Can you tell us more about your problem with excess inventory and how that will be resolved?

Huang: It’s completely a crypto hangover issue. Remember, we basically shipped no new GPU in the market, to the channel, for one quarter. But the amount of excess inventory and market demand, channel velocity — you just have inventory divided by velocity, and that’s time. We said that it would take one to two quarters for all the channel inventory to sell out. 1080Ti has sold out. 1080 has sold out. 1070 has sold out. 1070Ti has sold out. In several more weeks 1060s will sell out. Then we can go back to business.
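The "inventory divided by velocity" estimate in this answer is a one-line calculation. The Python sketch below spells it out; the function name and the unit counts are hypothetical, since no actual inventory or sell-through figures are given in the session.

```python
def quarters_to_clear(channel_inventory_units: float, units_sold_per_quarter: float) -> float:
    """Time to sell through channel inventory: inventory divided by sales velocity."""
    if units_sold_per_quarter <= 0:
        raise ValueError("sales velocity must be positive")
    return channel_inventory_units / units_sold_per_quarter


# Hypothetical figures, for illustration only.
excess_units = 1_500_000
velocity = 1_000_000  # units the channel sells per quarter

print(f"{quarters_to_clear(excess_units, velocity):.1f} quarters to clear the channel")
```

With these made-up inputs the result is 1.5 quarters, which falls inside the one-to-two-quarter window Huang describes.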

I think the world would be very sad if Nvidia never shipped another GPU. That would be very bad.

Question: What do you think about Intel’s efforts in AI, as well as their effort to get into graphics in 2020?

Huang: Intel’s graphics team is basically AMD, right? I’m trying to figure out who’s AMD’s graphics team. There’s a conservation of graphics teams out there. Damn, I’m funny.

We respect Intel a great deal. We work with Intel. You guys don’t see it, because our collaboration is obviously very deep. We build servers together. We build laptops together. We build a lot of things together. The two companies are coordinating and working with each other all the time. There’s a lot less competition between us than there is collaboration. We have to be respectful of the work that they do.

AI is going to be the future of computing. AI, as you know, is not just deep learning. It’s machine learning. It’s data processing. These three areas of computing will define the future of computing. We want to create an architecture that can accelerate all of that, plus the past of computing. People still do things the traditional way. We want to make sure we have a computing architecture that takes the industry forward. I think we’re doing good work there. It’s the future of computing, so it stands to reason that a lot of people are pursuing it.

Question: There have been incidents of vandalism targeting autonomous car test fleets. Do you have any comment on that?

Huang: You should ask the people that vandalized them.

Question: But you also have a test fleet.

Huang: Our test fleets always have people inside. We’ve never experienced any vandalism, partly because I think the Nvidia brand is really loved. People love our brand.

Above: Nvidia CEO Jensen Huang at the new robotics research lab in Seattle, Washington.

Question: Is that a serious answer?

Huang: It’s true. Nobody has ever vandalized our cars. That’s why they’re always so clean. I’m telling you the truth. No one has ever vandalized our cars.

Question: Can we get an update on cloud gaming efforts and where you see us on the road to true streaming of video games? There’s a lot going on out there with Microsoft, Intel, Google….

Huang: Stop. There’s a lot of efforts out there, starting with Nvidia.

Question: Convince me, then.

Huang: Convince you? What do you want? Who’s operating a service right now?

Question: And how is it going?

Huang: It’s fantastic. We have hundreds of thousands of concurrent users. Our strategy is ongoing. First of all, if your question is, “How long before streaming can be as good as a PC?” the answer is never. The reason for that is because there’s one problem we haven’t figured out how to solve, and that’s the speed of light. When you’re playing esports, you need the response in a few milliseconds, not a few hundred milliseconds. It’s a fundamental problem. It’s just the laws of physics.

However, we believe in it so much that we’ve been working on this for a decade. Our strategy is this: we believe PC gaming is here to stay. We believe everyone will at least need a PC, because apparently knowledge is still important. You can’t do everything on TV. You can’t live with TV alone. But you could live with a PC alone. PCs are used by young people all over the world. It’s their first computing device, or maybe second after a mobile device. Between those two devices, those are the essential computing platforms for society. We believe that’s here to stay.

When people buy their games for PC, on Steam or Uplay or Origin or Battle.net, what we want to do with GeForce Now is take that experience, checkpoint it, and take it somewhere else, wherever they happen to be. If they’re in front of a TV, fantastic. If they’re in front of a mobile device, fantastic. But our core starts with PC. That’s our center point. That’s why GeForce Now plays PC games. That’s why GeForce Now allows you to take the PC games you’ve purchased and play them anywhere. That’s why GeForce Now runs every game that’s available. No porting is necessary.

Our strategy is very different. There are other strategies around streaming games, like Netflix or something like that. I think that’s terrific. The more expansive the gaming market is, the better. It will never replace the PC.

Above: Jensen Huang, CEO of Nvidia, at CES 2019.

Question: Are you going to take DLSS to broader adoption in the industry?

Huang: The answer is yes. The way we’ve done it, it’s in several ways. We do it by writing papers about it. We’re taking our knowledge of AI and publishing it. It’s a great way to spread knowledge on AI.

Question: DLSS is still its own network, its own model.

Huang: It’s a product manifestation of the technology we’ve described. It won’t help unless the network is optimized. It’s not super helpful otherwise. But the technology is helpful for everyone.

Question: You mentioned your cloud business and the data center. Can you talk more about what’s happening there?

Huang: I’d love to. Do you have another hour? The reason why I haven’t is, today is CES. Talking about enterprise in Las Vegas — I was laughing with the guys earlier. I said, “You know what I’m going to talk about today? I’m going to announce all of our new enterprise virtualization technology!”

Question: I don’t think I’ve seen the PC gaming community, in 20 years, react so negatively to an Nvidia launch. Is there anything you can say to the community to make them feel better about RTX? It’s been so polarizing.

Huang: I’d like to say, “2060.” They’re right. They’re right in the sense that — we just weren’t ready to launch it. We weren’t ready to launch it. All we had available to launch were the extreme high-end versions. For new architectures, quite frankly, that’s a good place to launch. But nonetheless, they were right. They were anxious to get RTX in the mainstream market. They were chomping at the bit. They were frustrated that, when I was in Germany at Gamescom, when I announced it, we didn’t have a price point in the mainstream. We just weren’t ready.

To that, I’d like to say that now, we’re ready. It’s called 2060. $349. Twice the performance of a PlayStation 4. It’s lower-priced than a game console. They get a next-generation game console today, and they get AI, and they get to do 3D modeling, and they can do broadcasting and professional video editing. If they like it on the desktop, great. If they’d like it in a laptop, great. We have 40 different laptops to choose from. My answer to all of their frustration is the 2060. It’s here.