This article is part of the Technology Insight series, made possible with funding from Intel.

________________________________________________________________________________________

When you turn the key in your car’s ignition, plug an appliance into a wall socket, or double-click a document stored on your hard drive, you know what’s going to happen next. These things just work. But in the cloud, your valuable files are in someone else’s hands. Are you sure you can count on the outcomes every time?

Before you hand over your object storage, any cloud storage provider you’re considering should answer a few questions. Can you be certain that the IT foundation your business depends on is well protected? And how likely is it that your data will unexpectedly become unavailable?

Durability: So good, it’s almost academic

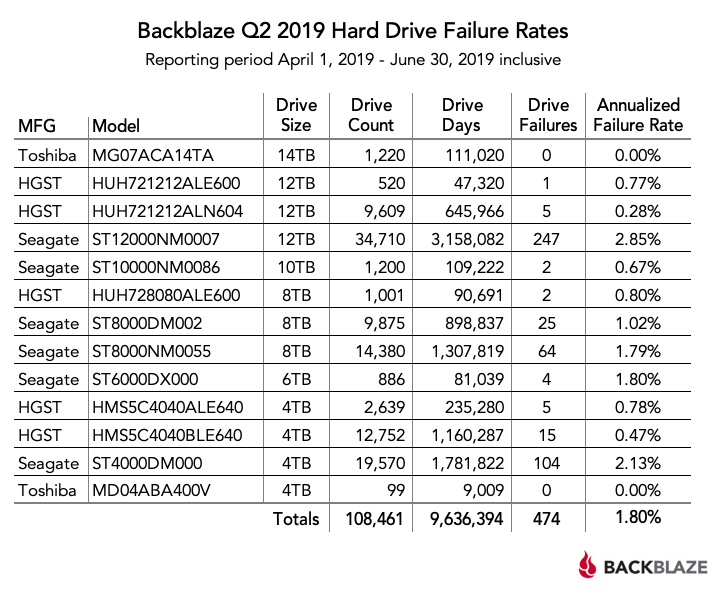

Hard drives die, and in a datacenter loaded with hard drives, they die often. According to cloud storage provider Backblaze’s latest published hard drive statistics, 474 of its 108,461 disks failed in the second quarter of 2019, an annualized failure rate of 1.8%. Yet none of those failures affected customers, because durability, or the health of your data, is of the utmost importance to companies like Backblaze, Microsoft, Amazon, and Google. They take extreme measures to prevent hosted files from getting lost or becoming corrupted.
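
Backblaze computes its annualized failure rate (AFR) as drive failures divided by drive-years of operation. The sketch below applies that formula to the Q2 2019 figures under a simplifying assumption of our own: that all 108,461 drives ran for the full 91-day quarter. Backblaze counts actual drive-days, which is why this estimate lands near, rather than exactly on, the published 1.8%.

```python
def annualized_failure_rate(failures: int, drive_days: float) -> float:
    """Failures per drive-year of operation, expressed as a percentage."""
    drive_years = drive_days / 365
    return failures / drive_years * 100


# Simplifying assumption: a constant fleet of 108,461 drives for the 91-day quarter.
drive_days = 108_461 * 91
afr = annualized_failure_rate(474, drive_days)
print(f"{afr:.2f}%")
```

This prints roughly 1.75%, consistent with the reported figure once real drive-days are used.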

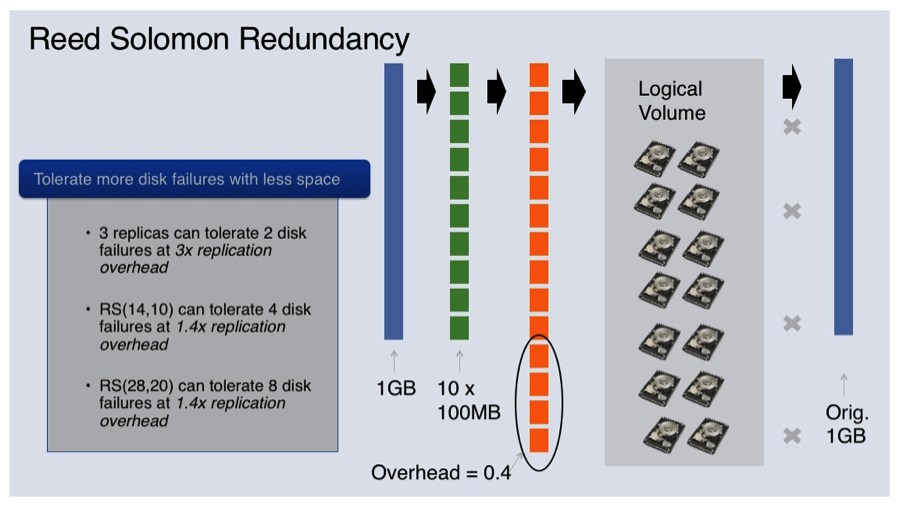

Every major cloud service provider uses a forward error correction technology called erasure coding, which breaks a file into many pieces and then calculates additional parity pieces that can be used to reconstruct the file if any of the originals are lost. According to Ahin Thomas, VP of marketing at Backblaze, the company’s Reed-Solomon-based Java library splits each file into 17 data shards, then calculates three additional parity shards. Those 20 shards are then distributed across 20 different drives.
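
To make the 17+3 idea concrete, here is a minimal Reed-Solomon sketch in its polynomial-evaluation form. It is not Backblaze’s library: for readability it works over the prime field GF(257) with Lagrange interpolation, whereas byte-oriented codecs generally work in GF(2^8) so every shard stays byte-sized (here a parity symbol can equal 256, so shards are lists of integers). For each byte position, the 17 data bytes are treated as values of a polynomial of degree at most 16 at x = 1..17; the parity shards are that polynomial evaluated at x = 18..20, and any 17 surviving shards pin it down again. The function names are illustrative.

```python
# Minimal Reed-Solomon sketch (evaluation form) over the prime field GF(257).
# Illustrative only: byte-oriented codecs generally use GF(2^8) instead.
P = 257  # prime modulus; every byte value 0..255 is a field element


def lagrange_eval(points, x):
    """Evaluate, mod P, the unique polynomial through `points` [(xi, yi), ...] at x."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P  # pow(d, -1, P) is the modular inverse
    return total


def encode(data: bytes, k: int = 17, m: int = 3) -> dict[int, list[int]]:
    """Split `data` into k data shards plus m parity shards, keyed by x = 1..k+m."""
    size = -(-len(data) // k)  # shard length, rounded up
    padded = data.ljust(size * k, b"\0")
    shards = {x: list(padded[(x - 1) * size : x * size]) for x in range(1, k + 1)}
    for x in range(k + 1, k + m + 1):
        # Each byte position defines a polynomial through the k data bytes;
        # the parity symbol is that polynomial evaluated at a new point x.
        shards[x] = [
            lagrange_eval([(i, shards[i][pos]) for i in range(1, k + 1)], x)
            for pos in range(size)
        ]
    return shards


def decode(available: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original `length` bytes from any k surviving shards."""
    if len(available) < k:
        raise ValueError(f"need {k} shards, only {len(available)} survive")
    ids = sorted(available)[:k]
    size = len(available[ids[0]])
    out = bytearray()
    for x in range(1, k + 1):
        if x in available:  # data shard survived: copy it
            out.extend(available[x])
        else:  # data shard lost: interpolate it from k survivors
            out.extend(
                lagrange_eval([(i, available[i][pos]) for i in ids], x)
                for pos in range(size)
            )
    return bytes(out[:length])


msg = b"At any given moment, we could lose three hard drives."
shards = encode(msg)  # 17 data shards + 3 parity shards
for lost in (2, 9, 19):  # fail any three of the 20 "drives"
    del shards[lost]
assert decode(shards, k=17, length=len(msg)) == msg
```

Losing a fourth shard leaves only 16 points, which is not enough to determine the polynomials, so `decode` refuses rather than guessing.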

“At any given moment, we could lose three hard drives and everything’s still fine,” Thomas said. “Then the question is, can you replace and rebuild the three drives you lost before losing a fourth?”
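
Thomas’s question can be put into rough numbers with a toy model. Assume each drive fails independently, treat the 1.8% AFR as a one-year failure probability with a constant failure rate, and assume a hypothetical seven-day window to replace and rebuild drives. The chance that a 17+3 stripe loses data is then the binomial probability that four or more of its 20 drives fail within the same window. Real failures are correlated (shared batches, power events, firmware bugs), which is one reason providers’ actual durability math is more involved.

```python
from math import comb


def window_failure_prob(afr: float, days: float) -> float:
    """Chance one drive fails within `days`, assuming a constant failure rate
    whose one-year failure probability equals `afr`."""
    return 1 - (1 - afr) ** (days / 365)


def stripe_loss_prob(n: int, parity: int, p: float) -> float:
    """Binomial tail: chance that more than `parity` of `n` independent drives fail."""
    return sum(comb(n, f) * p**f * (1 - p) ** (n - f) for f in range(parity + 1, n + 1))


# Toy inputs: 1.8% AFR and a hypothetical 7-day replace-and-rebuild window.
p = window_failure_prob(afr=0.018, days=7)
loss = stripe_loss_prob(n=20, parity=3, p=p)
print(f"chance a 17+3 stripe loses data in one window: {loss:.1e}")
```

Under these assumptions the result comes out below one in ten billion per stripe per window, and it grows quickly as the rebuild window lengthens, which is exactly why fast rebuilds matter.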

Facebook also uses Reed-Solomon erasure coding across its cold storage racks, while Microsoft Azure Storage has its own Local Reconstruction Codes that purportedly reduce the number of erasure coding fragments needed for reconstruction.

Armed with hard drive failure rate statistics, intimate knowledge of a given cloud provider’s erasure coding architecture, and a general idea of how long it takes to rebuild after a failure, we could calculate data durability with some fairly complex math. Cloud services (other than Backblaze) generally don’t share that information, though, so we’re left to rely on the durability percentages they publish. Durability is often specified in terms of nines, up to 16 of them in the case of Microsoft’s geo-redundant Azure Storage plan. The more common 11-nine figure translates to 99.999999999% durability. At that level, debates about one extra nine or even fewer nines shift from practical to academic.
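
Nines are easier to reason about when turned into expected losses. Read as the chance that a given object survives a year (the way Amazon phrases its S3 durability figure), 11 nines leaves each object roughly a 1-in-100-billion chance of being lost annually. The helper names below are illustrative, and the model assumes losses are independent.

```python
def durability_percent(nines: int) -> str:
    """Write a durability of `nines` nines (nines >= 2) as a percentage string."""
    return "99." + "9" * (nines - 2) + "%"


def expected_objects_lost_per_year(objects: int, nines: int) -> float:
    """Expected annual losses if each object is lost with probability 10**-nines per year."""
    return objects * 10.0 ** -nines


losses = expected_objects_lost_per_year(10_000_000, 11)
print(durability_percent(11))  # 99.999999999%
print(f"about one object every {1 / losses:,.0f} years")
```

For 10 million objects this works out to about one lost object every 10,000 years, the same example Amazon uses to explain 11 nines.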

“The probability that any cloud service loses your data is profoundly low,” continued Backblaze’s Thomas. “It’s much more likely that there’s an issue with your credit card and you’re not checking your email and you don’t get the account notices.”

In fact, there’s a greater chance that the Earth will be hit by a hazardous asteroid in the next century than of random data loss from a major cloud provider. The point is that providers know their hardware will fail eventually, and they design with failure in mind. At any serious cloud storage vendor, the durability of your data is all but guaranteed.

Note, however, that durability calculations do not take humans into account. Introducing a bug through an errant line of code could wipe the whole system out. An armed conflict might result in the loss of a datacenter. Or a string of natural disasters could theoretically affect multiple datacenter locations that are keeping your files geographically distributed. Those are all factors outside the scope of a durability calculation, which is why IT managers need to think about them.

“One of our biggest customers is a genomics company that keeps a copy of its data on-site, a copy in [Amazon] S3 on the east coast, and a copy in [Backblaze] B2 on the west coast,” said Backblaze’s Thomas. “In doing that, they’ve achieved both vendor and geographic diversity.”

Regardless of the durability specs you’re being quoted, maintaining three copies of important data is a best practice. While most organizations fall short of that ideal, CIOs and home users alike should consider all the variables affecting durability.

Will your files be there when you need them?

So rest easy knowing that your data is safe in the cloud. But can you be just as sure you’ll be able to reach it whenever you need it? Not with the same certainty, though modern datacenters come close to continuous uptime. “Availability” quantifies the share of time your data is accessible. It covers only the provider’s side, not the other variables affecting your connection to the cloud storage provider, such as issues with your ISP, failed networking hardware, or power outages. Even so, availability figures are much lower than durability figures: guarantees of 99%, 99.9%, or 99.99% are common, leaving room for occasional datacenter maintenance.

The availability level you need depends on what you’re doing with your data and how much you’re willing to pay. At 99%, you’d accept up to 3.65 days of downtime per year, and busy ecommerce sites won’t tolerate that many lost sales. Adding a nine (99.9%) cuts unavailability to about 8.76 hours per year, while 99.99% limits downtime to about 53 minutes over the same interval.
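
These downtime budgets follow directly from the percentages: allowed downtime is (1 - availability) multiplied by the length of the period. A small helper, assuming a 365-day year; the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; ignores leap years


def allowed_downtime_hours(availability_pct: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Hours of downtime permitted over `period_hours` at the given availability."""
    return (1 - availability_pct / 100) * period_hours


for pct in (99.0, 99.9, 99.99):
    hours = allowed_downtime_hours(pct)
    print(f"{pct}%: {hours / 24:.2f} days = {hours:.2f} hours = {hours * 60:.1f} minutes")
```

This gives 3.65 days for 99%, about 8.76 hours for 99.9%, and about 52.6 minutes for 99.99%. Passing a shorter `period_hours` (for example, one month) shows the budget a monthly SLA is measured against.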

Cloud service providers publish and guarantee availability in their service level agreements (SLAs). If a vendor falls short of this commitment, it may refund a percentage of its fees as service credits applied to the next month’s bill. These credits are usually tiered, growing as service slips further. For example, Amazon’s S3 Standard storage class is designed for 99.99% availability, but its SLA commits to 99.9%: it grants a 10% service credit if your monthly uptime falls below 99.9% but stays at or above 99.0%. The credit rises to 25% if availability falls below 99.0% but stays at or above 95.0% in a given month, and to 100% if it falls below 95.0%.
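
A tiered credit schedule maps naturally onto a lookup table. The sketch below follows the S3 Standard tiers described above, treating each lower bound as inclusive as AWS’s SLA wording does; the function names and the $1,000 bill are hypothetical, and the current SLA should be checked before relying on these numbers.

```python
# (lower bound of monthly uptime %, credit %) tiers, checked from best to worst.
S3_STANDARD_TIERS = [(99.9, 0), (99.0, 10), (95.0, 25)]


def service_credit_pct(monthly_uptime_pct: float) -> int:
    """Credit percentage owed for a month with the given uptime."""
    for lower_bound, credit in S3_STANDARD_TIERS:
        if monthly_uptime_pct >= lower_bound:
            return credit
    return 100  # below 95.0%


def service_credit_amount(monthly_bill: float, monthly_uptime_pct: float) -> float:
    """Credit applied to the next bill, in the same currency as `monthly_bill`."""
    return monthly_bill * service_credit_pct(monthly_uptime_pct) / 100


print(service_credit_amount(1_000.00, 99.5))  # 100.0
```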

Of course, you’re not in this for credits. You want a provider that satisfies the availability set forth in its SLA. The agreement simply speaks to the vendor’s confidence in its ability to deliver.

Does it have to be all or nothing?

It isn’t always necessary to shop for the best possible availability or failproof durability. Cloud storage has evolved to the point where you can choose among storage classes, pick the availability level each workload needs, or scale back durability for noncritical data that can live with fewer than 11 nines.

Google Cloud Storage offers Standard, Nearline, and Coldline storage classes. Standard is designed for frequently accessed (or “hot”) data and for data stored only for brief periods of time. Google lets you drill down further and choose a single-region, dual-region, or multi-region location to fine-tune performance and geo-redundancy. Unsurprisingly, storing data in separate locations lets Google raise its SLA from 99.0% to 99.95% availability, with typical monthly availability greater than 99.99%. Nearline Storage suits data you plan to read or modify once a month or less, such as backups, archives, and long-tail multimedia content. Coldline Storage, meanwhile, is meant for infrequently accessed data kept for legal or regulatory reasons or for disaster recovery. Coldline costs a lot less, but it offers slightly lower availability, is subject to a 90-day minimum storage duration, and incurs charges for data access.
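
The minimum storage duration matters for billing: Google charges an early-deletion fee that amounts to billing a deleted, replaced, or moved object as though it had been stored for the full minimum. A sketch of that rule; the function names and price are hypothetical, and treating a month as 30 days is a simplification.

```python
def billable_days(days_stored: float, minimum_days: int = 90) -> float:
    """Days an object is billed for; deleting early still bills the class minimum."""
    return max(days_stored, minimum_days)


def storage_cost(gb: float, days_stored: float, price_per_gb_month: float,
                 minimum_days: int = 90) -> float:
    """Approximate storage cost, treating a month as 30 days (a simplification)."""
    return gb * price_per_gb_month * billable_days(days_stored, minimum_days) / 30


# Hypothetical price: an object deleted after 10 days is still billed for 90.
print(storage_cost(gb=100, days_stored=10, price_per_gb_month=0.01))
```

Passing `minimum_days=30` models Nearline, whose minimum storage duration is shorter.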

Amazon’s Simple Storage Service (S3) has its own range of storage classes that cater to different use cases. At one end of the spectrum, S3 Standard offers high durability, availability, and performance for frequently accessed data. At the other, S3 One Zone-Infrequent Access (IA) sheds geographic redundancy and offers a significant discount on storage fees, but imposes higher prices on data retrievals.

Amazon, Google, and Microsoft offer ample flexibility for configuring bespoke cloud storage solutions, which can be both good and … challenging. As you build a service from storage, requests, management, transfers, acceleration, and cross-region replication, it’s easy to get lost in their equally sophisticated pricing tables. A provider like Backblaze is simpler and more affordable than any of the big three, so long as you’re looking for a pure cloud storage play with 11 nines of durability and 99.9% availability. If you move into more compute-intensive workloads or need millisecond-class recovery points for disaster recovery, it makes sense to explore services beyond object storage that may be better suited to edge computing, high-end AI, or machine learning.

With a solid grasp of durability and availability, it becomes easier to trust the services that cloud storage providers offer. Shedding the equipment, maintenance, power, and staffing costs associated with on-premises storage could be key to saving your organization money while you protect its most valuable files.