This article is part of the Technology Insight series, made possible with funding from Intel

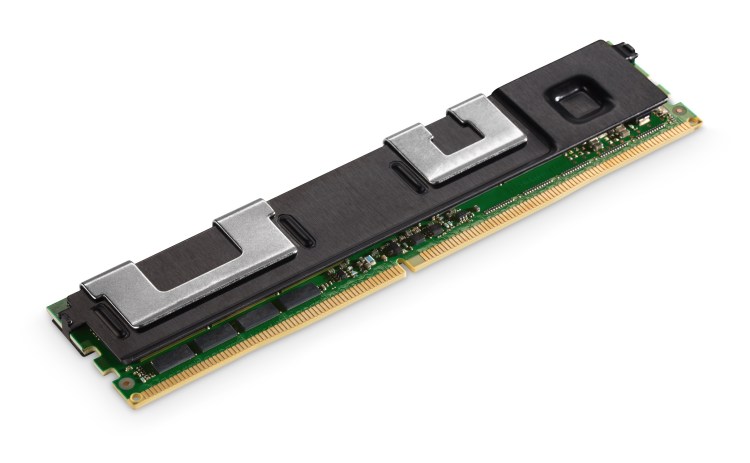

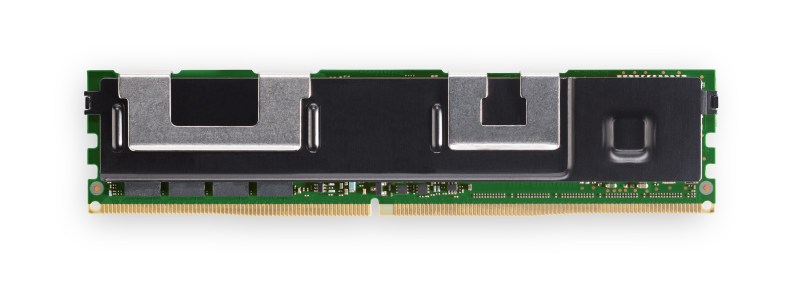

Companies looking to capitalize on the performance promise of Intel’s Optane persistent memory have been trying to vet the performance claims and calculate TCO for years now.

VentureBeat started covering Optane (or, more accurately, the 3D XPoint media underlying Optane) four years ago. At that time, Intel boasted that the new memory technology was 10 times denser than conventional DRAM, 1,000 times faster than the fastest NAND SSD, and had 1,000 times the endurance of NAND. However, at the same IDF presentation where it was announced, Optane as demonstrated was only 7.23 times faster than the fastest available NAND SSD chips.

A year and a half later, following the announcement of the first Optane-based SSDs, tech analyst Jack Gold noted, “Because it is significantly less expensive than DRAM and can have 15X – 20X the memory capacity per die (e.g., 8GB vs. 128GB) while achieving speeds at least 10X that of NAND, it is an ideal ‘intermediary’ memory element where adding more relatively fast memory can significantly increase overall system performance at a lower cost than stacking it with large amounts of DRAM.”

Two and a half years later, that remains Optane’s promise: near-DRAM performance for less cost per gigabyte than DRAM while facilitating a huge jump in system memory capacities. To grossly oversimplify, lots of DDR4 means that large workloads can stay in RAM and not incur the disk swaps to distant storage that can slaughter latency and throughput. So, yes, Optane PMMs are slower than DRAM, but they’re faster than NAND, and eliminating disk swapping for active workload data should yield significantly improved application performance.

Which takes us back to our article opening: Intel launched Optane DC persistent memory in early April 2019, and it’s a reasonable bet that buyers like Baidu had access to pre-production parts before the launch date. Are there real-world numbers that prove the promise?

The moving cost target

Total cost of ownership (TCO) discussions depend in part on, well, costs. When someone says that an Optane PMM-with-DRAM configuration (because you need both, not just Optane) yields better TCO than an all-DRAM configuration, your first impulse might be to check pricing on both and compare. There’s no reason to do that here on these pages today, because costs change — and that’s the point.

“DRAM prices are extremely volatile,” said Michael Yang, analyst and director of research at Informa Tech (formerly IHS Markit). “It can double or halve in a year. Two years ago, DRAM would have been three times more than Optane. Today, there’s barely any cost premium, only 20% or 30% more — so cheap you can almost argue there’s parity. There’s not enough difference for people to rearchitect their server farm, for sure. That’s why Intel is moving the argument away from cost.”

Another factor in this shifting narrative may be the imminent arrival of DDR5 next year, which Yang says may double DIMM capacities. DDR5 is also expected to scale to double the data rates of DDR4.

If Yang sounds as if he’s arguing against Optane, don’t jump to conclusions. He’s an admirer of Optane technology in general and believes it holds much potential. However, he would like to see Optane’s promise being delivered and observe how Optane scales going forward. He’s also quick to point out that Optane PMM isn’t for everybody.

“We are certainly seeing data become more valuable, and real-time analytics are on the rise,” he said. “Optane PMM will be the right solution for some, but not all, workloads by providing the right mix of performance and cost.”

To perhaps extrapolate from Yang’s sentiments, bear in mind that many, if not most, server configurations never max out their memory potential. These systems go to their graves with open RAM slots and will never need Optane PMM. Similarly, keep a wary eye on sweeping marketing messages. Yes, Optane PMM may be amazing for the elephantine workloads that could be generated by, say, smart city systems. With cameras running on every corner, and everything from automotive traffic control to retail advertising using those HD video feeds in real time, the need for Optane PMM in such applications may be critical.

The rebuttal

Perhaps that’s an unfair question. After all, do we need to bring up that “640K ought to be enough for anyone”? Just because the immediate need for Optane may be limited doesn’t mean it will stay that way. And how much easier (and cheaper) will it be to build those smart city systems if appropriate hardware and software solutions are readily available?

Also, modern-day Intel is almost phobic about making statements that can’t be amply defended with a ream of citations. The company doesn’t know what its customers paid for their RAM or prior platforms, so it can’t make statements about case studies showing “X%” improvement unless the customer hands Intel that information, and many enterprises, especially cloud companies, are loath to disclose their internal platform details.

And while we’re talking cost of ownership, note that there’s more to TCO than per-gigabyte costs. Consider a server running multiple virtual machines. If those VMs are limited by the amount of available system memory — and it’s common for application owners to seize more than enough memory, just in case — then it logically follows that increasing system memory will allow for more VMs per server. Potentially, fewer physical servers will be needed to run the same number of VMs, thus providing lower hardware costs, lower energy consumption, lower administration and maintenance, and on and on.
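The consolidation argument above can be sketched as simple arithmetic. This is a minimal illustration, not a sizing tool: it assumes memory is the only limiting resource, and the fleet size, per-VM memory, and per-server capacities are hypothetical values chosen for the example, not figures from Intel or any customer.

```python
import math


def servers_needed(vm_count: int, mem_per_vm_gb: int, mem_per_server_gb: int) -> int:
    """Return the number of servers required when memory is the only constraint."""
    vms_per_server = mem_per_server_gb // mem_per_vm_gb
    if vms_per_server == 0:
        raise ValueError("a single VM does not fit in one server's memory")
    return math.ceil(vm_count / vms_per_server)


# Hypothetical fleet: 400 VMs, each reserved 32 GB of memory.
VM_COUNT = 400
MEM_PER_VM_GB = 32

# Hypothetical per-server capacities: a DRAM-only box vs. a larger DRAM + PMM box.
dram_only_servers = servers_needed(VM_COUNT, MEM_PER_VM_GB, 768)
larger_memory_servers = servers_needed(VM_COUNT, MEM_PER_VM_GB, 3072)

print(f"DRAM-only servers:      {dram_only_servers}")
print(f"Larger-memory servers:  {larger_memory_servers}")
```

In practice, CPU, storage, and network limits would cap the number of VMs per server well before a pure memory calculation does, so a result like this is best read as an upper bound on possible consolidation.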

We spoke with Kristie Mann, Intel’s senior director of product management for persistent memory, and she shared with us some of the few definite statistics now emerging from early Optane PMM adopters.

- European sanitary products company Geberit reported that its SAP HANA in-memory database system went from a 54-minute data load time at startup to just 13 minutes, likely as a result of the data persistency inherent in Optane PMM across power cycling. Geberit moved to deploying 7.5TB of total memory (24 x 64GB DRAM and 24 x 256GB Optane PMM), which maintained 99.7% of the prior configuration’s query performance while lowering TCO via alleviating the need for new hardware.

- Game server and app hosting provider Nitrado deployed Optane PMM after realizing that the company’s CPUs were being underutilized because memory use per virtual machine was so high. Adding more memory allowed CPUs to be used more efficiently, thus allowing for node consolidation and a total efficiency gain of, according to Nitrado, 100-150%.

- Although she wasn’t authorized to disclose the user or specific stats, Mann mentioned one ecommerce retailer that had been forced into batch processing the workloads driving customer preferences and shopping recommendations. These batches had to be run at night because the loads had grown so large that they couldn’t be run in real time during the day. Then, the company started running out of time to run the batches even at night. Switching to a maximum capacity Optane PMM configuration improved performance so much that the company could resume real-time processing during the day.
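As a quick sanity check on the Geberit figures reported above, the arithmetic can be worked through in a few lines. The module counts, module sizes, and load times come from the article; the helper name and the use of 1,024 GB per TB are assumptions made for this sketch.

```python
GB_PER_TB = 1024  # assumption: binary terabytes, which matches the 7.5TB figure


def total_memory_tb(modules):
    """Sum (count, size_in_gb) pairs and return the total in terabytes."""
    return sum(count * size_gb for count, size_gb in modules) / GB_PER_TB


# Reported configuration: 24 x 64GB DRAM and 24 x 256GB Optane PMM.
geberit_modules = [(24, 64), (24, 256)]
total_tb = total_memory_tb(geberit_modules)

# Reported SAP HANA data load times at startup, in minutes.
load_before_min = 54
load_after_min = 13
speedup = load_before_min / load_after_min
time_saved_fraction = 1 - load_after_min / load_before_min

print(f"Total memory: {total_tb} TB")
print(f"Load-time speedup: {speedup:.2f}x")
print(f"Load time reduced by {time_saved_fraction:.0%}")
```

The totals line up with the 7.5TB stated in the case study, and the load-time change works out to a speedup of a little over four times.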

These stories show that results are beginning to emerge. Your mileage may vary, and in fact, your business may not need Optane at all today.

Still, non-volatile media suitable for system memory and/or ultra-fast storage was going to reach the market eventually, and Intel appears to have both a viable technology and the muscle to push its adoption. As with the arrival of most new technologies, though, adoption will likely come with a lot of resistance and the need for market education.

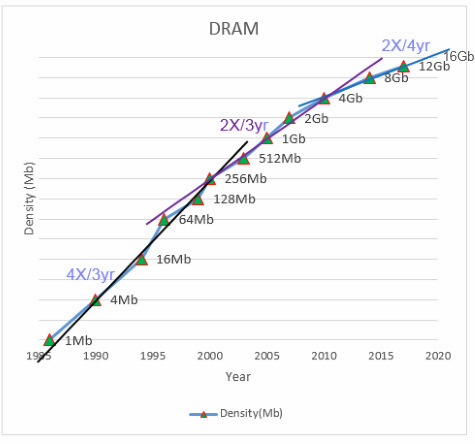

“This is a product unlike anything we’ve had in the past,” said Mann. “You’ve seen multiple tiers of storage for years. We need to do the same thing with memory because of two things. One, the rate of data generation is increasing very quickly, to the point where businesses can’t quickly and adequately process that data and turn it into business insights. And two, the scaling of DRAM capacity is slowing. We’ve seen the stretching out of the Moore’s Law timeframe for CPU architecture, and now we’re seeing the same thing with DRAM. Add it up, and memory can’t keep pace with rising data workloads over the next five years. That’s why we need a two-tier memory system.”

Above: Source: Flash Memory Summit 2015

With tiered storage, users need to right-size their SSD capacity, often using it as a sort of cache for “hotter data” more likely to be sought than the data kept on “cold,” archival disk media. According to Intel, the same principle applies with memory. Again, this isn’t an either/or case of DRAM versus Optane PMM. The two work together, with DRAM serving as the faster cache to Optane’s slower but far more capacious mass memory.

Expect more for your org soon

The obvious question for businesses follows: How much DRAM do I actually need? Surprisingly, relatively few people know, and the tools to find the answer aren’t within easy reach.

“The answer to this question varies by data set and workload, so it’s very difficult to provide one-size-fits-all guidance,” said Mann. “We’re working on building some new tools from our existing internal tools. We can check cache miss rates, latency, bandwidth — all these real-time things we can analyze while a workload is running. But our tools are made for engineers, not the average customer. So, over the next couple quarters, we’ll come out with more advanced tools customers can use to help understand their workload characteristics and effectively balance their memory investments.”

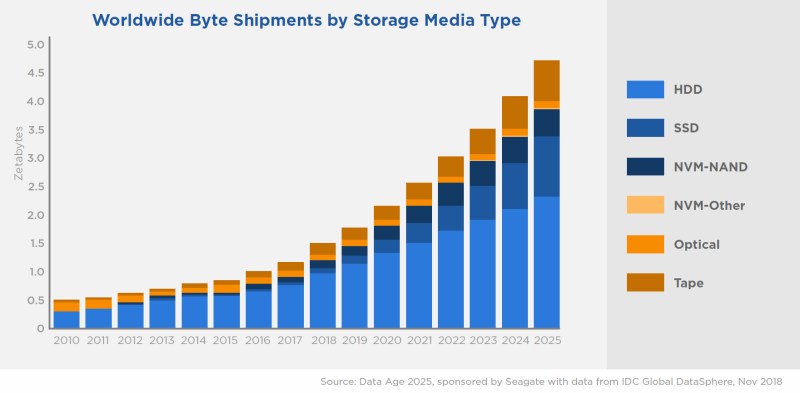

Above: Source: IDC

Recognizing flash as a strong alternative to hard disk technology, the founders of SanDisk filed a patent on flash-based SSDs in 1989 and shipped the first such drive in 1991. Arguably, the first enterprise SSD arrived in 2008, and you can see from the IDC/Seagate numbers in the above graphic how long it took for SSDs to make a serious dent in the world’s storage totals. System memory may now face a similar adoption trend.

This isn’t to say that the game has already gone to Intel. For instance, Samsung’s Z-SSD has strong potential, and the 3D XPoint media underlying Optane is being licensed by Micron (perhaps under the name QuantX) to other storage manufacturers.

Intel’s thesis, though, seems sound. One way or another, it’s time we had a leap in capability. Now, we just need the results made public to prove that the promise of Optane is real and that we have a clear path forward for a world drowning in data.