During E3, we previewed the replay system as a live broadcast system. If you watch that program, you’ll notice that instead of just seeing the player’s camera view (the desktop of the gamer playing Fortnite), we can now go into the game and film it from any angle: drone cameras, follow cameras, all sorts of crazy stuff. If something cool happens in the game, a bunch of budding filmmakers now go into the replay system and cut little edits out of what happened. There’s some pretty awesome stuff out there. You even see pop videos made inside Fortnite.

All of that is in there on the customer side, too. What we’re super excited about is that, as new games come out and we see more games built on Unreal Engine 4, the esports capabilities are off the charts. Developers could do ESPN-like broadcasting live from within their games. We’re excited about what that could mean.

Pair that with all the virtual production and performance capture work we’ve done, like the Siren demo we showed at GDC and several other things with our friends at Ninja Theory, and beam it into the world of a replay, where you can have commentators and actors and crazy stuff happening in front of the backdrop of the game. The sky’s the limit. We’re excited to see what our customers can do with this emerging crossover entertainment. Is it gaming? Is it television? Is it streaming? It’s only a matter of time before we see streamers suiting up in mocap and streaming as virtual characters within the worlds of Unreal Engine games. It’s exciting times ahead.

Above: Shiny Star Wars uniforms in Unreal Engine 4.20.

GamesBeat: Is anybody shipping something soon with the technology we saw in Siren?

Libreri: Almost everybody who has a digital human character in their game is taking advantage of the work we did for Siren. All the skin shading work we did for Siren shipped with 4.20. There’s a bunch of stuff that I probably can’t mention yet. As always, the facial animation and facial modeling experts keep making their work better and better. I think you’ll see some pretty exciting stuff over the next 12 months.

GamesBeat: Is that the timeline for when you think this will be getting into the hands of gamers?

Libreri: In terms of gamers, yes. The nice thing is that we ship Unreal Engine with the source code, so we’re not the only people writing shaders. People have done their own work with the code. There’s some skin work that, as I say, I can’t disclose, but I’ve seen some pretty amazing stuff out there.

GamesBeat: Siren certainly looks hard to do, but is it achievable on modern console hardware? Or does it need something like a next-generation console?

Libreri: Obviously a next-generation console would be better. Right now, to get our demos running at 60 frames per second or at VR frame rates, we end up needing a pretty beefy graphics card to render all the hair. But if you have a character that doesn’t need individual splines of hair, you can run it on a modern PlayStation or Xbox. When the next generation arrives, whatever form that takes, the uncanny valley is going to be crossed in video games.

In the end, it won’t be about the technical expertise needed to make these things; that technique will become ubiquitous over time. It will have to be about telling an awesome story, one where people want to watch that face get involved and do interesting things.

GamesBeat: People have said that what seems artificial is the level of interaction that’s possible, not just how it looks.

Libreri: The AI is the hardest thing you can think about. Traditional AI in games is built on very state-driven systems. As we get better at analyzing human emotion and animation in 3D, machine learning will start to help drive some pretty impressive next-generation AI. That’s something we’ll see over the next five years. We’ve seen a lot of AI in self-driving cars and computer vision, but I think it’s going to absolutely revolutionize animation. As for the rendering work, I’m not saying that we’re there yet, but we’re taking big steps.

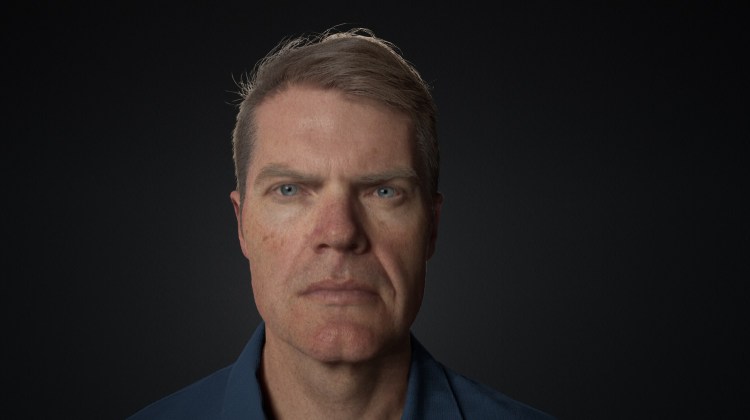

Above: Unreal Engine 4.20 character.

GamesBeat: Tim was talking about things like blockchain enabling the Metaverse, but is there anything integrated into the engine now that you think advances the whole cause of interconnected virtual worlds?

Libreri: We’re still learning. Having a game with so many players has led us to think more about how to scale the engine beyond 100 players. I wouldn’t say there’s anything there right now that fully addresses all the problems, but we’re thinking about how to scale up to thousands or millions of players. It’s definitely on Mr. Sweeney’s mind right now.

GamesBeat: Is that something you would translate directly into what you want the engine to be? Here’s the big vision Tim has talked about, and this is what we want the engine to enable?

Libreri: Absolutely, absolutely.

GamesBeat: As far as your platform needs, is this able to work across all the platforms you need?

Libreri: Oh, yes. We have great relationships with all the platform providers. I think things are going to be very exciting over the next few years. We’re in an amazing time, when computer graphics and computing technology are evolving so fast that things that once seemed impossible are within reach.

GamesBeat: Have you signaled to anyone what an Unreal Engine 5 would look like?

Libreri: We’re thinking about what the future brings and whether we need to make major architectural changes. But that’s a conversation where Tim needs to be present.