With so much news about Fortnite lately, it’s easy to forget that Epic Games makes the Unreal Engine. But today, the game engine behind some of the most realistic games around is taking center stage as Epic launches Unreal Engine 4.20.

The update for the entertainment creation tool will help developers create more realistic characters and immersive environments, and use them across games, film, TV, virtual reality, mixed reality, augmented reality, and enterprise applications. The new version combines real-time rendering advancements with improved creativity and productivity tools.

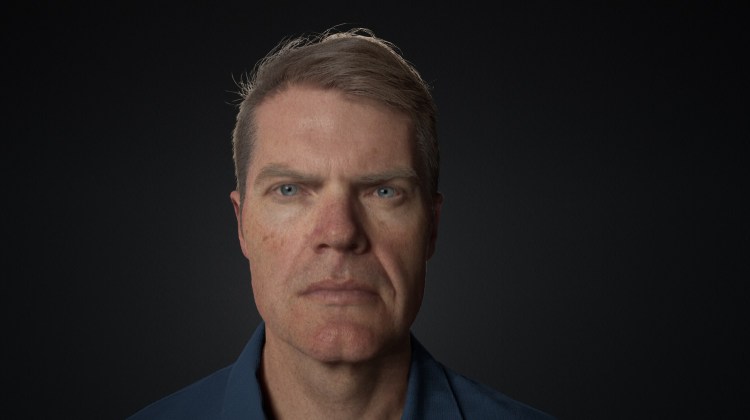

The update makes it easier to ship blockbuster games across all platforms. To build Fortnite, Epic had to create hundreds of optimizations, particularly for iOS, Android, and the Nintendo Switch, and those advances are now being rolled into Unreal Engine 4.20 for all developers. I spoke with Kim Libreri, chief technology officer at Epic Games, about the update and how the development of Fortnite helped the company figure out what it needed to provide in the engine.

Libreri said that in non-game enterprise applications, new features will drive photorealistic real-time visualization across automotive design, architecture, manufacturing, and more. The new features also include a level-of-detail (LOD) system, which determines how much visual detail a player sees on a given platform, from mobile devices to high-end PCs.

Well over 100 mobile optimizations developed first for Fortnite will come to all 4.20 users, making it easier to ship the same game across all platforms. There are also tools for “digital humans,” which get the lighting and reflections just right, so it’s hard to tell a digital human from a real one.

All users now have access to the same tools used on Epic’s “Siren” and “Digital Andy Serkis” demos shown at this year’s Game Developers Conference. The engine also supports mixed reality, virtual reality, and augmented reality. It can be used to create content for things like the Magic Leap One and Google ARCore 1.2.

Libreri filled me in on these details, but we also talked about the competition with Unity and what it means that Epic can use what it learns from creating its own games to try to outdo its rival.

Here’s an edited transcript of our interview.

Above: Kim Libreri is CTO of Epic Games.

GamesBeat: Tell me what you’re up to.

Kim Libreri: We’re talking about 4.20, Unreal Engine 4.20. Most of the themes for this release follow up on what we said we would do at GDC. A lot of the things we showed and demonstrated and talked about at GDC this year are in this release. A large part of that is the mobile improvements, thanks to shipping Fortnite on iOS and soon Android. We’ve done a ton of work to optimize the engine so you can make one game and scale it across all those different platforms. All of our customers will find excellent performance upgrades on mobile devices.

Part of that is the proxy LOD system we put in the engine, which generates LODs automatically in a smart way. It’s similar to what Simplygon does, but it’s our own little spin on how to do that. That’s shipping. The other cool thing is that Niagara is now shipping in a usable state. We’re getting lots of interesting comments about how customers are finding it and how much it’s going to help their games look from a visual effects point of view. Niagara is the new particle system. We used to have a system called Cascade in Unreal Engine, and Niagara will ultimately replace Cascade.

You saw a couple of digital human demos we did at GDC. We’re shipping not just the code and the functionality, but also some demo content, thanks to our collaboration with Mike Seymour. He was kind enough to let us give out his digital likeness so people can better learn how to set up a photorealistic human. From a code perspective, we have better skin shaders that now allow double specular hits for skin. Also, light can transmit through the backs of ears and the sides of the nose, so you get more realistic subsurface scattering. We also have screen space indirect illumination for skin, which allows light to bounce off cheekbones and into eye sockets. It matches what happens in reality.

Above: A digital human.

We showed a bunch of virtual production capabilities in the engine as well: cinematography inside a real-time world. The tool we showed, which was used in a bunch of our GDC demos, is shipping with 4.20, along with example content. You can drive a virtual camera with an iPad.

We’ve added a new cinematic depth of field. It was built for the Star Wars “Reflections” demo. At this point we have the best depth of field in the industry. Not only is it accurate to what real cameras can do, it also deals gracefully with out-of-focus objects in the foreground or background of a shot, which has traditionally been difficult for real-time engines. It’s also faster than the old circle depth of field we had before. It’s going to make games and cinematic content look way more realistic.

On the lighting side, the area lights that we showed at GDC are shipping. They let you light characters and objects in a way that better approximates lighting a live environment with real, physical lights. You get accurate-looking reflections and accurate-looking diffuse illumination. It’s a much closer match when you’re lighting with a soft box or diffusion in front of a light, the way you would light a fashion shoot or a movie. You can replicate all that stuff in the engine now.

On the enterprise side, we now support video input and output in the engine. If you’re a professional broadcaster doing a green-screen television presentation, like what you see in weather broadcasts and sports events, you can now pump live video from a professional-grade digital video camera straight into the engine, pull a chroma key, and then put that over the top of a mixed reality scene. Outlets like the Weather Channel are doing some cool stuff now. We see loads of broadcasters using Unreal Engine for live broadcasting, and we just made that easier with support for AJA video I/O cards.

Again on the enterprise side, we have this thing called nDisplay, which allows you to use multiple PCs to render a scene across multiple display outputs. Say you’ve got one of those massive tiled LCD walls, with a super high-resolution image you need to feed to it. You can now have multiple PCs running Unreal Engine, all synchronized together, to drive these tiled video walls.

Shotgun is a very popular production management tool that a lot of studios in film, television, and games use to manage their artists’ work: what assets they have to make, when they’re due, and what the dependencies are. We’ve integrated it into Unreal Engine, so it’s very easy to track assets in and out of Unreal as your production progresses.

That’s the main story in terms of what we’re doing. There are lots of smaller details in the release, but that’s the big stuff.

Above: An environment made in Unreal.

GamesBeat: When was the last big update?

Libreri: That was prior to GDC, 4.19.

GamesBeat: What do you think is the most important thing you’re delivering for developers here?

Libreri: Right now, this concept that you can make one game and ship it on all platforms, from high-end PCs and consoles down to Switch and mobile phones, is a very powerful message for the future of gaming. You don’t have to be as restrictive as you normally would be. Quite often a lot of mobile games aren’t even ports. They’re new versions of a particular game, built to deal with the differences in hardware.

We’re in a great place now with mobile hardware being pretty powerful, and the fact that Unreal has this cool rendering abstraction capability that allows us to ship the same game content, game logic, on multiple platforms. Everyone can take advantage of what we’ve done on Fortnite. We’re seeing it. We showed ARK running on Switch at GDC. It’s exciting times.

GamesBeat: Do you think Fortnite has proven out the engine’s capability, then, as far as cross-platform goes?

Libreri: Yes, especially considering it’s a live game. We put out updates every week. When we do a new season pass, there’s a lot of stuff that goes up. Being able to do that once and push it to all platforms is a game-changer. We can be live. As you saw from the event in the game last weekend, when we launched the rocket worldwide at the same time—if we had to maintain multiple versions of the game, it just wouldn’t be possible to be as agile as we are. We just can’t wait to see what our customers in the gaming space will be able to do with that capability.

GamesBeat: Fortnite really is just one game in that respect?

Libreri: Yes, one game. Through clever management of LODs and content, we’re able to push it out to all platforms. That proxy LOD system is a big part of making sure the environments scale down and work on the mobile hardware that we support.

GamesBeat: The theory that Unity had, not making games and not competing with its customers, do you think there’s a question mark there given what’s happened with Fortnite?

Libreri: Obviously we’re different companies that have different philosophies. But making stuff, making games, and having the engine team have access to great game teams – not just ourselves, but our customers as well – has a lot of advantages. We all speak the same language. We’re running a triple-A game on a phone. It’s pretty amazing that we can do that. I think it benefits all of our customers, and the players as well. People want to be able to play their game wherever they go, on whatever platform.

Above: Unreal Engine 4.20

GamesBeat: If you’re looking at what Fortnite benefited from as far as all the big advances that Unreal has made, what would you say Fortnite helped you do, or took advantage of in the engine?

Libreri: One thing is obviously that we had Paragon come before Fortnite. We put a lot of learning into getting the engine to be efficient for a complicated game like Paragon on console. Fortnite was immediately able to take advantage of that. The cartoony style as well, that rendering style in Fortnite adapts to all platforms pretty well. We put in better color management, better geometry management. We’ve put so many things in the engine over the years that have made Fortnite scale better than it would have done otherwise.

GamesBeat: What does Fortnite have that Paragon didn’t? Were there big advantages there?

Libreri: The big thing with Fortnite, which drove some features that a lot of our customers love, is that it’s a dynamic world. You can build. Pre-computed lighting, the normal way people do photorealistic lighting in games, isn’t possible. We added a bunch of distance field rendering techniques for Fortnite, and they’ve benefited many games. Also, the battle royale mode of Fortnite is a 100-player game, so we really had to go through our networking code and make it super efficient to sustain such large matches.

GamesBeat: I notice that Fortnite doesn’t run on everything, though. It doesn’t run on an iPhone 6. Is it just the computing power of that device?

Libreri: Yeah, you’re getting down to compute levels that can’t sustain the game well. We want to make sure that everyone gets a great experience when they play the game. We could ship on it, but it wouldn’t be a great experience at all.

GamesBeat: What are you telling developers about where the road map is going from here?

Libreri: Honestly, this is the way we’ve worked for a few years now. Every three or four months we put out a new version of the engine. Even with new features, we demo them, so people can go to GitHub and pick things up as they’re emerging, usually before the dot release is out. We’re telling developers that we push this thing out as quickly as we can, and they have access to new features almost as quickly as we do.

Above: Mobile gaming art in Unreal

GamesBeat: Outside of Epic, what sorts of games are taking advantage of 4.19 and 4.20?

Libreri: Many developers working on games that have yet to release bring the latest version into their production content. We’re seeing lots of awesome usage of the cinematic tools, not just for cutscenes, but for a lot of adventure-type games. We’ve seen games that were running at 30 frames per second go to 60 because of the optimizations in the engine. It’s a pretty open community. Unless you have a game you’ve been shipping for quite a while, people are pretty keen to take the latest update.

GamesBeat: How is Unreal doing on the VR front?

Libreri: There are still a lot of awesome developers doing UE4-based VR. In fact, I’m just about to go visit my friends at Fable Studios this afternoon to check on their latest stuff. We’re seeing a lot of activity in the location-based VR space. I think The Void has shown the way forward with Star Wars: Secrets of the Empire, the title they put together with Lucasfilm. We hear a lot of buzz, a lot of people taking it very seriously. Our friends at Ninja Theory just did a project with The Void called Nicodemus, which is a sort of scary horror experience. I don’t know if I’m up to the job. I’d probably have a heart attack. I’m sure that’s on 4.19.

GamesBeat: Outside of games, you were talking about movies and TV production. Is that an area where activity is picking up?

Libreri: In the enterprise space we’re seeing a growing number of use cases for the engine across the board. Computer graphics obviously changed the way people design and visualize objects and products, and all the companies in that space are starting to use Unreal. We have a great package that helps you translate scenes you’ve built in CAD packages or traditional DCC packages into Unreal Engine quickly. It’s called Datasmith. We’ve seen massive adoption across the board.

It’s not just things like architecture or car design. We’re seeing drug discovery companies using the engine to visualize how molecules fit together. We’re seeing a lot of AI companies training their AI using Unreal Engine. The sky is the limit. Anywhere that computer graphics or the knowledge of a physically plausible world is useful, we’re seeing massive adoption. It’s pretty exciting. Every day some new thing comes up.

Above: Level of detail in Unreal Engine 4.20.

GamesBeat: The success of Fortnite itself, does that come back and feed into the engine in some way? I’m sure you have a remarkable budget for hiring engineers.

Libreri: I don’t know about that, but we’re always looking for awesome talent.

Having something that engages at the level Fortnite does allows us to work on a few more out-there, crazy ideas than we maybe normally would. One thing we were very committed to was an awesome way to spectate what happens in games. That’s why we have the replay system in the engine.

During E3, we previewed the replay system as a live broadcast system. If you watch that program, you’ll notice that instead of just seeing the player’s camera view, the desktop of the gamer playing Fortnite, we can now go into the game and film it from any angle, with drone cameras, follow cameras, all sorts of crazy stuff. If something cool happens in the game, a bunch of budding filmmakers now go into the replay system and make little edits out of what happened. There’s some pretty awesome stuff out there. You see pop videos made inside Fortnite.

All of that is available on the customer side too. What we’re super excited about is that, as new games come out and we see more games built on Unreal Engine 4, the esports capabilities are off the charts. Developers could do ESPN-like live broadcasting from within their games. We’re excited about what that could mean.

If you pair that with all the virtual production and performance capture stuff we’ve done—we did that Siren demo at GDC and several other things with our friends at Ninja Theory. If you take that and beam it into the world of a replay, where you can have commentators and actors and crazy stuff happening in front of the backdrop of what happens in the game, the sky’s the limit. We’re excited to see what our customers can do in terms of this emerging crossover entertainment. Is it gaming? Is it television? Is it streaming? It’s only a matter of time before we start to see streamers mocapping up and streaming as virtual characters within the worlds of Unreal Engine games. It’s exciting times ahead.

Above: Shiny Star Wars uniforms in Unreal Engine 4.20.

GamesBeat: Is anybody shipping something soon with the technology we saw in Siren?

Libreri: Almost everybody that has a digital human character in their game is taking advantage of Siren. All the skin shading work we did that was taken into Siren shipped with 4.20. There’s a bunch of stuff that I probably can’t mention yet. As always, the facial animation and facial modeling experts are making their work better and better. I think you’ll see some pretty exciting stuff over the next 12 months.

GamesBeat: Is that the timeline for when you think this will be getting into the hands of gamers?

Libreri: In terms of gamers, yes. Although there are some pretty—the nice thing is we ship Unreal Engine with the source code. We’re not the only people writing shaders. People have done work themselves with the code. There’s some skin stuff that, as I say, I can’t disclose, but I’ve seen some pretty amazing stuff out there.

GamesBeat: Siren certainly looks hard to do, but is that on the level of modern console technology? Or does it need something like a next-generation console?

Libreri: Obviously it would be better on next-generation hardware. Right now, when we do the demos, to get them to run at 60 frames per second or at VR frame rates, we end up needing a pretty beefy graphics card to render all the hair. But if you have a character that doesn’t need actual individual splines of hair, you can run that on a modern PlayStation or Xbox. When we start to see a next generation, or whatever that is, the uncanny valley is going to be crossed in video games.

It’s going to end up not being about your technical expertise in making these things. That expertise will become ubiquitous over time. It’s got to be about telling an awesome story where people will want to watch that face get involved and do interesting things.

GamesBeat: People have said that the thing that seems artificial is the level of interaction that’s possible, not just how it looks.

Libreri: That’s the hardest thing you can think about, the AI. Traditional AI in games is built on very state-driven systems. As we start to go down the path of analyzing human emotions and animations in 3D, there are machine learning capabilities that will start to help drive pretty impressive next-generation AI. That’s something we’ll see over the next five years. We’ve seen a lot of AI in self-driving cars and computer vision, but I think it’s going to absolutely revolutionize animation. As for the rendering work, I’m not saying that we’re there, but we’re taking big steps.

Above: Unreal Engine 4.20 character.

GamesBeat: Tim Sweeney was talking about things like blockchain enabling the Metaverse, but is there anything like that integrated into the engine now that you think advances the whole cause of having interconnected virtual worlds?

Libreri: We’re still learning. Having a game that has so many players, it’s led us to think more about how we want to scale the engine beyond 100 players. I wouldn’t say that there’s anything there right now that totally addresses all the problems, but we’re thinking about how we scale up to thousands or millions of players. It’s definitely on Mr. Sweeney’s mind right now.

GamesBeat: Is that something you would translate directly into what you want the product, the engine, to be? Here’s the big vision Tim has talked about, and this is what we want the engine to enable?

Libreri: Absolutely, absolutely.

GamesBeat: As far as your platform needs, is this able to work across all the platforms you need?

Libreri: Oh, yes. We have great relationships with all the platform providers. I think things are going to be very exciting over the next few years. We’re in this amazing time where computer graphics and computer technology are evolving at a rate where things that seemed impossible are right within reach.

GamesBeat: Have you signaled to anyone what an Unreal Engine 5 would look like?

Libreri: We’re thinking about what the future brings and whether we need to do major architectural changes. But that’s a conversation where Tim needs to be present.