ARM executive Rene Haas is responsible for the shipment of billions of chips. As the executive vice president and president of IP Products Group at ARM, he interacts with the customers that license ARM’s product designs and use them in chips that are made in very large numbers.

Thanks to ARM’s domination of smartphones, the company and its customers have shipped 120 billion chips to date. But there’s a big opportunity in the expanding internet of things, or making everyday objects smart and connected. As those devices become interoperable and voice-controlled, they need more computing power. And ARM is making sure that its processors are used to provide it.

Over time, the goal of ARM’s new owner, SoftBank CEO Masayoshi Son, is to create the artificial intelligence needed for the Singularity, or the day when collective machine intelligence is greater than collective human intelligence. ARM’s job is to push AI to the edge of the network, where the company’s small, power-efficient chips are a natural choice. But it is also pushing into servers, where Intel has a newfound vulnerability, and into Windows 10 computers, which now work with ARM chips.

I caught up with Haas at CES 2018, the big tech trade show in Las Vegas last week.

Here’s an edited transcript of our interview.

Above: ARM-based processors are powering the dashboards in cars.

VentureBeat: So are you busy trying to make the Singularity happen?

Rene Haas: I essentially run what was the classic ARM, pre-SoftBank. All the IP business, the product development, licensing, sales, marketing, for all the products. We’re based in Cambridge. I moved over to London about a year ago. I spend most of my time there.

VB: What does the acquisition mean for what you do day to day?

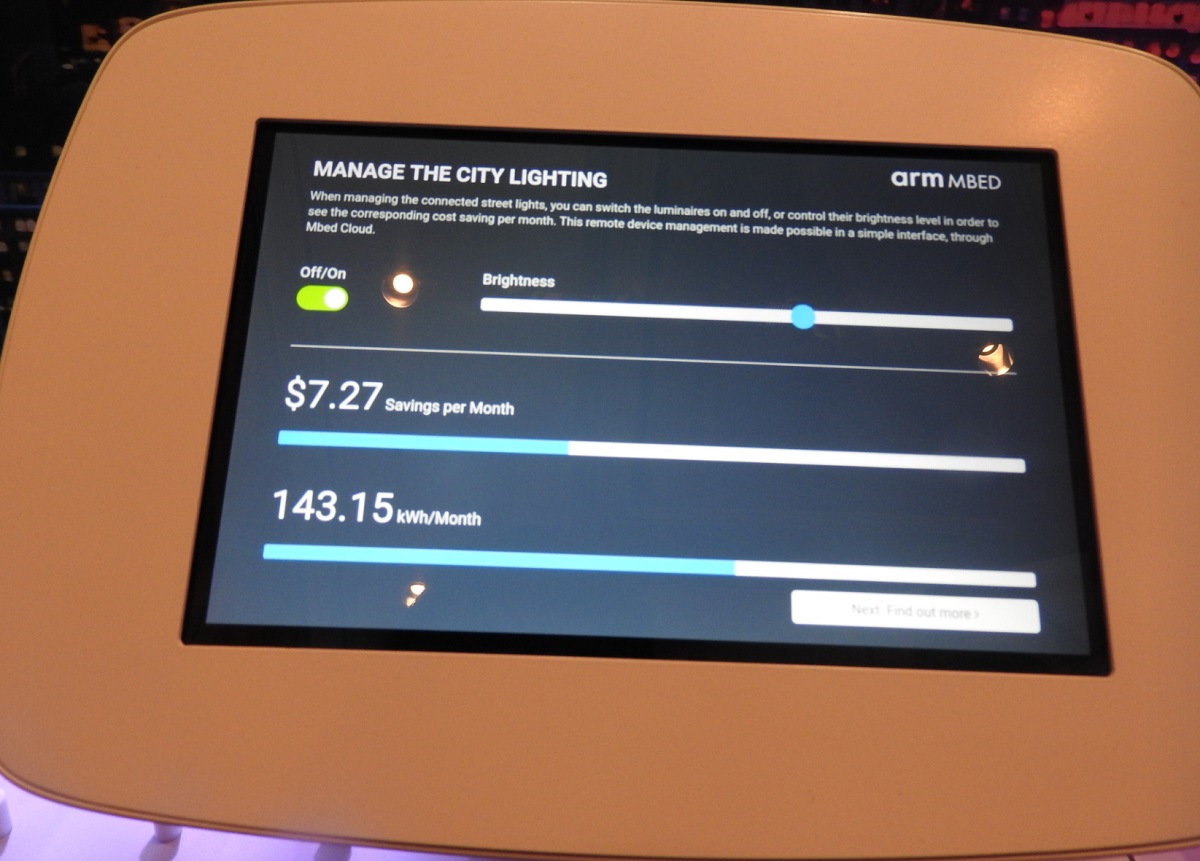

Haas: Without making the role sound larger than it is, it’s essentially the CEO of the IP group, which was what ARM was prior to SoftBank. Post the acquisition, we accelerated some efforts around another business, around connected devices, specifically software as a service. You’ve heard of Mbed Cloud, right? Mbed Cloud and the strategy around managing connected devices and building a business around downloading software updates, security, and so on.

We created a business unit around that, ISG. It stands for IOT Services Group. It’s still a nascent organization, but the decision was made to create two independent operating groups, because they attack different markets and different customers. At the executive level Simon is still the CEO, so the enterprise functions — enterprise marketing, legal, finance — are all cross-functional. But now this group I run is pretty autonomous in terms of everything relative to owning the top line P&L, owning revenue.

VB: What’s your to-do list for 2018?

Haas: Now that we’re part of SoftBank, some things have changed. Some things are the same. We’re still a publicly facing company in the sense that we’re part of the SoftBank number, but we don’t have to report numbers quarterly with the same level of introspection as we did in the past. As a result of that, we have some more freedom to invest a bit more aggressively in new markets. That’s a big thing for us in 2018, accelerating our investments in areas like machine learning, AI, doubling down on areas like security. Automotive is a big push for us. A lot of the markets we’ve been involved in, the big difference for 2018 is the acceleration of those investments.

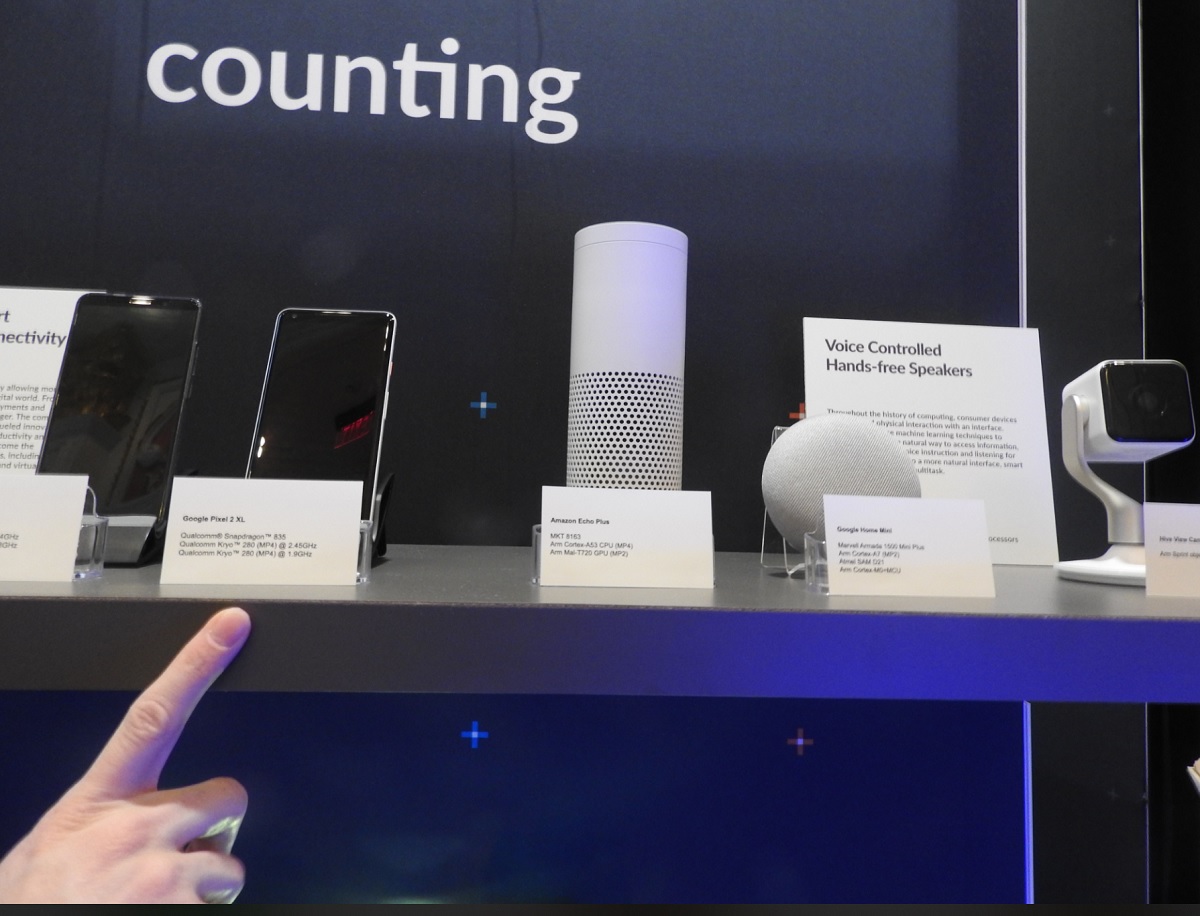

VB: I went to Samsung’s press event. Their interest is in pushing SmartThings as the standard for IOT. On a high level it makes sense for one big company to have one way to connect to connected devices and bring everything else in. I wonder how easily some of this is going to happen. Is every big company going to have their version of this? Are they going to be interoperable? Are these devices really going to connect and work together?

Haas: This year, all the announcements of products that are Alexa ready or Google Assistant ready — a year ago nobody was even thinking about that. I think what will happen is you’ll have standards around the input methodology, whether it’s voice or whatever. Underneath the hood people will try to put their special sauce on it. A Samsung-only interface or an LG-only interface for consumer devices, that’s hard. I think it needs to be standard around some level of API, something that’s ubiquitous with another part of the platform.

From our standpoint it’s a huge opportunity for us, because we also see — this is a big 2018 initiative. The rush of compute moving to the edge and the need to do more and more local processing, less dependent on the cloud to do every bit of the processing piece. That’s just going to go off and accelerate, particularly as devices learn, in the context of the machine learning piece. The profile for what the learning algorithm looks like for your own devices, the performance and benefits you get as that’s more personalized and done locally, that will be pretty huge. We’re seeing an uptick there.

Above: Will the internet of things be interoperable?

VB: How far along in the process do you feel like everybody is now, the standards process? Does it feel like things are going to be interoperable sometime soon?

Haas: I default to waiting and seeing who the winner ultimately will be. But devices that are Google Assistant ready, Alexa ready, I see those more as default standards, as opposed to a set of companies all getting together and trying to decide, “This is the actual standard.” That’s hard. It’s like the smart TVs you bought in the early phase that had their own web browsers and interfaces. It’s clumsy in terms of interoperability, clumsy for the end user. The stuff Google and Amazon are doing is going to accelerate it. We’re in a good spot, because that’s the technology that underpins us.

VB: Blockchain is part of some of this, but does that come on your radar in any ways, on the silicon level?

Haas: Just from the standpoint of the processing that’s required for it, what’s required in terms of security. But in terms of the interface and what’s going on inside, not so much.

VB: I talked quite a long time with Phil Rosedale, who created Second Life, and now he has this company High Fidelity. They can create a bunch of things for an avatar to wear, sell those, and then log that transaction in a blockchain. Then it becomes interoperable with other virtual worlds. If you buy something in High Fidelity maybe you could use it in Second Life. Your avatar travels with you and all the stuff you bought. It seems like IOT transactions might work in a similar way.

Haas: Potentially. But blockchains are static. With the real-time issue when it comes to payments, you need some kind of other type of methodology. In the area of crypto and anything going on with security of payments, that’s a very central area for us. It takes a lot of processing. That’s something that requires some level of standardization. Different countries have different laws and bars in terms of threats and the like.

Because China has so much control — talking about mobile, all the carriers in China are state-run. Getting an illegal SIM card is very hard. Fraud is prevented by your identity, your mobile number. As a result, mobile payments are ubiquitous in China. In North America we’re way behind. But a lot of it has to do with the way payments are set up, the relationships between banks and so on.

It’ll be interesting to see what happens in China. The government has such tight controls on monetary issues. I lived in China for a couple of years, so I lived through this. Taking money out of the country is really hard. But now, with Tencent and Alibaba as really large merchants, the government can’t see where all the money is going, particularly if it travels outside of China. They’re already getting their nose into trying to take partial ownership of these companies.

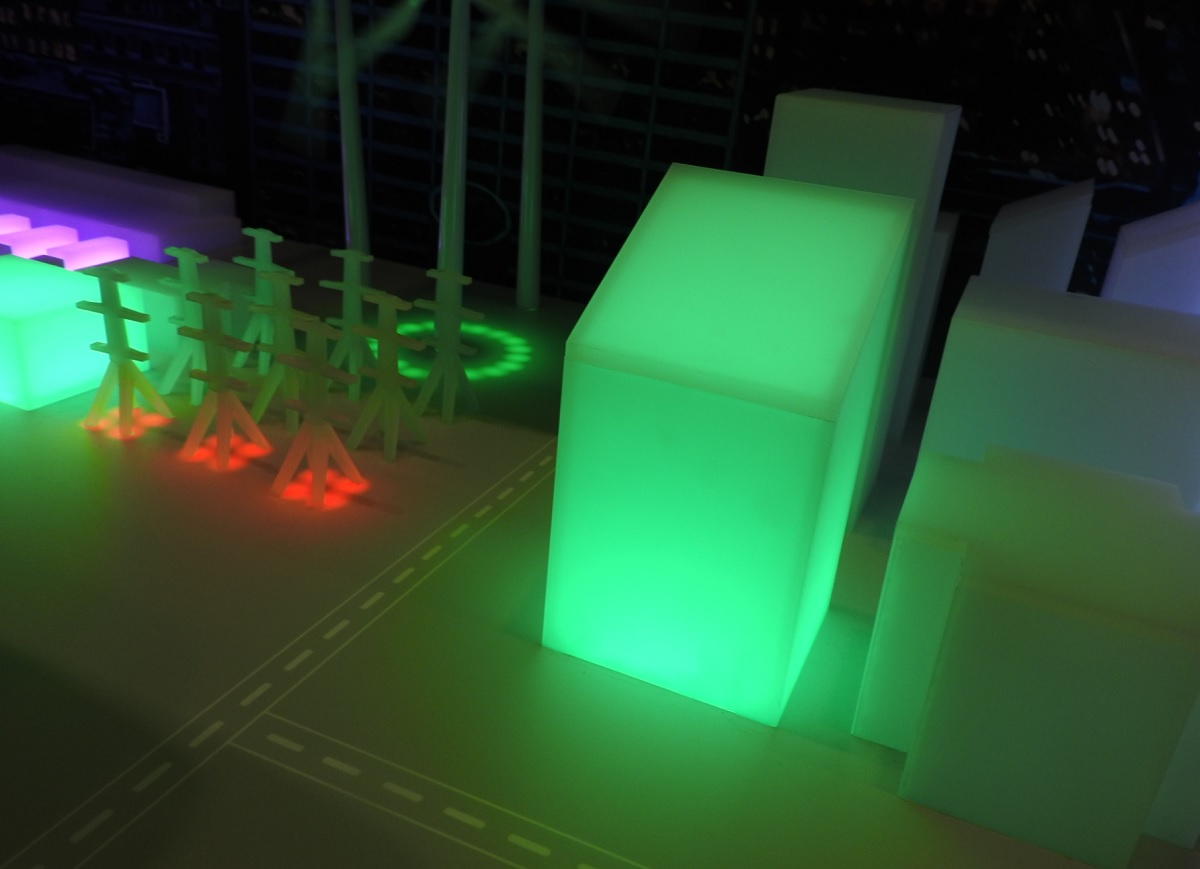

Above: Smart cities need a lot of processors.

VB: How you architect a blockchain depends on what kind of government is overlooking you.

Haas: Exactly.

VB: When you have conversations about blockchain within ARM, what do you have to think about?

Haas: Primarily we’re focused on edge compute. When we think about blockchain and the things required around security and local processing, it’s all about power and area. Machine learning is a big spot there for us, because you’ll need to do some level of neural network processing to handle the data. Whether a GPU is the right thing — if you’re putting it in an edge device, power is a big issue. Solving those issues in the cloud, one way would be GPUs, but for us, it’s more about the edge. We’re looking at all kinds of different architectural methodologies there. Nothing we’re talking about publicly yet.

VB: There’s all the talk about the CPU flaw. Is there any easy way to describe it and reassure people?

Haas: We had a lot of conversations on that. It’s interesting that it’s called a “CPU flaw,” because it’s actually — researchers have found a hole in modern programming techniques to potentially subvert some code. It impacts more high-end CPUs than low-end CPUs because it’s all about speculative processing and cache control. It does require a massive amount of coordination across the ecosystem. It’s not just an Intel CPU problem or an architecture problem. It’s a modern compute problem. Chip vendors, OEMs, software vendors, all of us need to work together.

You have to look at the workloads. It’s very workload dependent. Again, the issue is around this methodology of speculative caching. It’s basically how much predictability you want to do. Some of the patches slow that speculative process down or eliminate it, which in layman’s terms — let’s say you’re driving between Phoenix and Los Angeles and the speed limit is 60, but you know you can get away with 80 because there’s no radar checks. But if you find out there’s a speed camera every three miles, you just go 60 the whole time. If you figure out the cameras are 100 miles apart, you go back to 80 most of the time and slow down for the cameras.

It just ends up being, with the patches, how much of these speculative caching workloads get compromised. That’s a function of software and hardware together. I don’t know if you’ve seen any of the benchmarks, but it’s very workload dependent, very much a function of how aggressive the patches get as far as slowing down this methodology.

Above: More devices at the edge of the network are getting ARM processors.

On our side, looking mainly at mobile phones, it can vary by use case. But it’s not going to be noticed by customers on a day-to-day basis. What people do on their phones isn’t really impacted by high level speculative caching. Your phone is not constantly doing a bunch of massive local processing, trying to do a lot of different things in parallel. It’s running and closing apps. It’s fetching data. It’s not actually processing spreadsheets or doing recompiles and such where real work would be impacted.

We’re not impacted greatly either from a standpoint of this speculative caching, out-of-order execution methodology. It’s not in all of our processors. By volume of product shipped, a low percentage of the products we ship even have this in their architecture. Around five percent. It’s a subset of our Cortex-A chips. Otherwise, by default, they’re not impacted. We have a website with a table online showing a couple of Cortex-A and a couple of Cortex-R chips that are impacted. The biggest one of the Cortex-A chips we sell, the highest volume, is probably Cortex-A53, which is not impacted.

This was an interesting one. The silver lining I saw on this particular event — this wasn’t a situation like some of the earlier flaws, where the NHS was hacked in the U.K. with ransomware. This was a preemptive strike. Researchers found a potential liability. The industry got together and worked through a bunch of methodologies and ideas to mitigate it. Nothing actually happened. There were meetings taking place between a number of companies. From the outside looking in you’d say, “Wait, these guys are all together at the table? They’re working collaboratively to solve a broad problem? That’s great!” That actually happened.

The biggest learning in this is it won’t be the first time. The biggest benefit we got is we know our counterparts at all the other companies. We know all the folks involved. This is not going away. This is the world we live in. It requires a hardware and software ecosystem that works together. It’s not just one company.

We work very closely with the OS vendors anyway, but this forges not only a deeper sense of responsibility, but at fairly senior levels — again, the silver lining in all this, people get it. They know it’s important that we don’t just have these conversations as a one-off. When we develop our next-generation processors we work closely with the software folks in terms of features and so on. Sometimes there’s very good input and sometimes it’s a different level of interaction. This will help. This puts at the top of the mind, “Here are the things we’re thinking about. What are the tradeoffs?”

We’re addressing Spectre especially. We’re looking at it in future products as far as hardware and a software discipline. People look at this as if it’s fundamentally a hardware issue, but it’s very much hardware and software, because it’s using how the software works with the CPU on this timing. Both things have to be addressed in the future.

VB: I saw a Reuters story saying that if the patches start slowing down Intel processors, maybe that’s another reason to think about ARM server processors.

Haas: We’d like to think there are a lot of other good reasons to think about ARM server processors. The end of last year there was a lot of good momentum around ARM-based servers, with the stuff Qualcomm and others were doing. We feel like it’s a long, continued march. I also think what’s going to happen in the server space is the move of distributed processing from the cloud to different areas of the network to the edge. We’ll keep at it. The flaw is maybe good marketing, but we have a lot of other good momentum going on.

Above: HP’s ARM and Qualcomm-based Windows 10 always-connected computer.

VB: I saw some of the ARM-based Windows PCs here. Always on is nice, always connected.

Haas: One of the nice things, too, about having — I think we spoke back in the Windows RT days about this. A lot of the issues around Windows RT have been addressed now with Windows 10. One, fundamentally, the fact that you can run it in the enterprise. You don’t have an app compatibility issue. Underneath the hood it doesn’t look as negative to the user as far as running two different OSs.

Also, five years later, the world has changed. Virtually all the modern applications today are written for this. One of the issues we faced back in 2012 was, does iTunes run on Windows RT? At the time it didn’t. Now there’s Spotify and Pandora. Even the whole concept of how people get access to music is web-based, which by default means, who cares what the CPU is? That’s a micro-example. The app ecosystem has moved so fast in five years that I think, for the end user who buys one of these machines, there should be no compromise not only in terms of the experience, but also in the apps they’re used to running.

I haven’t seen the machines out there on this go-round. I know there are a lot more people talking. Lenovo just announced theirs this week. I don’t think Microsoft would have done this just for the sake of saying, “We need another hardware platform out there.” ARM’s never talked about MHz and those types of things. We’ve always been talking about how we can advance the user experience. I think they saw this opportunity, with ARM and Qualcomm working together, to say, “Oh, we can advance the user experience around connectivity and battery life.”

To their credit, they never wavered on the fundamental value proposition of mobility and power. People want the battery life and mobility of their laptops to be the same as their tablets or their phones. That’s a fundamental user experience thing. That’s there with these machines.

VB: Are you guys getting toward the Singularity that Masayoshi Son’s been talking about?

Haas: It’s a big focus. You’ve seen him speak. He talks about 300-year plans. He has a long time horizon. He’s definitely a big thinker. No question about that.

There’s a video of SoftBank World, where he had a number of the companies on stage: Boston Dynamics, ARM, and so on. One of the benefits of being part of the SoftBank ecosystem is just the interaction we get with the portfolio companies involved with SoftBank. It isn’t a situation where we’re encouraged to work more closely with companies in the portfolio, but on the flip side, this whole notion of singularity, of a broader ecosystem working together, where the human brain and the artificial brain become one, or the artificial brain passes the human brain: in realizing that vision, there are a lot of benefits to being under the SoftBank umbrella. It’s an exciting time.

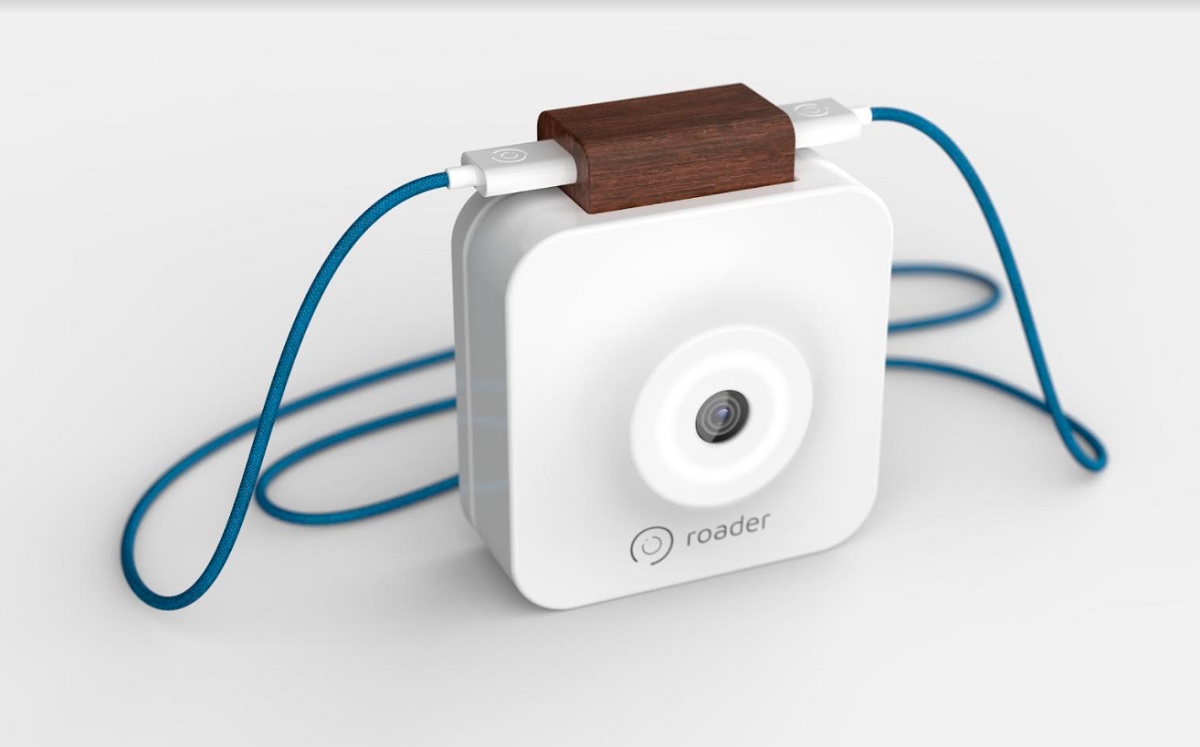

Above: Roader has built the Time Machine Camera.

VB: There’s a company here called Roader, a little Dutch startup. They have a camera you hang around your neck. It’s constantly recording. The battery can last seven hours, because one thing they’re not doing is saving anything. They’re keeping that video in a 10-second buffer, and so you press this button and it says, “Save that 10 seconds, plus 10 more afterward.” They call it a time machine. You can save something you were going to miss because you didn’t get your smartphone out in time, like your kid taking his first steps.
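The buffering scheme described here (hold only the last few seconds, then save that window plus a few seconds more when a button is pressed) can be sketched in a few lines. This is only an illustration of the general idea, not Roader's implementation; the class name `PreRollBuffer`, its parameters, and the use of plain integers as stand-in frames are all assumptions.

```python
from collections import deque


class PreRollBuffer:
    """Hypothetical sketch: keep the last `pre` frames; on trigger, save them plus `post` more."""

    def __init__(self, pre, post):
        self.pre = deque(maxlen=pre)  # rolling window; the oldest frame is dropped automatically
        self.post = post              # number of frames to keep capturing after the trigger
        self.clip = None              # clip currently being assembled, if any
        self.remaining = 0            # frames still to append to the open clip
        self.saved = []               # finished clips

    def push(self, frame):
        """Feed one new frame from the camera."""
        if self.clip is not None:
            self.clip.append(frame)
            self.remaining -= 1
            if self.remaining == 0:
                self.saved.append(self.clip)
                self.clip = None
        self.pre.append(frame)

    def trigger(self):
        """Button press: snapshot the rolling window and start collecting the follow-on frames."""
        if self.clip is not None:
            return  # a clip is already being recorded
        snapshot = list(self.pre)
        if self.post == 0:
            self.saved.append(snapshot)
        else:
            self.clip = snapshot
            self.remaining = self.post


buf = PreRollBuffer(pre=3, post=2)
for frame in range(5):
    buf.push(frame)      # nothing is stored beyond the 3-frame window
buf.trigger()            # "save that, plus a bit more afterward"
for frame in range(5, 8):
    buf.push(frame)
print(buf.saved)
```

Because the rolling window has a fixed size, memory use stays constant no matter how long the camera runs, which is the property that makes the long battery life plausible.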

Haas: It’s a huge area. One of the areas we’re seeing huge demand for is around intelligent computer vision. You’re recording, but there’s some level of intelligence that knows whether what you’re recording is relevant. In the security space, if you’re recording a parking lot for an hour and nothing in it moves, you don’t keep that data. Once you start seeing something strange, then you start recording. The interesting part comes from a learning standpoint. If you have one of these things and it has some intelligence, it starts to learn behaviorally what things you find interesting. That’s a massive opportunity.
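The parking-lot example amounts to gating storage on change: discard frames while nothing moves, keep them once something does. A minimal sketch of that gate, assuming frames are flat lists of pixel brightness values and using simple frame differencing (a stand-in technique, not something ARM describes here), might look like this. The function names and the threshold are hypothetical.

```python
def mean_abs_diff(a, b):
    """Average per-pixel brightness change between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def frames_to_keep(frames, threshold=10.0):
    """Return indices of frames that differ noticeably from the frame before them."""
    kept = []
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[i - 1]) > threshold:
            kept.append(i)
    return kept


still = [50, 50, 50, 50]      # empty parking lot
moved = [50, 50, 200, 200]    # something entered the right half of the frame
print(frames_to_keep([still, still, moved, moved, still]))
```

Only the frames where the scene changes are kept; the long static stretches never reach storage.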

We’re doing a lot of work in the IP area around intelligent computer vision. It’s probably the single biggest growth area for us in the IP space. People are trying to build these smart cameras, “smart” being defined by making intelligent decisions about when to record and when not to record, and then the learning piece that says, “Okay, I now know that white Audi that I thought was mysterious is actually always there at 7:17 in the morning, so maybe I don’t need to make decisions around it.” There are limitless opportunities there.
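The "white Audi at 7:17" idea, where a camera stops treating a recurring event as suspicious, can be illustrated with a simple counter keyed on what was seen and when. This is a toy sketch under stated assumptions: the class `FamiliarityFilter`, the 15-minute time slots, and the three-sighting cutoff are all invented for illustration, and a real system would rely on a learned model rather than a lookup table.

```python
from collections import Counter


class FamiliarityFilter:
    """Hypothetical sketch: stop flagging an object once it has been seen often in the same time slot."""

    def __init__(self, min_sightings=3, slot_minutes=15):
        self.min_sightings = min_sightings
        self.slot_minutes = slot_minutes
        self.counts = Counter()

    def _key(self, label, hour, minute):
        # Bucket the time of day so 7:15 and 7:17 count as the same recurring event.
        slot = (hour * 60 + minute) // self.slot_minutes
        return (label, slot)

    def should_flag(self, label, hour, minute):
        """Record a sighting and return True while it is still unfamiliar."""
        key = self._key(label, hour, minute)
        familiar = self.counts[key] >= self.min_sightings
        self.counts[key] += 1
        return not familiar


f = FamiliarityFilter(min_sightings=3)
print([f.should_flag("white Audi", 7, 17) for _ in range(4)])
print(f.should_flag("white Audi", 14, 0))  # same car, unusual time
```

The same object at an unusual time still gets flagged, which matches the intuition that familiarity depends on context and not only on the object itself.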

VB: You think about something like a police shooting. There’s no reason not to record that. These cameras they’re using now are still the GoPro kind.

Haas: If you solve the issue of intelligent recording and storage and battery life, then these cameras are always on. They’re already always on in surveillance situations. We just have to solve the battery life. That goes back to this edge compute thing I talked about earlier. The whole notion of edge compute is a massive growth space now. That drives a lot of issues around power.

Above: The ARM-based Amiko Respiro has sensors that measure how much the inhaler is used by patients, and that info is sent to the physician.

VB: AI in the cloud is not as good as AI in the device. You can supplement what’s in the device, but if you make the device smarter, you save a lot of traffic.

Haas: It’s a supplement, yeah. You have to reduce the traffic. Again, localized machine learning is always going to be better. But there’s a tradeoff as far as how much you can do, at least today. It’s only going to get better.

VB: 5G comes along and relieves some of the traffic problems.

Haas: That has to happen, because on the flip side, as more and more — with 100 trillion devices online, you’ve got a petadata problem. You can’t take the data through the current pipes. 5G helps to some extent.