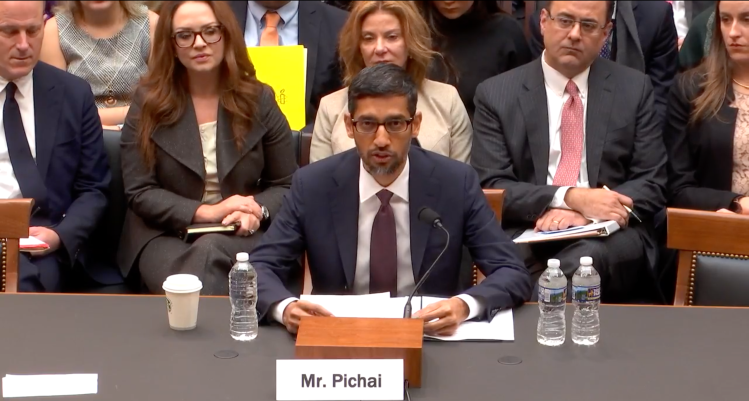

I like Google. A lot. And I happen to like Google’s CEO, Sundar Pichai, as well, so writing this post doesn’t spark joy.

Before I begin, let me say that no company is perfect. No CEO is either, and that’s okay. In the age of outrage, it is worth repeating as many times as necessary. Not every misstep is a scandal or the end of the world. But since Pichai just took a pass on fixing a pretty big mess that falls under his purview, and it isn’t trivial, let’s talk about it.

Some context: I’m generally a fan

Unlike some of my peers, I don’t think Google is as nefarious or deliberately deceitful as, say, Facebook. I think Google tries, and mostly succeeds, at balancing the needs of its platform and the needs of its users, to arrive at a mutually-beneficial balance of data collection and utility. It’s just that Google misses the mark sometimes, but as frustrating as it is for a former product manager like me to witness, it isn’t exactly the sort of thing that puts Google in the dog house for me.

But with great power comes great responsibility

Google, Apple, Amazon, Microsoft, Cisco, Tesla, Intel, Samsung, and their peers all exist to create value for their stakeholders, and maximize profits. They aren’t there to eradicate malaria, solve income inequality, or combat political corruption. They are businesses, and their primary objective is to maximize the growth and profitability of their products, services, and platforms. Everything else is secondary. How they choose to do so is a matter of vision and culture.

Some of these companies are led and staffed by people who are as driven by being a force for good in this world as they are by hitting their financial numbers. Others are led and staffed by people who don’t necessarily care about being a force for good in this world, but understand that not lying to their customers, not defrauding them, and ultimately not harming them, is just a sound business policy. I don’t know which Google is, and it doesn’t really matter. What matters isn’t what a company aims to do but what it does.

YouTube’s content problem and … wrong answers

And so here we are. YouTube appears to be 1) causing harm by having become a breeding ground for toxic and dangerous content, and 2) profiting from advertising revenue boosted by algorithms that point users to that content in an effort to keep them on the platform as long as possible. YouTube has its own CEO (Susan Wojcicki, since 2014), but the platform is Google’s responsibility. Google is Pichai’s responsibility. So when he was asked what he intends to do about YouTube’s content problem and answered that YouTube is too big to fix completely, he gave the wrong answer.

What he really means is, “I don’t know how to fix this problem, so let’s just agree that it can’t completely be fixed. Not my fault. The company is just too big.”

Small failures of leadership lead to big ones

If you start with excuses as to why you can’t do something you know you have to do, you’ve already lost the plot. If you’re head of a company, you can’t simply abdicate responsibility.

Leadership means you have to try, and then keep trying, and then try some more until you find a way to make it work.

If, as a CEO, you really believe the company is too big for you to fix, maybe you’ve found the limits of your own ability to lead it.

Zeroing-in on the problem

Per CNBC’s reporting:

YouTube, which is owned by Google, has come under fire in the last couple of years, as content ranging from deniers of the Sandy Hook massacre to supremacist content has continued to show up on the site despite the company’s attempts to filter it out.

During a CNN interview that aired Sunday, Pichai was asked whether there will ever be enough humans to filter through and remove such content.

“We’ve gotten much better at using a combination of machines and humans,” Pichai said. “So it’s one of those things, let’s say we’re getting it right 99% of the time, you’ll still be able to find examples. Our goal is to take that to a very, very small percentage well below 1%. […] Anything when you run at that scale, you have to think about percentages.”

First of all, YouTube isn’t getting it right 99% of the time. Not even close. Second, that sort of linear, symmetrical thinking doesn’t really work with regard to an asymmetrical problem. It doesn’t make sense to measure the problem in terms of percentages, let alone to craft a solution based on those metrics.

YouTube’s problems require clusters of new solutions, not the optimization of existing half-measures. Pichai doesn’t appear to be thinking proactively about this, let alone broadly enough. What he is telegraphing with his answer is that incremental progress along percentages is all he hopes to realistically accomplish. “Anything when you run at that scale, you have to think about percentages.” No. This is not a percentage problem.

Unfortunately, it doesn’t stop there. Again, per CNBC:

Pichai added that he’s “confident we can make significant progress” and that “enforcement will get better.” He also said Google wishes it had addressed the problems sooner, since many of the videos have been running for years. “There’s an acknowledgement we didn’t get it right,” he said. “We’re aware of some of the pitfalls here and have changed the priorities.”

Huh? “Confident that we can make significant progress. Enforcement will get better. We’ve changed the priorities.” What? Look, we all know the difference between lip service and an actual plan. Does anyone hear a plan here? I don’t.

The trouble with blaming predecessors…

Perhaps the worst part of Pichai’s answer is the moment he blames Google for not having addressed the problem sooner. Not my fault! I didn’t cause this. Someone else should have taken care of it before I got here.

Look, good leaders don’t talk like this.

More importantly, let’s not forget that Pichai became CEO of Google in 2015. Come October, he will have been CEO for four years. Blaming predecessors for a problem that has been festering at YouTube for Pichai’s entire four-year tenure as CEO rings dangerously hollow. This was a problem in 2014, when Wojcicki took over YouTube. It was a problem in 2015 when Pichai took over Google. It was still a problem in 2016 and 2017 and 2018. And it is still a problem now. When does a current problem stop being your predecessor’s fault?

YouTube seemed perfectly comfortable capitalizing on ad revenue when its “up next” feature was recommending white supremacist content as well as scores of conspiracy theories, disinformation, and otherwise toxic video to users from 2014 to early 2019. The practice didn’t stop until February of 2019 (at least with regard to white supremacist content), a mere two months after Pichai was called to testify before Congress.

Public and legislative pressure were enough to trigger changes at YouTube in just two months’ time that Pichai and Wojcicki together somehow weren’t able to address for almost half a decade.

How about that.

To make matters worse, YouTube’s still very new white supremacist content ban (June 2019) came several months after Facebook announced its own policy (in March 2019), making YouTube (and parent company Google) relatively late entrants in the “Let’s clean up our platforms” game.

Pichai is leading from behind, and only then under the threat of enforcement. Which brings us to our final topic.

The regulators are coming

Google and Facebook have giant targets painted on their backs right now. There’s talk of regulating big tech again, and the topic is gaining momentum this time. This is in part because social platforms like YouTube, Twitter, and Facebook have been profiting from political extremism, pseudoscience, misinformation, and radicalization for years, and those chickens have come home to roost with a vengeance.

Yes, the real issue with regulation and antitrust for big tech focuses more on data and privacy, but the toxicity of these platforms, and the growing danger they pose to public safety, cannot be ignored.

Social platforms’ toxic and harmful content problem has been obvious and reported on extensively for some time, and yet it took the growing threat of regulation and antitrust enforcement to finally get Pichai (Google, YouTube), Zuckerberg (Facebook), and Dorsey (Twitter) to start taking the issue seriously.

This was most assuredly not because they felt that it was their responsibility to get their houses in order, or they would have made a move earlier. Instead, it is because they don’t want to be regulated or broken up.

Given the very thin ice that big tech is already skating on, Pichai didn’t do himself, YouTube, or Google any favors with his statements this week. If nothing else, his lackluster answer just provided fresh ammunition to lawmakers and ambitious bureaucrats betting their careers on the upcoming fight between Washington DC and Google.

A version of this story originally appeared on the Futurum Research blog.